What are hybrid CPUs? Hybrid CPUs combine fast but power-hungry cores (P-cores) with slower but more energy-efficient cores (E-cores) on a single chip. Most consumer desktop CPUs from Intel since Alder Lake (12th generation, model numbers 12xxx or higher, launched in late 2021) are hybrid CPUs. If you have recently bought an Intel CPU, chances are very high that you have bought a hybrid CPU.

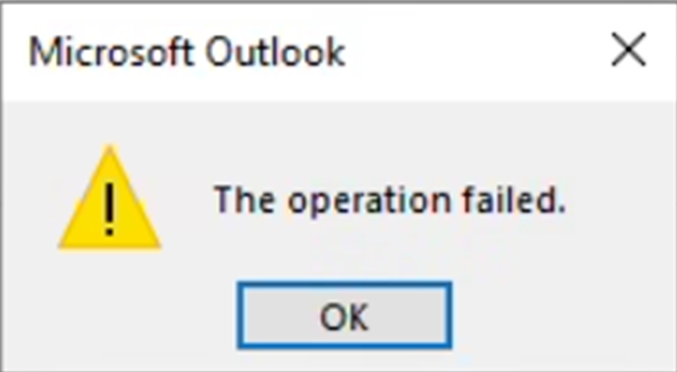

In theory, everything should run faster on newer CPUs. In practice, there are issues when you run software which was not prepared for hybrid CPUs, e.g. when you need to replace old machines with newer ones that have hybrid CPUs, but your software still needs Windows 10.

There are many great reviews online which discuss the performance of hybrid CPUs on Windows. Some reviewers have also noticed issues with processes running at Below Normal priority:

Intel gave an example of a content creator, exporting a video, and while that was processing going to edit some images. This puts the video export on the efficiency cores, while the image editor gets the performance cores. In my experience, the limiting factor in that scenario is the video export, not the image editor – what should take a unit of time on the P-cores now suddenly takes 2-3x on the E-cores while I’m doing something else. This extends to anyone who multi-tasks during a heavy workload, such as programmers waiting for the latest compile. Under this philosophy, the user would have to keep the important window in focus at all times. Beyond this, any software that spawns heavy compute threads in the background, without the potential for focus, would also be placed on the E-cores.

Personally, I think this is a crazy way to do things, especially on a desktop. Intel tells me there are three ways to stop this behavior:

- Running dual monitors stops it

- Changing Windows Power Plan from Balanced to High Performance stops it

- There’s an option in the BIOS that, when enabled, means the Scroll Lock can be used to disable/park the E-cores, meaning nothing will be scheduled on them when the Scroll Lock is active.

From https://www.anandtech.com/show/17047/the-intel-12th-gen-core-i912900k-review-hybrid-performance-brings-hybrid

Many CPU-hungry number crunchers such as compilers, video editors, ray tracers and similar software try to be nice to the user: they lower the priority of their threads or computation child processes so that the system stays responsive while the CPU is doing hard work on all cores. That approach has worked well for a long time, but it has unintended side effects which are not well understood once hybrid CPUs enter the game.

Windows 10

Let’s do a simple experiment. We use Windows 10 and CPU Stress from Sysinternals and create 7 threads inside a Below Normal process. I use 7 because some other things might already be running, so I leave some headroom on the 8th E-core.

When we observe the CPU load in Task Manager, we find that all threads of a Below Normal process run on the E-cores only (the last 8 logical CPUs on an i7-13700). If we raise the process priority back to Normal, the load is scheduled on the P-cores and everything is fast again, which matters if the load is e.g. an expensive compile or video editing step.

But we can achieve the same effect simply by selecting the CPU Stress window. That causes Windows to raise the priority of the foreground process, and suddenly mitigation option 1 mentioned by Dr. Ian Cutress makes sense. If the application has multiple processes which form a UI, you might just put the focus on the right one to speed things up again.

Disabling the E-cores (mitigation 3) is possible in some, but not all, BIOS versions. What most offer is the option to forcefully park the E-cores, so no work is scheduled on them. In my BIOS this is called Legacy Game Mode, which is activated with the Scroll Lock hotkey.

That fix does work, but you always need to remember to toggle the hotkey to turn the E-cores off. Besides the usability issue, the main problem is that truly multithreaded applications which utilize e.g. all cores will experience large slowdowns, because they now suffer from thread oversubscription. The application sees 20 logical cores (e.g. i5-13600K), but due to core parking its 20 threads are squeezed onto 12 logical cores, which means that 8 threads have to compete for the same cores, leading to thread contention.
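The oversubscription arithmetic can be spelled out in a few lines. This is a minimal Python sketch; the helper names are hypothetical, and it assumes the i5-13600K layout of 6 Hyper-Threaded P-cores plus 8 E-cores, with one busy thread per logical core the application sees.

```python
def logical_cores(p_cores: int, e_cores: int, hyper_threading: bool = True) -> int:
    """Logical processors the OS sees: 2 per Hyper-Threaded P-core, 1 per E-core."""
    return p_cores * (2 if hyper_threading else 1) + e_cores


def excess_threads(threads: int, available_cores: int) -> int:
    """Busy threads that cannot get a logical core of their own and must share one."""
    return max(0, threads - available_cores)


# i5-13600K: 6 P-cores with Hyper-Threading plus 8 E-cores.
all_cores = logical_cores(p_cores=6, e_cores=8)  # what the application sees
parked = logical_cores(p_cores=6, e_cores=0)     # E-cores parked via the Scroll Lock mode
print(all_cores, parked, excess_threads(all_cores, parked))  # prints: 20 12 8
```

With 20 busy threads and only 12 usable logical cores, 8 threads are always waiting for a core, which is where the slowdown comes from.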

Power Plans

Switching to the High Performance power plan (mitigation option 2) works. What is not explained is why this works, or what the differences between the power plans on Windows are. On the surface it looks easy. You have 3 default plans:

- Power Saver

- Balanced

- High Performance

and you can choose one of them.

By default there are only two settings visible in the UI:

- Minimum/Maximum Processor State

and that’s it. But in reality, a current Windows 11 (23H2) machine has 75 different settings. Are all of them relevant? No. By far most of the settings come from Intel and configure SpeedStep/SpeedShift, which can change voltage and frequency more quickly than the OS is able to when the CPU load changes.

That is a lot of settings. What are the differences between the three main power plans? I have updated ETWAnalyzer to answer that question.

To dump all files whose file name contains Win10, printing only the properties which differ between them:

```
ETWAnalyzer -dump power -fd win10 -diff
```

Then you get a nice list of the changes. Now we also know why changing the power profile from Balanced to High Performance solves the E-core oversubscription issue:

The High Performance profile sets the Hetero Thread Scheduling Policy to PreferPerformantProcessors instead of Automatic, which apparently means "prefer E-cores". That is the setting which fixes the low-priority process CPU problem on Windows 10.
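Conceptually, a `-diff` style comparison keeps only the settings whose values are not identical across all compared plans. The following Python sketch illustrates that idea; it is not ETWAnalyzer's implementation, the dictionary layout is an assumption, and the values are an illustrative subset taken from the Windows 10 defaults described in this post.

```python
def diff_settings(plans: dict[str, dict[str, object]]) -> dict[str, dict[str, object]]:
    """Keep only the settings whose value is not the same in every plan.

    A setting missing from a plan is reported as None for that plan.
    """
    all_keys = sorted({key for settings in plans.values() for key in settings})
    diffs: dict[str, dict[str, object]] = {}
    for key in all_keys:
        values = {plan: settings.get(key) for plan, settings in plans.items()}
        if len(set(values.values())) > 1:  # at least two plans disagree
            diffs[key] = values
    return diffs


# Illustrative subset of Windows 10 plan values (not a full export).
win10 = {
    "Balanced": {"HeteroPolicy": 4, "HeteroThreadSchedulingPolicy": "Automatic"},
    "High Performance": {"HeteroPolicy": 4, "HeteroThreadSchedulingPolicy": "PreferPerformantProcessors"},
}
for setting, values in diff_settings(win10).items():
    print(setting, values)
```

Only `HeteroThreadSchedulingPolicy` survives the filter here, because both plans share the same Hetero Policy value.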

Since we can compare power profiles, it is now also easy to compare them between Windows 10 and 11 by exporting the data to CSV with ETWAnalyzer. The result had a lot of columns, so I transposed the table and arrived at this:

Entries marked orange are new in Windows 11 and did not exist in Windows 10. Red fields are values which have changed in Windows 11. Quite a few changes are visible, but only a few of them make a measurable difference in simple load tests.
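The transposition step itself is small. Here is a minimal Python sketch using only the standard `csv` module; the column layout (one row per ETW file, one column per setting) and the file names are hypothetical.

```python
import csv
import io
from itertools import zip_longest


def transpose_csv(text: str) -> str:
    """Swap rows and columns of a CSV table; short rows are padded with empty cells."""
    rows = list(csv.reader(io.StringIO(text)))
    out = io.StringIO()
    writer = csv.writer(out, lineterminator="\n")
    writer.writerows(zip_longest(*rows, fillvalue=""))
    return out.getvalue()


# Hypothetical export: one row per ETW file, one column per power setting.
exported = "File,HeteroPolicy,SchedPolicy\nwin10_balanced,4,Automatic\nwin11_balanced,0,Automatic\n"
print(transpose_csv(exported), end="")
# File,win10_balanced,win11_balanced
# HeteroPolicy,4,0
# SchedPolicy,Automatic,Automatic
```

After transposing, each setting becomes one row, so many files can be compared side by side without scrolling through dozens of columns.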

E.g. the main setting which makes the difference between Balanced and Power Saver is MaxThrottleFrequency % Class 1 (Maximum processor state for Processor Power Efficiency Class 1), which defines how high the P-cores can boost. The Power Saver profile sets it to 75%. The MaxEfficiencyFrequency MHz Class 1 (Maximum processor frequency for Processor Power Efficiency Class 1) setting is similar and lets you control the P-core frequency directly. If it is set, it overrides the MaxThrottleFrequency % setting.
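The precedence between the two Class 1 limits can be written as a tiny function. This is a deliberately simplified Python model, not how Windows or the CPU computes frequencies: it assumes the percentage scales a nominal maximum boost clock linearly and that a MHz value of 0 means "not set". The 5000 MHz boost clock is hypothetical.

```python
def effective_p_core_cap_mhz(nominal_max_mhz: float,
                             max_state_percent: float,
                             max_frequency_mhz: float = 0.0) -> float:
    """Simplified model of the two Class 1 limits.

    A non-zero MHz limit (MaxEfficiencyFrequency) overrides the percentage;
    otherwise the percentage (MaxThrottleFrequency) scales the nominal maximum.
    """
    if max_frequency_mhz > 0:
        return float(max_frequency_mhz)
    return nominal_max_mhz * max_state_percent / 100.0


# Hypothetical 5000 MHz P-core boost clock with the Power Saver value of 75 %.
print(effective_p_core_cap_mhz(5000, 75))        # prints: 3750.0
print(effective_p_core_cap_mhz(5000, 75, 4000))  # prints: 4000.0 (MHz limit wins)
```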

Windows 10/11 Scheduling Differences

The main knobs in the power profile are:

- Heterogeneous policy in effect
  - Values 0-4
  - Default is 4 on Windows 10
  - Default is 0 on Windows 11, except for the Power Saver profile where it is 4
- Heterogeneous thread scheduling policy
  - Values 0-5
  - Default is Automatic on Windows 11
  - PreferPerformantProcessors or Automatic on Windows 10, depending on the power profile
  - 0=AllProcessors
  - 1=PerformantProcessors
  - 2=PreferPerformant
  - 3=EfficientProcessors
  - 4=PreferEfficient
  - 5=Automatic
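When scripting the tests it helps to translate raw values back into names. A minimal Python sketch, assuming the value table above; the class and function names are hypothetical and not part of any Windows API.

```python
from enum import IntEnum


class HeteroThreadSchedulingPolicy(IntEnum):
    """Values 0-5 of the 'Heterogeneous thread scheduling policy' setting (SCHEDPOLICY)."""
    AllProcessors = 0
    PerformantProcessors = 1
    PreferPerformant = 2
    EfficientProcessors = 3
    PreferEfficient = 4
    Automatic = 5


def describe(raw_value: int) -> str:
    """Translate a raw powercfg value into its name; raises ValueError outside 0-5."""
    return HeteroThreadSchedulingPolicy(raw_value).name


print(describe(5), describe(0))  # prints: Automatic AllProcessors
```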

I have created a simple load test which keeps n threads busy for 10 s at a given process priority; I ran it with 12 threads at 3 process priorities (BelowNormal, Normal, AboveNormal). A script then configures all possible combinations of both policies and runs the test for each one. Here is the code of my simple LoadStress app:

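```csharp
using System;
using System.Diagnostics;
using System.Linq;
using System.Threading.Tasks;

namespace LoadStress
{
    internal class Program
    {
        static void Main(string[] args)
        {
            var total = Stopwatch.StartNew();

            // Busy loop for 10 s of wall-clock time. Each thread consumes ~10 s of CPU
            // regardless of core type or frequency, unless it has to wait for a core.
            Action acc = () =>
            {
                var sw = Stopwatch.StartNew();
                while (sw.Elapsed.TotalSeconds < 10)
                {
                    // spin
                }
            };

            if (args.Length < 2)
            {
                Console.WriteLine("LoadStress [Idle, BelowNormal, Normal, AboveNormal, High] nThreads");
                return;
            }

            string prio = args[0];
            if (!Enum.TryParse(prio, true, out ProcessPriorityClass prioEnum))
            {
                Console.WriteLine("Process Priority can be Idle, BelowNormal, Normal, AboveNormal, High");
                return;
            }

            // The base priority of all threads, including thread pool threads, derives from this class.
            Process.GetCurrentProcess().PriorityClass = prioEnum;

            int threads = int.Parse(args[1]);

            // Run n copies of the busy loop in parallel on the calling thread and thread pool threads.
            var delegates = Enumerable.Repeat(acc, threads).ToArray();
            Parallel.Invoke(delegates);

            total.Stop();
            Console.WriteLine($"Did start {threads} threads in process with priority {prioEnum} in {total.Elapsed.TotalSeconds:F1} s");
        }
    }
}
```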

The key insight was that I want to measure CPU scheduling, not CPU performance. I simply start n threads, each running a busy loop for 10 s of wall-clock time, which is independent of CPU frequency and core type. That way every thread consumes the same amount of CPU time regardless of the core type it runs on: if no thread contention shows up, one thread consumes 10 s of CPU.

Each run modifies the current power profile (I used Balanced) and then runs the LoadStress application:

```
powercfg /SETACVALUEINDEX SCHEME_CURRENT SUB_PROCESSOR HETEROPOLICY 3
powercfg /SETACVALUEINDEX SCHEME_CURRENT SUB_PROCESSOR SCHEDPOLICY 1
powercfg /SETACTIVE SCHEME_CURRENT
LoadStress.exe BelowNormal 12
```
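Generating these command lines for every combination is easy to automate. The Python sketch below only builds the command strings shown above for all Hetero Policy, Hetero Thread Policy and priority combinations of one base profile; the function names are hypothetical, and recording an ETW trace around each run is left out.

```python
from itertools import product

HETERO_POLICIES = range(5)  # HETEROPOLICY values 0-4
SCHED_POLICIES = range(6)   # SCHEDPOLICY values 0-5
PRIORITIES = ("BelowNormal", "Normal", "AboveNormal")


def commands_for_run(hetero: int, sched: int, priority: str, threads: int = 12) -> list[str]:
    """The four command lines of one test run."""
    return [
        f"powercfg /SETACVALUEINDEX SCHEME_CURRENT SUB_PROCESSOR HETEROPOLICY {hetero}",
        f"powercfg /SETACVALUEINDEX SCHEME_CURRENT SUB_PROCESSOR SCHEDPOLICY {sched}",
        "powercfg /SETACTIVE SCHEME_CURRENT",
        f"LoadStress.exe {priority} {threads}",
    ]


def run_matrix() -> list[list[str]]:
    """All policy/priority combinations for one base power profile."""
    return [commands_for_run(h, s, p)
            for h, s, p in product(HETERO_POLICIES, SCHED_POLICIES, PRIORITIES)]


matrix = run_matrix()
print(len(matrix))  # prints: 90
print("\n".join(matrix[0]))
```

One base profile yields 5 × 6 × 3 = 90 runs; repeating that for both the Balanced and the High Performance base profile gives 180.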

The test application consumes 120 s of CPU. Both tested CPUs (i5-13600 and i7-13700) have 8 E-cores and 6 and 8 P-cores respectively, with Hyper-Threading enabled, resulting in a total of 20 and 24 logical cores. What is the most performant thread scheduling for 12 threads? On the i7-13700, either 8 P-cores and 4 E-cores should be used, or, if P-cores are preferred, the load goes completely onto 12 logical P-cores. E-cores are 2-3x slower than P-cores, but if you put concurrent load on both hyper-threads of a P-core, you do not get much more throughput than from a single P-core either. In the end it depends on the specific load type whether you gain anything from mixed P/E-core scheduling or not.

Based on that, I would expect a total CPU consumption of 120 s if everything is optimal: 80 s of load on the P-cores and up to 40 s on the E-cores, if the hyper-threaded sibling cores are skipped in favor of the E-cores.

If the combined CPU consumption on P- and E-cores is below 120 s, we have thread starvation issues, because the OS schedules multiple threads onto the same core, leading to thread contention. If you are reading this, you are usually aiming at 80-120 s of CPU spent on the P-cores and the remaining 40-0 s spent on the E-cores. Let’s check how Windows 10 and 11 behave when we use either the Balanced or the High Performance profile as base profile while switching Hetero Policy, Hetero Thread Policy and process priority. This is already quite a matrix of 5*6*3*2 = 180 combinations, which is difficult to visualize with any chart type. In the end I opted for a simple table with embedded graphs over the actual values, which gives the most insight.
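The expected split and the contention check can be expressed as two small functions. This Python sketch is an illustration under stated assumptions: the "ideal" placement puts one thread on each physical P-core first, then on the E-cores, and only the rest on Hyper-Threading siblings; the 5 s tolerance (giving a 115 s threshold for 12 threads) is an assumption chosen to match the 115 s cutoff used later in this post. All names are hypothetical.

```python
def expected_split(threads: int, p_cores: int, e_cores: int,
                   duration_s: float = 10.0) -> tuple[float, float]:
    """Ideal CPU seconds on (P-cores, E-cores) for busy threads of equal duration."""
    if threads > 2 * p_cores + e_cores:
        raise ValueError("more threads than logical cores")
    on_p = min(threads, p_cores)             # one thread per physical P-core
    on_e = min(threads - on_p, e_cores)      # then fill the E-cores
    on_siblings = threads - on_p - on_e      # rest goes to Hyper-Threading siblings
    return (on_p + on_siblings) * duration_s, on_e * duration_s


def has_contention(p_core_s: float, e_core_s: float, threads: int = 12,
                   duration_s: float = 10.0, tolerance_s: float = 5.0) -> bool:
    """Threads that had to wait for a core consumed less than threads * duration CPU seconds."""
    return p_core_s + e_core_s < threads * duration_s - tolerance_s


print(expected_split(12, p_cores=8, e_cores=8))  # i7-13700: (80.0, 40.0)
print(expected_split(12, p_cores=6, e_cores=8))  # i5-13600: (60.0, 60.0)
print(has_contention(20.0, 0.0))                 # only 2 cores busy: True
```

Note that the 80 s / 40 s expectation applies to the i7-13700; with only 6 P-cores, the i5-13600 would ideally split the load 60 s / 60 s under the same placement rule.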

If you want to force the workload of Below Normal processes onto the P-cores on Windows 10, you should use Hetero 3, which puts Below Normal processes on the P-cores with the highest value of 110 s/120 s.

Using the High Performance profile, as suggested by Dr. Ian Cutress, solves the problem only partially; it uses Hetero 4 and Hetero Thread scheduling PerformantProcessors. The many other configuration knobs did not make a measurable difference for our synthetic load without spikes.

Normally we would expect a total CPU of 120 s running on 12 or more cores, which is nicely visualized in WPA by logical CPU number.

But sometimes strange things happen on Windows 10 when we confine Hetero Thread scheduling to Efficiency or Performance cores. In this case, not 12 cores but only 2 were used during the 10 s runtime with 12 threads:

I am not sure why this is happening. If you use the default Windows 10 policy Hetero 4 and confine the load to specific core types via Hetero Thread scheduling, you can be penalized by the Windows 10 scheduler. See Windows 10 – Hetero 4 – PerformantProcessors for AboveNormal and Normal processes, where in the worst case only one core is assigned during the application runtime. Why is this an issue?

Because these are the standard settings of the High Performance power profile, which is the recommended mitigation for the E-core preference in the Balanced power profile! My tests show that the recommended solution can make matters worse on Windows 10.

The Hetero Profiles 0-4 are not documented by MS, but Intel has published some documentation for 0 and 4:

Setting Value: 0 (i.e., Standard Parking or Favored Core Parking)

In this configuration, the optimum set of compute cores are unparked starting with the most performant cores first.

Setting Value: 4 (i.e., Hetero Parking)

In this configuration, based off utilization, a combination of most performant or most efficient cores are unparked first.

In certain scenarios like low power envelope SKUs or better battery life goals, it can be more efficient to run low utilization work on cores with higher efficiency capability at efficient frequency. This policy is used in these scenarios in combination with optimal performance state engine settings.

From https://www.intel.com/content/dam/develop/external/us/en/documents-tps/348851-optimizing-x86-hybrid-cpus.pdf

Based on my measurements, the scheduling behavior of the 5 Hetero Profiles on Windows 10 can be summarized as follows:

- Hetero 0: Distribute load across all cores with a preference for P-cores (default on Windows 11).

- Hetero 1: Same as 0.

- Hetero 2: Confine work entirely to E-cores.

- Hetero 3: Distribute load onto P-cores more strongly than Hetero 0, but allow E-core usage.

- Hetero 4: Prefer E-cores for low priority tasks, and constrain load on some core types when PerformantProcessors or EfficientProcessors is used as Hetero Thread scheduling option (default on Windows 10).

So what is best? Hetero Thread Scheduling Automatic, the default in the Balanced power profile, puts our Below Normal process onto the E-cores. The numbers look stable, just the wrong cores are used:

Now let’s go back to the High Performance profile defaults:

We get P-cores, but erratic scheduling where, for unknown reasons, not all 12 needed P-cores are utilized. This issue happens sporadically, not all the time. If you have short running tests, everything will look fine, but if you let the 10 s LoadStress test run 20 times, you will find erratic scheduling. Based on my measurements, I would say this is a bug in the kernel scheduler which affects CPU heavy workloads that use many cores. If the load is constant, it will settle after some time, but the ramp-up phase until the kernel grants all needed cores takes many seconds, as the tests have shown. This issue likely hurts short-lived processes which employ many threads on Windows 10 on hybrid CPUs with the High Performance power profile.

Solution

Currently the best way out seems to be to use:

- Hetero Policy 3

- Hetero Thread Policy AllProcessors

This schedules all P-cores first and then overflows the rest to the E-cores, which should give you a better mix than the random shuffling that sometimes happens with the default profiles. Background processes are not unimportant to the user just because they have lower priority. The user may be running a video AI enhancement or a compile step in the background, with lowered process priority precisely so that the machine does not freeze.
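Applying this recommendation uses the same powercfg calls as the test script, with SCHEDPOLICY 0 standing for AllProcessors. The following Python sketch is a dry-run helper under that assumption: by default it only returns the command lines; with `dry_run=False` it runs them via `subprocess`, which only works on Windows. The function name is hypothetical, and like the test script it only changes the AC (plugged-in) values of the current scheme.

```python
import subprocess

# Raw powercfg values: HETEROPOLICY 3, SCHEDPOLICY 0 (= AllProcessors).
RECOMMENDED = {"HETEROPOLICY": 3, "SCHEDPOLICY": 0}


def apply_recommended(dry_run: bool = True) -> list[list[str]]:
    """Build (and optionally run) the powercfg calls for the current scheme's AC values."""
    commands = [
        ["powercfg", "/SETACVALUEINDEX", "SCHEME_CURRENT", "SUB_PROCESSOR", name, str(value)]
        for name, value in RECOMMENDED.items()
    ]
    commands.append(["powercfg", "/SETACTIVE", "SCHEME_CURRENT"])
    if not dry_run:
        for cmd in commands:
            subprocess.run(cmd, check=True)  # Windows only
    return commands


for cmd in apply_recommended():
    print(" ".join(cmd))
```

On a laptop running on battery you would presumably also want to set the DC values with `powercfg /SETDCVALUEINDEX`.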

Windows 11

So far I have talked mostly about Windows 10. What about Windows 11? The results are much less dramatic thanks to the Intel Thread Director. From the numbers, there seems to be little difference between the Hetero settings. The only impactful setting which is strictly adhered to is the Hetero Thread Policy EfficientProcessors, which confines the load entirely to the E-cores. A deeper inspection will need to wait for another day. Thread Director is quite smart: it detects specific CPU instructions like pause/mwait and other things which consume cycles but do not need to be fast and can therefore safely be put onto E-cores. Covering that would require testing many different workloads, which is outside the scope of a simple blog post.

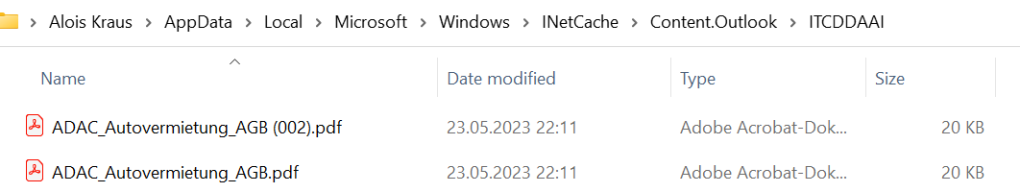

Conclusions

I do not want to believe. I want to measure things and verify whether the claims are true. Profiling with ETW is extremely helpful to find out later what caused unexpected anomalies during measurements. In total I generated 600 ETW files with a size of 90 GB. After extraction with ETWAnalyzer, 800 MB of JSON files were left, which are much quicker to query than opening and looking at 600 files in WPA. To find e.g. all .etl files which have scheduling issues with specific settings, I just need to query all LoadStress processes and filter for processes which have CPU > 115 s. All files with less CPU show up with 0 CPU, and those are the interesting ones. Good file naming is essential to make such an approach work. But once you have the data, it is easy:

```
EtwAnalyzer -dump CPU -pn LoadStress -ShowTotal Total -fd *_Normal* -MinMaxCPUMs 115s
```

That simple query immediately shows that Windows 11 thread scheduling is rather unspectacular in terms of thread contention. The only case where thread contention happens is when the load is confined to efficient processors, which is expected for our load because we use 12 threads but have only 8 E-cores.
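The same kind of filter can be sketched over already extracted per-file totals. This Python example is not ETWAnalyzer's query engine: the record format (a file name plus total LoadStress CPU seconds) and the file names are hypothetical, and it directly lists the runs below the threshold instead of showing them as 0 CPU.

```python
from fnmatch import fnmatchcase


def suspicious_runs(runs: list[dict], file_pattern: str = "*_Normal*",
                    min_cpu_s: float = 115.0) -> list[str]:
    """Return files of matching runs whose LoadStress CPU stayed below the threshold.

    Matching is case-insensitive, like a file-name filter on Windows.
    """
    pattern = file_pattern.lower()
    return [run["file"] for run in runs
            if fnmatchcase(run["file"].lower(), pattern) and run["cpu_s"] < min_cpu_s]


# Hypothetical per-file totals extracted from the JSON files.
runs = [
    {"file": "Win10_Balanced_Hetero4_PerformantProcessors_Normal.etl", "cpu_s": 20.0},
    {"file": "Win10_Balanced_Hetero3_AllProcessors_Normal.etl", "cpu_s": 120.0},
    {"file": "Win10_Balanced_Hetero4_Automatic_BelowNormal.etl", "cpu_s": 60.0},
]
print(suspicious_runs(runs))
```

The pattern `*_Normal*` does not match `_BelowNormal` or `_AboveNormal` files, which is why consistent file naming makes such filters reliable.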

Power profile settings are a deeply underexplored topic, and many myths are circulating in the (gaming) forums. I hope that the provided tooling will serve as a foundation for future quantitative measurements by other people. Many people seem to like flipping some switches and believing they have solved a performance problem. Without measuring you will never be able to tell. Your gut feeling is not exact enough to serve as a foundation for solving performance issues. Only by measuring and visualizing the previously unknown will you find new patterns and correlations which help you understand your system better. Your mental performance model is always too simple compared to what is really happening inside your computer.

That’s all for today. Now start measuring on your own!