See Update

With .NET Framework 4.7.2 out the door it was time to update my serialization performance test suite (https://github.com/Alois-xx/SerializerTests). Many serializers have been added since the article https://aloiskraus.wordpress.com/2017/04/23/the-definitive-serialization-performance-guide/ was written, which warrants a post of its own. The performance numbers in the old article were updated, but not all of its text.

First of all, the pesky BinaryFormatter O(n^2) issue is gone with .NET 4.7.2 if you add the following to your App.config:

```xml
<?xml version="1.0" encoding="utf-8"?>
<configuration>
  <runtime>
    <!-- Use this switch to make BinaryFormatter fast with large object graphs starting with .NET 4.7.2 -->
    <AppContextSwitchOverrides value="Switch.System.Runtime.Serialization.UseNewMaxArraySize=true" />
  </runtime>
</configuration>
```
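If editing App.config is not an option (for example in a hosted process), the same switch can, as far as I know, also be set in code via AppContext.SetSwitch, which exists since .NET Framework 4.6. This is a minimal sketch under the assumption that BinaryFormatter reads the switch on first use, so the call has to happen before any BinaryFormatter is touched. The class and method names are made up for illustration.

```csharp
using System;

public static class BinaryFormatterSwitch
{
    // Switch name taken from the App.config snippet above
    public const string Name = "Switch.System.Runtime.Serialization.UseNewMaxArraySize";

    // Call at the very start of Main, before any BinaryFormatter is used.
    // Assumption: the runtime reads (and caches) the switch value on first use.
    public static void Enable() => AppContext.SetSwitch(Name, true);

    // True only if the switch has been defined and set to true
    public static bool IsEnabled() => AppContext.TryGetSwitch(Name, out bool enabled) && enabled;
}
```

On .NET Core the call is harmless but unnecessary, since its BinaryFormatter contains the fix anyway.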

.NET Core does not need such a setting because BinaryFormatter, which was added to .NET Core with version 2.0, contained the fix from the start. De/serialization on .NET Core (2.0.6) is nevertheless not faster than on the .NET Framework. You might ask: how can that be? .NET Core is performance obsessed and now it is slower? The answer is that .NET Core itself is not slower; rather, some serializers targeting .NET Standard use workarounds for early .NET Core versions. As always, you should measure in your actual target environment to avoid bad surprises.

Which Serializers Are Slower under .NET Core?

Most notably, MsgPack.Cli, ServiceStack.Text, BinaryFormatter and Bois perform significantly worse on .NET Core. MsgPack.Cli is more than twice as slow on .NET Core! How does this look under a CPU profiler?

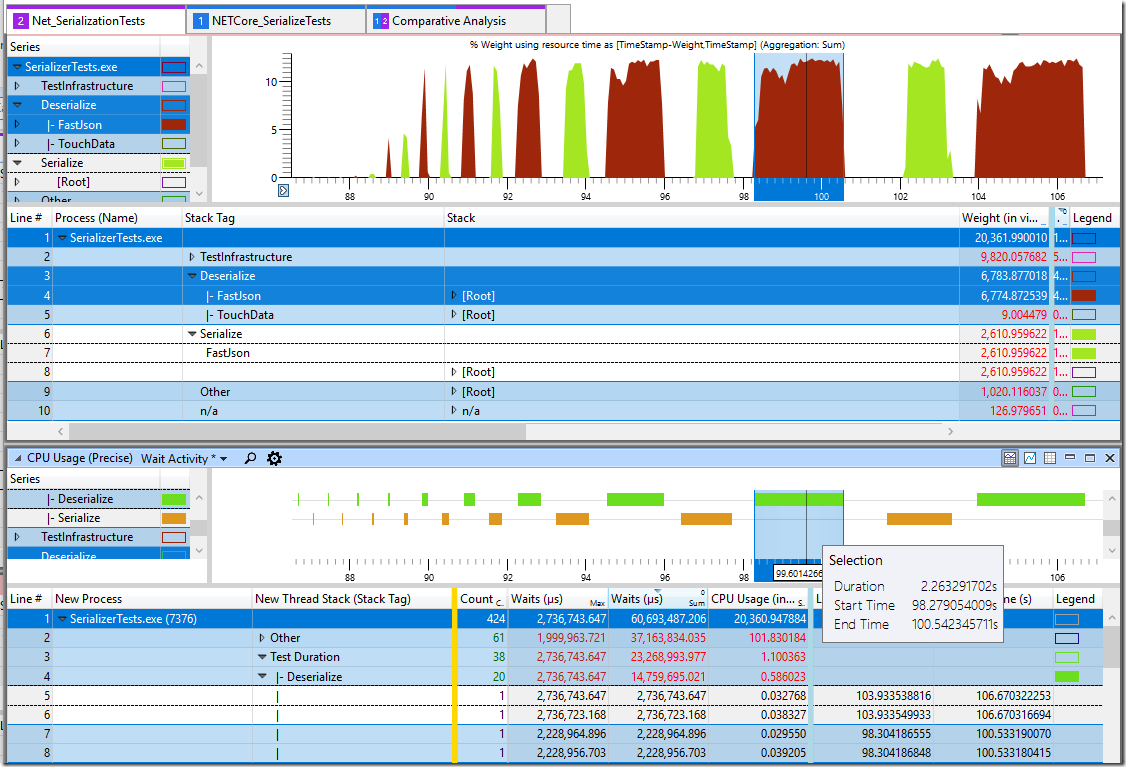

I have recorded two profiling sessions, one for .NET Core and one for the full .NET Framework. Trace #1 is .NET Core and Trace #2 is the full .NET Framework. The visible WPA tab is a comparative diff view where the graph shows .NET Core and the table contains the diff values. To read the values you need to know that they are calculated by subtracting Trace #2 from Trace #1 for each row. Negative values mean that .NET Core consumed more CPU than the .NET Framework tests. The Weight column shows the CPU time difference in ms, with a comma as the thousands separator and a period as the decimal point. I know that is a lot of information, but once you get used to that level of detail you will never go back to simple timing-based tests where you wonder why the timing always fluctuates. Here I have dissected the worst-performing serializers in WPA.

What is the reason for that? If one factors out Reflection Get/SetValue and Activator.CreateInstance from the profiled data, the remaining delta is within the error margin of ca. 10%, and the delta table no longer shows large deserialization time differences. All of the differences come from many calls to FieldInfo.GetValue/SetValue and Activator.CreateInstance. This is not the case when the same serializers (same serializer, but a different dll and hence different code!) run on the regular .NET Framework.

The key takeaway is that the mentioned serializers fall back to good old slow Reflection and Activator.CreateInstance calls if you use their .NET Standard versions. This also includes BinaryFormatter, which is 20% slower on .NET Core than on the full .NET Framework.
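To illustrate the difference, here is a minimal sketch contrasting the reflection calls that dominate the .NET Core profile (FieldInfo.GetValue/SetValue, Activator.CreateInstance) with strongly typed delegates compiled once from expression trees. Compiled delegates are one common way to avoid per-call reflection overhead; I am not claiming this is what any of the named serializers does internally. The Book type and the AccessorSketch helper are hypothetical.

```csharp
using System;
using System.Linq.Expressions;
using System.Reflection;

// Hypothetical data type, only used for this illustration
public class Book
{
    public int Id;
    public string Title;
}

public static class AccessorSketch
{
    // Slow path: the reflection calls that show up in the .NET Core profile
    public static object ReadViaReflection(FieldInfo field, object instance) => field.GetValue(instance);
    public static void WriteViaReflection(FieldInfo field, object instance, object value) => field.SetValue(instance, value);
    public static Book CreateViaActivator() => (Book)Activator.CreateInstance(typeof(Book));

    // Fast path: compile a typed getter once, then call it like regular code
    public static Func<TObj, TField> CompileGetter<TObj, TField>(string fieldName)
    {
        ParameterExpression obj = Expression.Parameter(typeof(TObj), "obj");
        MemberExpression field = Expression.Field(obj, fieldName);
        return Expression.Lambda<Func<TObj, TField>>(field, obj).Compile();
    }

    // Typed setter; restricted to classes so the assignment is not made on a copy
    public static Action<TObj, TField> CompileSetter<TObj, TField>(string fieldName) where TObj : class
    {
        ParameterExpression obj = Expression.Parameter(typeof(TObj), "obj");
        ParameterExpression value = Expression.Parameter(typeof(TField), "value");
        BinaryExpression assign = Expression.Assign(Expression.Field(obj, fieldName), value);
        return Expression.Lambda<Action<TObj, TField>>(assign, obj, value).Compile();
    }

    // Typed factory that replaces Activator.CreateInstance
    public static Func<T> CompileFactory<T>() where T : new()
        => Expression.Lambda<Func<T>>(Expression.New(typeof(T))).Compile();
}
```

The delegates are meant to be built once per type and cached. Timing a few million calls of each variant with a Stopwatch in your own target environment is the easiest way to see how large the gap is there.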

Designed To Be Profiled

These are nice graphs which can tell you a lot about an application. With Stack Tags you can compare the CPU consumption of an exe and of a hosted dll that execute the same code on different runtimes. A comparison by call stack drill-down would be useless here because even the Main methods are located in different dlls. But by extracting the relevant information from your application logic into logical groups, you can compare runtime and resource consumption between a SerializerTests.exe and a dotnet SerializerTests.dll call without problems. The stack tag file used for these views is part of my serializer test suite at https://github.com/Alois-xx/SerializerTests/blob/master/SerializerStack.stacktags if you are interested.

The format of the .stacktags files is pretty simple:

```xml
<?xml version="1.0" encoding="utf-8"?>
<!--
  This is a Stacktag file for WPA to analyze the performance of serializers under ETW profiling.
  It allows easy comparison of .NET and .NET Core profiling data.
-->
<Tag Name="">
  <Tag Name="Deserialize">
    <Tag Name="BinaryFormatter">
      <Entrypoint Module="SerializerTests.*" Method="SerializerTests.Serializers.BinaryFormatter*::Deserialize*"/>
    </Tag>
    <!-- further serializer entries omitted -->
  </Tag>
</Tag>
```

To be able to compare .NET and .NET Core, the module is matched as SerializerTests.* because on .NET it compiles to SerializerTests.exe and on .NET Core to SerializerTests.dll, which is executed by the dotnet.exe process. If you compare the CPU time in the profiling data with the actual test duration from the CSV file, which is also created, you will find that the test duration is always longer. Even worse, it is pretty hard to zoom into the section of time where the test actually executes. The advantage of writing your own test suite is that you can make it profiling friendly. The solution to the timing problem is an extra thread that starts waiting for an event when the test starts; the event is signaled when the test has stopped. That way we get a context switch event for each test run and can also visualize the thread wait time for each and every test run.
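A minimal sketch of such a marker thread, assuming two AutoResetEvents: one tells the thread that a test run has begun, the other wakes it when the run is over, so its wait time should line up with the test duration in the CPU Usage (Precise) view. The class name TestRunMarker and its methods are hypothetical and not taken from the test suite.

```csharp
using System;
using System.Threading;

// Hypothetical helper: a dedicated thread that blocks for the duration of each test run,
// so every run appears in an ETW trace as one wait/context switch on this thread.
public sealed class TestRunMarker : IDisposable
{
    private readonly AutoResetEvent _testStarted = new AutoResetEvent(false);
    private readonly AutoResetEvent _testStopped = new AutoResetEvent(false);
    private readonly Thread _waiter;
    private volatile bool _disposed;
    private int _completedRuns;

    public TestRunMarker()
    {
        _waiter = new Thread(WaitLoop) { IsBackground = true, Name = "TestRunMarker" };
        _waiter.Start();
    }

    public int CompletedRuns => Volatile.Read(ref _completedRuns);

    // Call directly before the timed test code starts
    public void TestStarted() => _testStarted.Set();

    // Call directly after the timed test code has finished
    public void TestStopped() => _testStopped.Set();

    private void WaitLoop()
    {
        while (true)
        {
            _testStarted.WaitOne();   // idle until a test run begins
            if (_disposed) return;
            _testStopped.WaitOne();   // this wait spans one test run
            if (_disposed) return;
            Interlocked.Increment(ref _completedRuns);
        }
    }

    public void Dispose()
    {
        _disposed = true;
        _testStarted.Set();   // wake the thread whichever event it is waiting on
        _testStopped.Set();
        _waiter.Join();
        _testStarted.Dispose();
        _testStopped.Dispose();
    }
}
```

In a test loop each run would be wrapped as `marker.TestStarted(); /* serializer test */ marker.TestStopped();`. The events are auto-reset, so runs are expected to be sequential rather than overlapping.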

The graph below shows the CPU consumption for the deserialize tests of all tested formatters. The formatters are executed from fast to slow (in rough order).

The magic happens in the CPU Usage (Precise) view: with our custom stacktags we can visualize the test duration as a bar chart with a nice alternating pattern. If one test has a strange runtime between test runs and we have profiling data, we can now drill into each and every test case and do a full root cause analysis. Looking at a specific test run in the profiler is now as easy as zooming into the right test run and checking what took so long.

And The Winners Are

The fastest de/serializers are MessagePack-CSharp (https://github.com/neuecc/MessagePack-CSharp) from Yoshifumi Kawai and GroBuf (https://github.com/skbkontur/GroBuf) from Andrew Kostousov, which beat Protobuf by a factor of more than 2.5! I have no idea how these guys made them so fast, but this is the fastest C# code I have seen so far. MessagePack-CSharp even comes with code analyzers to make annotating your existing objects easy.

The problem with such fast serializers is that you cannot serialize object cycles (StackOverflowException with MessagePack-CSharp, GroBuf and Wire). Another problem is that they do not keep object identity (Wire has an opt-in flag, see SerializerOptions(preserveObjectReferences)). If your objects contain 100 references to the same 1 MB string, it will be serialized 100 times by value and you end up with 100 × 1 MB strings. As a general rule you need to pay attention not only to performance but also to the concrete feature set. If you cannot guarantee that your objects never contain object cycles, your application will crash hard without any further notice when you use such performance-optimized libraries. But if you design a reasonable data structure, then MessagePack-CSharp or GroBuf are hot options.

Yoshifumi Kawai also created ZeroFormatter, which has the crazy property of having zero deserialization time. The reason why ZeroFormatter does not show up in the winners section as well is that it sort of cheats the normal benchmarks. ZeroFormatter creates proxy objects on the fly which only contain an index into the byte array they were deserialized from. The actual deserialization cost shows up when you access the properties, which need to be public virtual for that reason. To not distort the measured values, the deserialize test also includes a touch phase that accesses each deserialized property once, and this is measured as the total deserialization cost. It turns out that the combined deserialize + touch cost is much higher than with protobuf-net. Personally I do not like ZeroFormatter because it is intrusive to your object design: you need to design your data structures so that the least amount of data is accessed in your use case. But use cases can and will change. Then you need to redesign your object hierarchy every time you have a different access pattern, or live with suboptimal performance.

Similar to ZeroFormatter is FlatBuffer (https://google.github.io/flatbuffers/), which comes with its own IDL and compiler to generate the code from a schema. It basically writes structs via memcpy into a byte array where each object reference is an index into another array. That makes it a great candidate if you want to share large datasets between processes via shared memory and read only a fraction of the data. Just as with ZeroFormatter, the data is deserialized when you actually access it. This is both a curse and a blessing. If you read some objects many times, you create many temporary objects, which will hurt GC performance. On the other hand, if you need only a few items of a large array, this lazy deserialization approach is perfect. FlatBuffer does not work with existing objects and it cannot cope with dictionaries, which makes it rather inflexible. One needs to know that FlatBuffer comes from game programming, where most of the data are coordinates and textures. For that it can be a good choice. On the plus side, it fully supports versioning despite being pretty low level.
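To make the lazy access idea concrete, here is a hand-rolled sketch of fixed-size records copied back to back into a byte array and decoded only when a property is read. It is not the FlatBuffers wire format or its generated code; the 20-byte layout and the PointView/PointTable names are assumptions for illustration. Because PointView is a struct, reading a record does not allocate on the heap; accessors that build strings or other objects would bring the GC cost back.

```csharp
using System;

// Hypothetical fixed-size record layout (not the FlatBuffers format):
// [int Id: 4 bytes][double X: 8 bytes][double Y: 8 bytes] = 20 bytes per record
public struct PointView
{
    public const int Size = 20;
    private readonly byte[] _buffer;
    private readonly int _offset;

    public PointView(byte[] buffer, int offset)
    {
        _buffer = buffer;
        _offset = offset;
    }

    // Each property decodes its bytes on access; nothing is materialized up front
    public int Id => BitConverter.ToInt32(_buffer, _offset);
    public double X => BitConverter.ToDouble(_buffer, _offset + 4);
    public double Y => BitConverter.ToDouble(_buffer, _offset + 12);
}

public sealed class PointTable
{
    private readonly byte[] _buffer;

    public PointTable(byte[] buffer) => _buffer = buffer;

    public int Count => _buffer.Length / PointView.Size;

    // Random access by index: only the requested record is ever read
    public PointView this[int index]
    {
        get
        {
            if ((uint)index >= (uint)Count) throw new ArgumentOutOfRangeException(nameof(index));
            return new PointView(_buffer, index * PointView.Size);
        }
    }

    // "Serialization" is just copying the raw field bytes back to back
    public static byte[] Write(int[] ids, double[] xs, double[] ys)
    {
        var buffer = new byte[ids.Length * PointView.Size];
        for (int i = 0; i < ids.Length; i++)
        {
            int offset = i * PointView.Size;
            Buffer.BlockCopy(BitConverter.GetBytes(ids[i]), 0, buffer, offset, 4);
            Buffer.BlockCopy(BitConverter.GetBytes(xs[i]), 0, buffer, offset + 4, 8);
            Buffer.BlockCopy(BitConverter.GetBytes(ys[i]), 0, buffer, offset + 12, 8);
        }
        return buffer;
    }
}
```

Such a byte array could live in a memory-mapped file shared between processes; a reader that needs only `table[42].X` never decodes the other records.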

| Serializer | Can Preserve Object References | Observations |
|---|---|---|
| MessagePackSharp | No | |
| GroBuf | No | |
| FlatBuffer | No | Data structures are created by the IDL compiler. |
| Wire | Only on paper: `new Serializer(new SerializerOptions(preserveObjectReferences: true))` | The Wire unit tests contain cyclic references, but that seems to work only for cycles on the same object. Real objects with many identical references are still serialized by value. |
| Jil | No | Cannot serialize dictionaries with DateTime as keys. |
| Protobuf_net | Yes, opt-in at declaration level (see StackOverflow question) | |
| SimSerializer | By default | |
| ZeroFormatter | No | Serializes only virtual properties. |
| DataContract | Yes: `new DataContractSerializer(type, new DataContractSerializerSettings { PreserveObjectReferences = true })` | |
| Bois | No | |
| JSON.NET | Not always: `JsonSerializer.Create(new JsonSerializerSettings { PreserveReferencesHandling = PreserveReferencesHandling.All })` | Still serializes by value when tried with more complex types like a dictionary. |
| ServiceStack | No | Closes the input stream (see SO question). |
| XmlSerializer | No | |
| MsgPack.Cli | No | |
| BinaryFormatter | Yes | |
| FastJson | No | Cannot round-trip DateTime with 100 ns resolution. |

By default most serializers do not track object references, which speeds up serialization significantly at the expense of larger serialized data.
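Since DataContractSerializer ships with .NET, it is an easy way to see the object identity issue for yourself: one Author instance referenced 100 times is round-tripped once by value and once with PreserveObjectReferences, the setting listed in the table. The Author/Library types and the ReferenceTrackingSketch helper are hypothetical.

```csharp
using System.Collections.Generic;
using System.IO;
using System.Runtime.Serialization;

// Hypothetical types: many list entries can point to the same Author instance
[DataContract]
public class Author
{
    [DataMember] public string Name;
}

[DataContract]
public class Library
{
    [DataMember] public List<Author> Authors = new List<Author>();
}

public static class ReferenceTrackingSketch
{
    // Serializes and deserializes through a MemoryStream and reports the payload size
    public static Library RoundTrip(Library library, bool preserveObjectReferences, out long sizeInBytes)
    {
        var serializer = new DataContractSerializer(typeof(Library),
            new DataContractSerializerSettings { PreserveObjectReferences = preserveObjectReferences });

        using (var stream = new MemoryStream())
        {
            serializer.WriteObject(stream, library);
            sizeInBytes = stream.Length;
            stream.Position = 0;
            return (Library)serializer.ReadObject(stream);
        }
    }
}
```

Without reference tracking the shared Author is written 100 times and comes back as 100 distinct objects. With tracking it is written once and the other entries become references, so the deserialized list again points to a single instance.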

Which One To Choose?

That is a tricky question because it strongly depends on whether you can change your existing object model radically or whether you have to keep backwards compatibility while switching to a different serializer. Based on experience I would mention protobuf-net as the most feature-complete and very fast serializer, where you will almost certainly find no blocking issues. For lesser-known serializers you should check the number of commits to the project and whether there is recent activity. If the library is no longer actively maintained because the author has shifted focus, you should not use it for mission-critical applications. If you are bound to a specific data format like JSON, Jil is by far the fastest serializer on the planet. But be prepared for unpleasant surprises, such as not being able to use Dictionary<DateTime, xxx> with Jil because it throws a NotSupportedException at you.

Despite their claims, not all serializers that advertise reference and object cycle tracking at the API level actually deliver it. That is a sign that not all code paths are equally well tested, and if your scenario differs from the main usage you are likely to hit unexpected issues.

If you can change everything, I would go for the fastest one, MessagePackSharp, because the author has a great track record in creating other serializers and is very active on his project. GroBuf, although equally fast, produces significantly larger serialized data, which can be an issue if you need to take into account not only serializer performance but also the data size sent over the wire. A three times larger binary payload can easily defeat any performance gain from a faster serializer if a slow network is in between. To be really sure that your data types work well with the target serializers, you can add your data object(s) to my test suite (https://github.com/Alois-xx/SerializerTests) and measure for yourself.
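To put rough numbers on this, take the 200,000-object MessagePackSharp run from the test output further down (3,357,449 bytes, 0.016 s to serialize, 0.032 s to deserialize) and assume, purely for illustration, a 100 Mbit/s link and a payload three times that size. Protocol overhead and compression are ignored; $S$ is the payload size in bytes and $B$ the bandwidth in bit/s.

$$
t_{\text{transfer}} = \frac{8\,S}{B}
$$

$$
t_{1\times} = \frac{8 \times 3\,357\,449\ \text{B}}{100 \times 10^{6}\ \text{bit/s}} \approx 0.27\ \text{s},
\qquad
t_{3\times} = 3\,t_{1\times} \approx 0.81\ \text{s}
$$

$$
t_{3\times} - t_{1\times} \approx 0.54\ \text{s} \;\gg\; 0.016\ \text{s} + 0.032\ \text{s} = 0.048\ \text{s}
$$

Under these assumptions the extra transfer time alone is about eleven times the complete serialize plus deserialize time.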

Plugging a new data type into the test suite is as simple as referencing the assembly that defines the type you care about. Then change the tested data type from BookShelf to your custom type, supply an object factory delegate to create your test data (Data) and optionally add a touch delegate that touches all properties after deserialization, to account for serializers that deserialize lazily on access.

```csharp
private void CreateSerializersToTest()
{
    SerializersToTest = new List<ISerializeDeserializeTester>
    {
        new MessagePackSharp<BookShelf>(Data, TouchBookShelf),
        …
    };
}
```
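What a factory and a touch delegate for your own type might look like, as a sketch: the OrderBook/Order types and the OrderBookTestData class are hypothetical, and I am assuming the factory receives the number of objects to create and the touch delegate receives the deserialized object. Check the actual delegate signatures in the test suite before wiring it up.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical custom data type replacing BookShelf
public class Order
{
    public int Id { get; set; }
    public string Customer { get; set; }
    public double Amount { get; set; }
}

public class OrderBook
{
    public List<Order> Orders { get; set; } = new List<Order>();
}

public static class OrderBookTestData
{
    // Assumed factory shape: receives the number of objects to create for one test run
    public static OrderBook Data(int nToCreate)
    {
        var book = new OrderBook();
        for (int i = 0; i < nToCreate; i++)
        {
            book.Orders.Add(new Order { Id = i, Customer = "Customer " + i, Amount = i * 0.5 });
        }
        return book;
    }

    // Result of the last touch; storing it keeps the property reads from being optimized away
    public static long LastChecksum;

    // Assumed touch shape: reads every property once so that lazily deserializing
    // serializers (e.g. ZeroFormatter) pay their full cost inside the measured time
    public static void TouchOrderBook(OrderBook book)
    {
        long checksum = 0;
        foreach (Order order in book.Orders)
        {
            checksum += order.Id;
            checksum += order.Customer.Length;
            checksum += (long)order.Amount;
        }
        LastChecksum = checksum;
    }
}
```

Registering it would then mirror the BookShelf line, e.g. `new MessagePackSharp<OrderBook>(Data, TouchOrderBook)`, provided the tester accepts delegates of these shapes and the types carry whatever attributes the respective serializer requires.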

```
D:\SerializerTests\bin\Release\net471>SerializerTests -test combined
Serializer                   Objects  "Time to serialize in s"  "Time to deserialize in s"  "Size in bytes"  FileVersion  Framework
MessagePackSharp<BookShelf>        1  0.000                     0.000                                    11  1.7.3.4      .NET Framework 4.7.3062.0
MessagePackSharp<BookShelf>        1  0.000                     0.000                                    11  1.7.3.4      .NET Framework 4.7.3062.0
MessagePackSharp<BookShelf>       10  0.000                     0.000                                    93  1.7.3.4      .NET Framework 4.7.3062.0
MessagePackSharp<BookShelf>      100  0.000                     0.000                                   996  1.7.3.4      .NET Framework 4.7.3062.0
MessagePackSharp<BookShelf>      500  0.000                     0.000                                  6014  1.7.3.4      .NET Framework 4.7.3062.0
MessagePackSharp<BookShelf>     1000  0.000                     0.000                                 12515  1.7.3.4      .NET Framework 4.7.3062.0
MessagePackSharp<BookShelf>    10000  0.001                     0.001                                138516  1.7.3.4      .NET Framework 4.7.3062.0
MessagePackSharp<BookShelf>    50000  0.004                     0.006                                738516  1.7.3.4      .NET Framework 4.7.3062.0
MessagePackSharp<BookShelf>   100000  0.010                     0.013                               1557449  1.7.3.4      .NET Framework 4.7.3062.0
MessagePackSharp<BookShelf>   200000  0.016                     0.032                               3357449  1.7.3.4      .NET Framework 4.7.3062.0
```

Then check whether the numbers are worth the change and whether all data ends up in the serialized payload. The serialized data is written to file

to make it easy to check if all data was really written to the output or if your class is missing some [MessagePackObject] or [Index] attributes to make the data show up in the serialized output. If you want to check out two different serializers you can let the test run only for the selected ones with

```
SerializerTests -Runs 1 -test combined -serializer protobuf,MessagePackSharp
```

to get your results fast. Now go and fix your serialization performance issues!

[…] Serialization Performance Update With .NET 4.7.2 – Alois Kraus […]

Thx for the time you have put in this. Great help!

[…] binary format for which a great library exist from Yoshifumi Kawai (aka neuecc) who did write the fastest one for C#. With 133 MB the resulting file is the smallest […]

Great overview! It directed me to protobuf-net, but it does not support multi-dimensional arrays, which is a dealbreaker in my case. Maybe this is good to know for others, too. Nevertheless, a fantastic comparison.

At first glance MessagePackSharp seems to me the next best solution, but interfaces have to be decorated with the concrete implementation types, which is not possible in my case. Another dealbreaker for me. Maybe also good to know for others.

Next issue with MessagePackSharp: It works only for classes with public constructors.

It isn’t super fast and the output is a little large but my serializer does have a few advantages:

* Requires absolutely no decorations or markup of any kind

* Does not require default constructors

* Supports polymorphism

* Supports interfaces

* Supports multi-dimensional arrays

https://github.com/Byrne-Labs/Serializer

https://www.nuget.org/packages/ByrneLabs.Serializer/

Oh, and it also supports references.

As for payload size, Bois is clearly the winner here. This test was done with Bois v2.2.

Version 3.0 introduces a more compact format, boosts performance and has better support for .NET Core.

https://github.com/salarcode/Bois
