Paging can cause bad interactive performance. This happens quite often, but very little has been written about how to diagnose and fix paging issues. It is time to change that (a bit). Here is a deep dive into how paging really works for some workloads and how it caused a severe interactive performance issue.

The Observation

It was reported that a software version did significantly worse than its predecessors when the system was under heavy load. Measurements proved that hard page faults were the issue.

A more detailed analysis showed that the high page-out rate of nearly 500 MB/s was caused by explicit working set trims. After removing these explicit EmptyWorkingSet calls, performance went back to normal. The question remained, though, why EmptyWorkingSet was suddenly a problem, because these calls had been there for a long time.

How Paging Really Works

To make such measurements on Windows 10 I need to give away some secrets. Some of you might have found the MMAgent PowerShell cmdlets already. If you look e.g. at the Windows Server 2012 Memory Management improvements, you will find some interesting switches which are also present in Windows 10 Anniversary. You can configure the Windows memory manager via PowerShell.

    PS C:\WINDOWS\system32> Get-MMAgent

    ApplicationLaunchPrefetching : True
    ApplicationPreLaunch         : True
    MaxOperationAPIFiles         : 256
    MemoryCompression            : False
    OperationAPI                 : True
    PageCombining                : True
    PSComputerName               :

On Windows 10 Anniversary the switches MemoryCompression and PageCombining are enabled by default. If you blame memory compression for bad performance, you can switch off MemoryCompression by calling

Disable-MMAgent -mc

To enable it again you can call

Enable-MMAgent -mc

The MemoryCompression switch seems not to be documented so far. If MemoryCompression is already enabled and you want to turn it off, you need to reboot. Once memory compression has been disabled successfully, Task Manager should show a Compressed value of zero all the time. If you enable memory compression, the setting takes effect immediately (no reboot necessary).

Now let's perform a little experiment: what happens when we trim the working set of a two GB process with my CppEater while memory compression is disabled?

C:\>CppEater.exe 2000

Below is the screenshot taken after CppEater flushed its working set. Some memory was left over in the Modified list because the memory manager did not see the need to flush all of the 2 GB out into the page file.

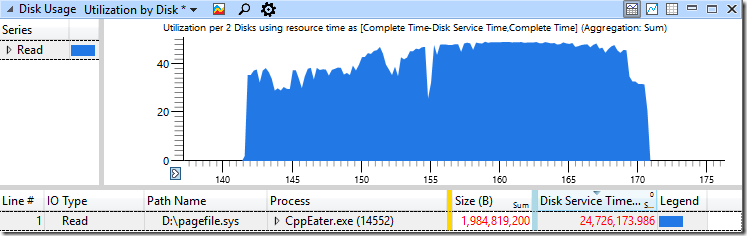

When the memory was flushed you see an IO spike on disk D where my page file resides.

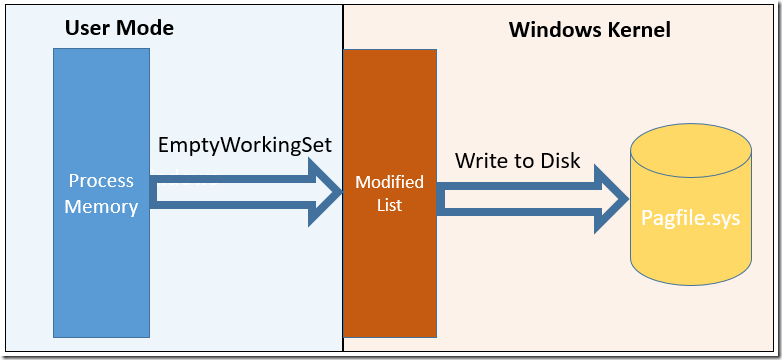

So far, so expected. Below is a diagram which shows the order of operations happening there.

In ETW traces this looks like this:

- When the memory is flushed we have two GB of data in the modified list.

- Later the OS flushes two GB of data into the page file.

From that picture it is clear that when we then touch the paged out memory again, we will need to read two GB of data from the page file. Now let's perform the experiment and see what happens:

Our active memory usage rises again by two GB, but what is strange is that there is zero disk activity when we access the paged out memory. According to our mental model we should see two GB of disk IO on the D drive. Let's have a look at ETW traces while the page file is being written.

While we write two GB of data to the page file the Standby list increases during that time by the same amount. That means that although the data is persistently written out to the page file we still have all of the written data in the file system cache in the form of the standby list. Since the contents of the page file are still in the file system cache (Standby List) we see no hard page faults when we try to access our paged out data again.

That is a good working hypothesis which we can test now. We only need to access files via buffered IO reads and do not close the file handles in between which will flush the file system cache. Then we should see our CppEater process hitting the hard disk to read its page file contents.

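Below is a small application that reads the Windows Installer cache, which is about 30 GB in size. That is more than enough to flush any existing file system cache contents:

    using System;
    using System.Collections.Generic;
    using System.IO;

    static class Program
    {
        static int Main(string[] args)
        {
            // Keep every stream open so no file handle is closed between reads.
            var streams = new List<FileStream>();
            byte[] buffer = new byte[256 * 1024 * 1024];
            foreach (var f in Directory.EnumerateFiles(@"C:\windows\installer", "*.*"))
            {
                try
                {
                    var file = new FileStream(f, FileMode.Open, FileAccess.Read);
                    streams.Add(file);
                    // Buffered read of the whole file through the file system cache.
                    while (file.Read(buffer, 0, buffer.Length) > 0) { }
                }
                catch (Exception) { } // skip files that cannot be opened or read
            }
            return 0;
        }
    }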

With a flushed file system cache, CppEater.exe no longer has a secret hiding place for its paged out memory. Now we see the expected two GB of hard disk reads, minus the modified memory that was never flushed out.

The picture of what happens when data is written to the page file was lacking an important detail: it left out the fact that the Modified list transitions to the Standby list, which is just another name for the file system cache.
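The corrected picture can be sketched as a small Python toy model of the list transitions without memory compression. This is not how the memory manager is implemented: the names `PageLists` and `refault`, the standby capacity of 8000 entries, the oldest-first eviction and the granularity of one entry per MB are all assumptions made for illustration. The model also pretends that every modified page gets written out, while in the experiment above part of the memory stayed in the Modified list.

```python
from collections import OrderedDict


class PageLists:
    """Toy model of the Modified and Standby lists (no memory compression).

    One entry stands for 1 MB; capacity and eviction order are assumptions.
    """

    def __init__(self, standby_capacity):
        self.standby_capacity = standby_capacity
        self.modified = set()         # trimmed, not yet written to the page file
        self.standby = OrderedDict()  # cached data, oldest entry first
        self.in_page_file = set()     # pages that have a copy on disk

    def trim(self, pages):
        """Working set trim: dirty private pages move to the Modified list."""
        self.modified.update(pages)

    def write_modified(self):
        """Write modified pages to the page file and keep them cached."""
        for page in sorted(self.modified):
            self.in_page_file.add(page)
            self._cache(page)
        self.modified.clear()

    def buffered_read(self, file_name, size_mb):
        """Buffered file reads fill the Standby list with file data."""
        for offset in range(size_mb):
            self._cache((file_name, offset))

    def touch(self, page):
        """Fault a trimmed page back in and report the kind of fault."""
        if page in self.modified:
            self.modified.discard(page)
            return "soft"
        if page in self.standby:
            del self.standby[page]
            return "soft"
        if page not in self.in_page_file:
            raise KeyError(page)
        return "hard"  # has to be read from the page file

    def _cache(self, key):
        self.standby[key] = True
        self.standby.move_to_end(key)
        while len(self.standby) > self.standby_capacity:
            self.standby.popitem(last=False)  # evict the oldest entry


def refault(buffered_read_mb, process_mb=2000, standby_capacity=8000):
    """Trim, page out, do unrelated file IO, then touch everything again."""
    lists = PageLists(standby_capacity)
    pages = [("CppEater", i) for i in range(process_mb)]
    lists.trim(pages)
    lists.write_modified()
    lists.buffered_read("installer", buffered_read_mb)
    kinds = [lists.touch(page) for page in pages]
    return kinds.count("soft"), kinds.count("hard")


print(refault(0))      # no file IO in between: (2000, 0)
print(refault(30000))  # about 30 GB of buffered reads in between: (0, 2000)
```

In this model every access is a soft fault as long as nothing else touches the file system cache, and every access turns into a hard fault once enough unrelated file data has passed through the Standby list. Intermediate amounts of file IO give a mix of both.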

The Explanation For Bad Performance

Now we have all the missing pieces together. The initial assumption that flushing the working set causes the OS to write the process memory into the page file is correct, but it is only half of the story. When the page file data is written, the memory from the modified list becomes part of the file system cache. When the pages are accessed again later, the current state of the file system cache decides whether we see soft or hard faults, with dramatic effects on the observed performance.

The badly performing software version caused more buffered reads than before. Those reads pushed the cached page file data out of the file system cache. The page faults that were still happening were no longer cheap soft page faults but hard page faults. That explains the dramatic effects on interactive performance.

The added buffered IO reads exposed the misconception that flushing the working set is a cheap operation. Flushing the working set and soft faulting it back in is only cheap if the machine is not under memory pressure. If memory becomes tight or the file system cache gets flushed, you will see the real cost of hard page faults. If you still need fast access to that memory, the best thing to do is to not flush it. Otherwise you might see random hard page faults even if the machine still has plenty of free memory, due to completely unrelated file system activity!

This is true for Windows Server 2008 and 2012. With Windows Server 2016, which will also employ memory compression just like Windows 10, things change a bit.

Windows 10 Paging

With memory compression we need to change our picture again. The modified list is not flushed out to disk but compressed and then added to the working set of the Memory Compression process, which now acts as a cache. Since the page file contents are no longer shared with the standby list, we will not see this behavior on Windows 10 or Server 2016 machines with memory compression enabled.
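The difference between the two configurations can be written down as a very small Python sketch. It is only an illustration of the picture described here: the function name `refault_kinds`, the MB granularity, the oldest-first eviction of the standby list and the assumption that the compression store always stays resident are simplifications, not documented behavior.

```python
def refault_kinds(pages_mb, standby_capacity_mb, buffered_read_mb, memory_compression):
    """Classify how trimmed memory comes back after unrelated buffered reads.

    Toy model: all sizes are in MB, the standby list evicts the oldest data
    first, and the compression store is assumed to stay in memory.
    """
    if memory_compression:
        # Trimmed pages are compressed into the working set of the Memory
        # Compression process, so standby list churn does not reach them.
        return {"from_compression_store": pages_mb, "hard": 0}
    # Without compression the page file copies share the standby list with
    # file data and, being older, are the first to be evicted.
    overflow = max(0, pages_mb + buffered_read_mb - standby_capacity_mb)
    evicted = min(pages_mb, overflow)
    return {"soft": pages_mb - evicted, "hard": evicted}


for compression in (False, True):
    print(compression, refault_kinds(2000, 8000, 30000, compression))
```

With these made-up numbers the uncompressed case ends up with 2000 MB of hard faults, while the compressed case serves everything from the compression store regardless of how much file data was read in between.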

When we execute the same use case under Windows Server 2016 with memory compression enabled and flush the file system cache, we see that the memory from the MemCompression process stays cached. It is semi hard faulted back into the CppEater process in 3 s, which is much faster than the previous 10 s when the page faults were hitting the hard disk. It is therefore a good idea for most workloads to keep memory compression enabled. It not only compresses the memory, but the cached page file contents are also no longer subject to standby list pollution, which should make system performance much more predictable than it was before.

Conclusions

Windows has many hidden caches which make slow operations (like hard page faults) fast again. But at the worst point in time these caches are no longer there, and you will experience the bad uncached performance. It is interesting that RamMap does not show the page file as the biggest standby list consumer on machines without memory compression enabled. To prevent such hard-to-find errors in the first place, you should measure what things cost with detailed profiling (for me that is ETW) and then act on the measured data. If someone has a great idea to make things faster, you should always ask for the detailed profiling data. Pure timing measurements can be misleading. If a use case has become 30% faster but uses 3x the memory and 2x the CPU, is this optimization still a great idea?

> the file handles in between which will flush the file system cache

Windows sometimes seems to flush on file close and sometimes not. Did you ever find out the conditions under which it does this?

It certainly does not always flush on close because otherwise it would be rare to observe modified pages in e.g. RAMMap.exe. Most apps close their files immediately after writing into them.

The exact logic is not known to me, but I remember Mehmet (https://channel9.msdn.com/Blogs/Seth-Juarez/Memory-Compression-in-Windows-10-RTM) talking about things like the modified page creation rate, which influences the point in time when the modified pages are written to disk. That could explain why you sometimes see different behavior although you are doing the same thing.

[…] written to the page file. On Server 2016 you could enable memory compression also if you wish (see https://aloiskraus.wordpress.com/2016/10/09/how-buffered-io-can-ruin-performance/). On Windows 10 machines on the other hand the memory is compressed by default and kept in memory […]

How about just disabling the Superfetch service?

Could you elaborate on how that would help in the described scenario? When you call EmptyWorkingSet, only your modified bytes are put into the page file. The read-only pages from DLLs will remain in the file system cache until they are flushed out by other data. Superfetch would start paging in DLLs later, when it detects that important DLLs were flushed out. This usually happens only after a fresh boot and rarely later, depending on undocumented heuristics.

As I commented in another post of yours, what is the situation when you have copious RAM? Is there any advantage to memory compression when you never run out of free RAM, let alone available RAM?

Hi Sridhar, memory compression is disabled by default on Windows Server. Servers have copious RAM. The rationale behind this is that if you have large applications which would cause page outs, you are normally running on a many-core server, but memory compression is single threaded, so it would not scale when many applications page out memory. If memory compression would become multi threaded then it could itself become a bottleneck. The compression algorithm used has (depending on the CPU) a compression rate of about 200-300 MB/s and a decompression rate of up to 500 MB/s. That is on par with today's SSDs. If you have storage attached via PCI Express SSDs which can transfer GB/s, then single threaded compression will simply lose out. Memory compression is a good solution only for machines with spinning disks; otherwise it can have adverse effects on e.g. CPU cache locality when a large CPU spike ruins your L3 cache hit rate. Things stay complex in today's world.
