See the updates below.

When I was looking into performance issues with ETW I found long deserialization times in conjunction with BinaryFormatter. A deeper look revealed that the issue is easy to reproduce once the object graph gets bigger (>100K objects). BinaryFormatter has been around for over 10 years, and apart from it being slow in general I had never heard of significant performance issues. I was therefore quite surprised that such a blatant problem still exists in .NET. But since .NET is open source on GitHub it is easy to complain: https://github.com/dotnet/corefx/issues/16991. This caught the interest of Stephen Toub himself, and they added a small but impactful change to BinaryFormatter.

The problem during deserialization with BinaryFormatter is that it uses the MaxArraySize value to bucket an ObjectHolder array like a hash table.

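```csharp
// A fixed number of buckets; colliding entries are chained via m_next
ObjectHolder[] objects = new ObjectHolder[MaxArraySize]; // MaxArraySize = 0x1000 = 4096

class ObjectHolder
{
    internal ObjectHolder m_next; // next holder in the same bucket
}
```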

If we need to store one million objects in one ObjectHolder array with a length of 4096, we end up with 4096 ObjectHolder linked lists (chained via the ObjectHolder.m_next field) with an average depth of 244 nodes. Whenever a specific object is looked up during deserialization, a large number of nodes in these long chains must be traversed. By increasing the value to 1 048 576 (= 0x100000) the array can be used as a real hash table. Only a few collisions then make it necessary to walk to the next item in the linked list.
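
To make the bucket depth tangible, here is a small C# model of the bucketing. It is a sketch, not the BinaryFormatter source. It assumes that object ids are handed out sequentially starting at 1 and that the bucket is picked with `id & (bucketCount - 1)`. The helper `LongestChain` is hypothetical.

```csharp
using System;
using System.Linq;

// Hypothetical model of the bucketing described above: object ids 1..objectCount
// are mapped to a bucket with id & (bucketCount - 1) (bucketCount must be a power
// of two), and objects sharing a bucket form one linked list via m_next.
int LongestChain(int objectCount, int bucketCount)
{
    var chainLength = new int[bucketCount];
    for (int id = 1; id <= objectCount; id++)
        chainLength[id & (bucketCount - 1)]++;  // one more holder in this bucket's list
    return chainLength.Max();
}

Console.WriteLine(LongestChain(1_000_000, 0x1000));   // old MaxArraySize: 245
Console.WriteLine(LongestChain(1_000_000, 0x100000)); // new MaxArraySize: 1
```

With 4096 buckets the longest chain has 245 nodes, in line with the average of ~244. With 0x100000 buckets every one of the million ids gets a bucket of its own.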

The next .NET Core version will have a band-aid for this, so the issue will only appear with even bigger object graphs. With the current BinaryFormatter you get a nice parabola: serializing one million objects takes only 2s, but deserializing them takes on the order of 80s!
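
The parabola follows from the chain walks. Assume that every newly registered object has to walk the holders already chained in its bucket. The total work then grows with N² divided by the bucket count, so doubling the object count roughly quadruples the time. Below is a back-of-the-envelope sketch under that assumption. The even spread of ids and the `TotalChainWalk` helper are hypothetical.

```csharp
using System;

// Hypothetical cost model: ids are spread evenly over bucketCount linked lists, and the
// k-th holder added to a bucket walks the k - 1 holders that are already chained there.
long TotalChainWalk(long objectCount, long bucketCount)
{
    long perBucket = objectCount / bucketCount;
    return bucketCount * (perBucket * (perBucket - 1) / 2);
}

// Doubling the object count roughly quadruples the work with the old 0x1000 buckets.
foreach (long n in new long[] { 1L << 18, 1L << 19, 1L << 20, 1L << 21 })
    Console.WriteLine($"{n,9} objects: {TotalChainWalk(n, 0x1000),13:N0} node visits");
```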

Update 5/2018

.NET 4.7.2 now also contains the fix from .NET Core 2.0. You need to enable it with an AppContext switch in your App.config:

```xml
<?xml version="1.0" encoding="utf-8"?>
<configuration>
  <runtime>
    <!-- Use this switch to make BinaryFormatter fast with large object graphs starting with .NET 4.7.2 -->
    <AppContextSwitchOverrides value="Switch.System.Runtime.Serialization.UseNewMaxArraySize=true" />
  </runtime>
</configuration>
```
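
You can check at runtime whether the switch from App.config actually reached your process by querying it with `AppContext.TryGetSwitch`. As far as I know, .NET Framework 4.6 and later expose `AppContextSwitchOverrides` entries this way. This is a minimal sketch, and `IsSwitchEnabled` is a hypothetical helper name. The check only tells you that the switch is set, not that the BinaryFormatter you run honors it.

```csharp
using System;

const string UseNewMaxArraySize = "Switch.System.Runtime.Serialization.UseNewMaxArraySize";

// TryGetSwitch returns false when the switch was never set (e.g. the App.config entry
// is missing); otherwise 'enabled' holds the configured value.
bool IsSwitchEnabled(string name) => AppContext.TryGetSwitch(name, out bool enabled) && enabled;

Console.WriteLine($"{UseNewMaxArraySize} = {IsSwitchEnabled(UseNewMaxArraySize)}");
```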

After the fix in .NET Core you can serialize object graphs up to ca. 13 million objects before you hit the next limitation of BinaryFormatter:

```
Unhandled Exception: System.Runtime.Serialization.SerializationException: Exception of type 'System.Runtime.Serialization.SerializationException' was thrown.
   at System.Runtime.Serialization.ObjectIDGenerator.Rehash() in D:\Source\vc17\NetCoreApp\ConsoleApp1\ConsoleApp2\Serialization\ObjectIDGenerator.cs:line 140
```

This time BinaryFormatter runs out of prime numbers for another hash table. If we try to serialize object graphs with more than 2*6 584 983 objects we are out of luck again, because the ObjectIDGenerator never expected anyone to serialize more than 2*6 584 983 objects.

```csharp
public class ObjectIDGenerator
{
    // Table of prime numbers to use as hash table sizes. Each entry is the
    // smallest prime number larger than twice the previous entry.
    private static readonly int[] s_sizes = { 5, 11, 29, 47, 97, 197, 397, 797, 1597, 3203, 6421, 12853, 25717, 51437, 102877, 205759, 411527, 823117, 1646237, 3292489, 6584983 };
    // ...
}
```
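
The hard limit can be derived from the prime table. The sketch below assumes, as the 2*6 584 983 figure implies, that the generator rehashes once it holds more than twice the current table size. `RequiredTableSize` is a hypothetical helper and not part of ObjectIDGenerator.

```csharp
using System;

// Prime table copied from ObjectIDGenerator above.
int[] sizes = { 5, 11, 29, 47, 97, 197, 397, 797, 1597, 3203, 6421, 12853, 25717,
                51437, 102877, 205759, 411527, 823117, 1646237, 3292489, 6584983 };

// Hypothetical helper: smallest table size that can hold objectCount ids, assuming a
// rehash happens once the count exceeds 2 * size. Returns -1 when the table is exhausted,
// which is the situation in which Rehash() throws the SerializationException shown above.
int RequiredTableSize(long objectCount)
{
    foreach (int size in sizes)
        if (objectCount <= 2L * size)
            return size;
    return -1;
}

Console.WriteLine(RequiredTableSize(13_000_000)); // 6584983
Console.WriteLine(RequiredTableSize(14_000_000)); // -1: out of primes
```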

Update 5/2018: Fixed with .NET Core 2.1 (https://github.com/dotnet/corefx/issues/24902#issuecomment-377331210). The fix will hopefully arrive with .NET 4.7.3.

But hey, that is ok. Apparently nobody has ever done that successfully until now. There are not many complaints about this one (http://stackoverflow.com/questions/569127/serializationexception-when-serializing-lots-of-objects-in-net), so I guess everyone has simply moved on and switched to a faster serializer. I also wonder whether the people in that thread ever profiled their application to find out why deserialization took ca. 45 minutes for a ~300MB file.

What Types of Serializers Exist?

When you want to switch away from BinaryFormatter, you first need to check how your data is structured. If your data can contain cyclic references you have fewer options, because most serializers are tree serializers by default and cannot cope with object graphs. Another downside might be that your target serializer cannot serialize private fields, which BinaryFormatter is capable of. You also need to be able to change the data objects and base classes to add the attributes and ctors that the other serializers require. Only BinaryFormatter serializes pretty much everything, as long as the class has [Serializable] on it. And last but not least, the serializer should support streams to read and write data. JSON strings are a nice and efficient data storage format for small messages. But if the serializer does not support streams, reading a 200MB JSON file into a single string creates a lot of work for the garbage collector. And you can start deserializing the data only when the complete file has been read. FastJSON is the only serializer here which does not support streams, which makes it a poor choice for larger messages.

Below is a collection of widely used serializers and their most important feature properties summed up in a table:

| Serializer | Default Serializer Type | Data Format | Private Members | Stream Support | .NET Core Support | Default Ctor Needed To Deserialize | Explicit Object Graph Support |
|---|---|---|---|---|---|---|---|
| BinaryFormatter | Graph | Binary | Yes | Yes | Yes (NetStandard 1.6) | No | Enabled by default |
| XmlSerializer | Tree | Xml | No | Yes | Yes | Yes public | No |
| DataContractSerializer | Tree | Xml | Yes | Yes | Yes | No | `new DataContractSerializer(typeof(TypeToSerialize), new DataContractSerializerSettings { PreserveObjectReferences = true })` |
| Jil | Tree | JSON | No | Yes | Yes | Yes public | No |
| FastJSON | Graph | JSON | No | No | No | Yes public | Enabled by default |
| Protobuf-Net | Tree | Binary Google Protocol Buffer | Yes | Yes | Yes | Yes* | Declarative at ProtoMember level |
| JSON.NET | Tree | JSON | Yes | Yes | Yes | No | |
| NFX.SlimSerializer | Graph | Binary | Yes | Yes | No | No | |
| Wire** | Tree | Binary | Yes | Yes | Yes | No | `new Serializer(new SerializerOptions(preserveObjectReferences: true))`. Crashes with a StackOverflowException if cycles are present and preserveObjectReferences is not set! |
| MsgPack.Cli*** | Graph | Binary | Yes | Yes | Yes | | |
| MessagePackSharp*** | Graph | Binary | Yes | Yes | Yes | | |
| GroBuf*** | Tree | Binary | Yes | Yes | Yes | | |
| FlatBuffer*** | Tree | Binary | Yes | Yes | Yes | | |
| ZeroFormatter*** | Graph | Binary | Yes | Yes | Yes | | |
| Bois*** | Graph | Binary | Yes | Yes | Yes | | |
| ServiceStack.Text*** | Graph | JSON | Yes | Yes | Yes | | |

Protobuf-Net object graph support at ProtoMember level:

```csharp
// * no default ctor needed if SkipConstructor=true
// Thanks Marc for that hint
[ProtoContract(SkipConstructor = true)]
class DataForProtobuf
{
    [ProtoMember(1, AsReference = true)]
    DataForProtobuf Parent;
}
```

** Update 1: Added Wire and MsgPack on request.

*** Update 5/2018: Added MessagePackSharp, MsgPack.Cli, GroBuf, FlatBuffer, ZeroFormatter, Bois and ServiceStack. Removed MsgPack, which has not been maintained since 2011 and was slow anyway.

Due to the lazy nature of ZeroFormatter and FlatBuffer the deserialized object properties are touched once to get a fair comparison.

With the table above you can better judge which serializers could work for your scenario. Pretty much any change of serializer will result in breaking changes to your serialized data format. The cost of a switch therefore needs to be justified. You either need a way to migrate the data, or you stay polymorphic: you keep your data objects and add support for the other serializer, which lets you switch back to the old one for data migration. If you switch e.g. from XmlSerializer to DataContractSerializer, both write Xml as output. However, DataContractSerializer never writes serialized data into Xml attributes, which XmlSerializer does pretty often. That makes it impossible to switch from one to the other without breaking the data exchange format.

Besides the data format, readability and feature set, the only real reason to switch to another serializer is performance. There are many performance guides out there which measure one aspect of serializers. However, all of the ones I have found so far ignore important details during the measuring process. The following numbers are therefore the "real" ones, measured on my machine with representative samples and many runs to average out random noise from the OS. I only found one pretty good serialization testing framework after I had finished my analysis: http://aumcode.github.io/serbench/. It was written by the author of Pile (https://www.infoq.com/articles/Big-Memory-Part-2). Their claim is that they use the fastest homegrown serializers to stuff objects into big byte arrays (the pile). That way they can support heaps of many GB (10s of GB inside one process) while keeping GC latencies very small. The objects are only deserialized on access and live only a short time, which makes them Gen0 garbage quite fast. The web site contains many graphs (http://aumcode.github.io/serbench/Specimens_Typical_Person/web/overview-charts.htm) which do not really make it clear how to choose the best serializer. Personally I find the way the data is presented confusing. If you want to plug in your own serializer, my own tester is simpler to play with than serbench, because you can directly edit the code of one self-contained executable. My tester (https://github.com/Alois-xx/SerializerTests) also warns you if you are measuring a debug build or using a baseline that was not NGenned. In that case first-call measurements do not show the actual serializer overhead but the amount of JITed code executed. Startup performance testing is complex, and I believe my tester does the best job there.
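
How can a tester detect a debug build? One possible approach, not necessarily the one SerializerTests uses, is to look at the `DebuggableAttribute` that the compiler puts on the assembly. `IsDebugBuild` is a hypothetical helper.

```csharp
using System;
using System.Diagnostics;
using System.Reflection;

// True when the compiler marked the assembly as "do not optimize",
// which is what a typical Debug build does.
bool IsDebugBuild(Assembly assembly) =>
    assembly.GetCustomAttribute<DebuggableAttribute>()?.IsJITOptimizerDisabled ?? false;

Assembly benchmark = Assembly.GetExecutingAssembly();
if (IsDebugBuild(benchmark))
    Console.WriteLine($"Warning: {benchmark.GetName().Name} is a debug build, the numbers will be misleading.");
```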

Tested Versions

| Serializer | File Version |
|---|---|
| BinaryFormatter | 4.7.3062.0 built by: NET472REL1 |
| DataContract | 4.7.3062.0 built by: NET472REL1 |
| XmlSerializer | 4.7.3062.0 built by: NET472REL1 |
| FastJson | 2.1.28.0 |
| Jil | 2.15.4 |
| JsonNet | 11.0.2.21924 |
| Protobuf_net | 2.3.7.0 |
| SlimSerializer | 3.0.0.1 |
| Wire | 1.0.0.0 |
| MsgPack_Cli | 0.9.144 |
| FlatBuffer | 1.0.0.0 |
| GroBuf | 1.3.0.0 |
| ZeroFormatter | 1.6.4.0 |
| MessagePackSharp | 1.7.3.4 |
| Bois | 2.2.1.0 |
| ServiceStack | 5.0.2.0 |

Serializer Performance

Here are the results of my own testing with various serializers. The numbers below were created with my own serialization testing framework, https://github.com/Alois-xx/SerializerTests. The left axis of the graph shows the average time of the de/serialize operation per serializer. The tests were performed with one up to 1 million Book objects, and the average throughput was used. Each serializer has a slightly different output format, so the amount of data to process varies a lot. For better readability, the serialized data size is shown on the right axis, running from top to bottom. The absolute performance is therefore a function of the efficiency of the data format and the per-object overhead to read/write the data. First-time init effects were factored out, since this is an extra metric which we will discuss shortly. The numbers below are real throughput numbers for larger messages, although the ranking of the serializers stays practically the same whether 300K or 1000 objects are de/serialized. For smaller object counts GC effects dominate the picture, which makes it harder to get reliable numbers. The results then depend on the GC state left behind by the previous test, which is not what I wanted to show here. I sorted by deserialization time, because you are usually reading data from some storage, which makes it the more important metric most of the time.

All tests were performed on my Intel I7-4770K 3.5GHz on Windows 10 with .NET 4.7.2 x64 and .NET Core 2.0.6. The shown numbers are the sum of .NET Core and .NET Framework.
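
The separation between first-call costs and steady-state throughput can be sketched with a small timing helper. This is not the code of SerializerTests. `Measure` is a hypothetical helper that times the first call on its own, averages the remaining runs and reports the number of gen0 GCs, which becomes relevant for deserialization.

```csharp
using System;
using System.Diagnostics;

// Hypothetical helper: times the first call separately (JIT, type loading, caches),
// then averages the remaining runs and counts the gen0 GCs that happened meanwhile.
(double FirstCallMs, double AverageMs, int Gen0Collections) Measure(Action action, int runs)
{
    var sw = Stopwatch.StartNew();
    action();                                     // first call includes one-time init costs
    double firstCallMs = sw.Elapsed.TotalMilliseconds;

    int gen0Before = GC.CollectionCount(0);
    sw.Restart();
    for (int i = 0; i < runs; i++) action();
    double averageMs = sw.Elapsed.TotalMilliseconds / runs;
    return (firstCallMs, averageMs, GC.CollectionCount(0) - gen0Before);
}

var result = Measure(() => GC.KeepAlive(new byte[1024]), runs: 100);
Console.WriteLine($"first: {result.FirstCallMs:F3} ms, average: {result.AverageMs:F3} ms, gen0 GCs: {result.Gen0Collections}");
```

In a real benchmark the action would serialize or deserialize the test data into a stream, and several such measurements would be averaged.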

Interestingly, the not widely known Jil is by far the fastest JSON serializer, and it comes pretty close to protocol buffers, although its serialized data is 90% bigger. In general serialization is always faster than deserialization. If this is not the case, the serializer is either deeply flawed or you have measured things wrong. From a high-level perspective these operations must always happen:

Serialization

- Existing objects are traversed

- Data is extracted and converted into the serialization format

- The conversion process can involve some intermediary allocations

- Data is written into a stream which is usually written to disk or the network

Deserialization

- Data is read from the stream

- Data is split into sub tokens (involves memory allocations)

- New objects are allocated and filled with tokenized data from input stream

If you serialize a 1 GB in-memory object graph into 200 MB on disk, you are appending data to a stream which is flushed out to disk. But if you read 200MB from disk to deserialize it, you need to allocate 1GB of memory just to hold the objects. While deserializing you are effectively GC bound. Most of the time your thread is suspended by the GC, which checks whether some of your newly allocated objects are no longer needed. It is therefore not unusual to see high GC blocking times during deserialization, but much less GC activity while serializing data to disk. That is also why there is little point in making deserialization multithreaded. You would allocate large amounts of memory from multiple threads, only to discover that the GC now blocks all of your threads in much more frequent GCs. But back to the serializer performance.

Size Does Matter

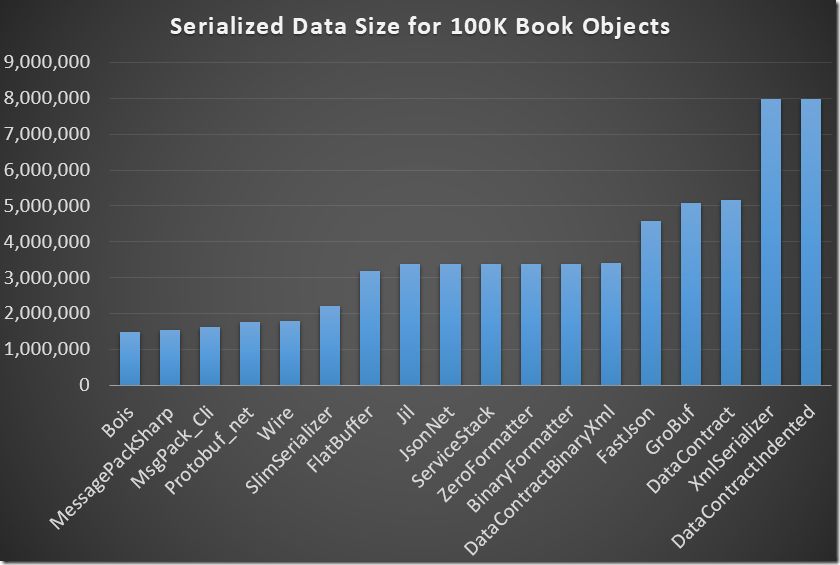

To fully understand the performance of a serializer, one must also take into account the size of the serialized data. The graph shows the size of the serialized file for 100K Book objects:

Graph updated 5/2018

The object definition was one Bookshelf to which N Books were added.

```csharp
using System;
using System.Collections.Generic;
using System.Runtime.Serialization;
using ProtoBuf;

[Serializable, DataContract, ProtoContract]
public class BookShelf
{
    [DataMember, ProtoMember(1)]
    public List<Book> Books { get; set; }

    [DataMember, ProtoMember(2)]
    private string Secret;

    public BookShelf(string secret)
    {
        Secret = secret;
    }

    public BookShelf() { }
}

[Serializable, DataContract, ProtoContract]
public class Book
{
    [DataMember, ProtoMember(1)]
    public string Title;

    [DataMember, ProtoMember(2)]
    public int Id;
}
```

Jil and JSON.NET are nearly equal, but FastJSON is ca. 35% larger than the other two. Let's check out the serialized data for a Bookshelf with one Book inside it:

Json.NET

```json
{"Secret":"private member value","Books":[{"Title":"Book 1","Id":1}]}
```

Jil

```json
{"Books":[{"Id":1,"Title":"Book 1"}]}
```

FastJSON

```json
{"$types":{"SerializerTests.TypesToSerialize.BookShelf, SerializerTests, Version=1.0.0.0, Culture=neutral, PublicKeyToken=null":"1","SerializerTests.TypesToSerialize.Book, SerializerTests, Version=1.0.0.0, Culture=neutral, PublicKeyToken=null":"2"},"$type":"1","Books":[{"$type":"2","Title":"Book 1","Id":1}]}
```
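
FastJSON repeats a `"$type":"2"` entry for every Book object, and that alone explains most of the size difference. Here is a rough check for 100K books. It assumes titles of the form "Book {Id}" and ignores the type table header and the Secret field. Both helpers are hypothetical and only rebuild the per-book fragments shown above.

```csharp
using System;
using System.Text;

// Per-book JSON fragments as emitted in the samples above; assumes Title is "Book {Id}".
string JilBook(int id) => $"{{\"Id\":{id},\"Title\":\"Book {id}\"}}";
string FastJsonBook(int id) => $"{{\"$type\":\"2\",\"Title\":\"Book {id}\",\"Id\":{id}}}";

// Sum of the UTF-8 bytes of all books plus one comma separator each (headers ignored).
long PayloadBytes(Func<int, string> book, int count)
{
    long total = 0;
    for (int id = 1; id <= count; id++)
        total += Encoding.UTF8.GetByteCount(book(id)) + 1;
    return total;
}

double ratio = (double)PayloadBytes(FastJsonBook, 100_000) / PayloadBytes(JilBook, 100_000);
Console.WriteLine($"FastJSON / Jil payload size: {ratio:F2}");
```

For ids 1 to 100 000 the ratio comes out at about 1.36, which fits the ca. 35% difference in the graph.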

Comparing the output shows that Jil omits private members, which is why the Secret field value of BookShelf is missing. Apart from that and the member order, Jil and Json.NET produce the same output. FastJSON emits an additional $type node for every Book object, which explains the bigger JSON output. It is educational to look at the other serialized data as well:

XmlSerializer

```xml
<?xml version="1.0"?>
<BookShelf xmlns:xsd="http://www.w3.org/2001/XMLSchema" xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance">
  <Books>
    <Book>
      <Title>Book 1</Title>
      <Id>1</Id>
    </Book>
  </Books>
</BookShelf>
```

Data Contract Indented

```xml
<?xml version="1.0" encoding="utf-8"?>
<BookShelf xmlns:i="http://www.w3.org/2001/XMLSchema-instance" xmlns="http://schemas.datacontract.org/2004/07/SerializerTests.TypesToSerialize">
  <Books>
    <Book>
      <Id>1</Id>
      <Title>Book 1</Title>
    </Book>
  </Books>
  <Secret>private member value</Secret>
</BookShelf>
```

DataContract

```xml
<BookShelf xmlns="http://schemas.datacontract.org/2004/07/SerializerTests.TypesToSerialize" xmlns:i="http://www.w3.org/2001/XMLSchema-instance"><Books><Book><Id>1</Id><Title>Book 1</Title></Book></Books><Secret>private member value</Secret></BookShelf>
```

DataContract XmlBinaryDictionaryWriter

```
@ BookShelfHhttp://schemas.datacontract.org/2004/07/SerializerTests.TypesToSerialize i)http://www.w3.org/2001/XMLSchema-instance@Books@Book@Idƒ@Title™Book 1@Secret™private member value
```

BinaryFormatter

```
ÿÿÿÿ FSerializerTests, Version=1.0.0.0, Culture=neutral, PublicKeyToken=null *SerializerTests.TypesToSerialize.BookShelf <Books>k__BackingFieldSecret’System.Collections.Generic.List`1[[SerializerTests.TypesToSerialize.Book, SerializerTests, Version=1.0.0.0, Culture=neutral, PublicKeyToken=null]] private member value ’System.Collections.Generic.List`1[[SerializerTests.TypesToSerialize.Book, SerializerTests, Version=1.0.0.0, Culture=neutral, PublicKeyToken=null]] _items_size_version ‘SerializerTests.TypesToSerialize.Book[] %SerializerTests.TypesToSerialize.Book
%SerializerTests.TypesToSerialize.Book TitleId Book 1
```

Protobuf

```
Book 1private member value
```

SlimSerializer

```
Êþâæ¡
ÿäSerializerTests.TypesToSerialize.BookShelf, SerializerTests, Version=1.0.0.0, Culture=neutral, PublicKeyToken=null
ÿ¾System.Collections.Generic.List`1[[SerializerTests.TypesToSerialize.Book, SerializerTests, Version=1.0.0.0, Culture=neutral, PublicKeyToken=null]], mscorlib, Version=4.0.0.0, Culture=neutral, PublicKeyToken=b77a5c561934e089ÿ(private member value ÿæSerializerTests.TypesToSerialize.Book[], SerializerTests, Version=1.0.0.0, Culture=neutral, PublicKeyToken=null|0~3 ÿ $6|0~3ÿÚSerializerTests.TypesToSerialize.Book, SerializerTests, Version=1.0.0.0, Culture=neutral, PublicKeyToken=null ÿ Book 1
```

MsgPack

```
‚¥Books„¦_items”‚¥Title¦Book 1¢IdÀÀÀ¥_size¨_version©_syncRootÀ¦Secret´private member value
```

Wire

```
ÿ; SerializerTests.TypesToSerialize.BookShelf, SerializerTestsÿl System.Collections.Generic.List`1[[SerializerTests.TypesToSerialize.Book, SerializerTests]], mscorlib,%core% ÿ6 SerializerTests.TypesToSerialize.Book, SerializerTests Book 1private member value
```

From that we see that Protocol Buffers and Wire produce the smallest serialized data. For our serialized Bookshelf Wire looks less efficient, but only because of its big header; the serialized data itself is as small as that of protocol buffers. This also explains the performance differences of DataContractSerializer depending on the output format (see Data Contract Indented, DataContract and DataContract XmlBinaryDictionaryWriter). Whether you become a factor of 2 faster or not also depends on the serialized data size. Small is beautiful, and protocol buffers deliver that with impressive numbers. SlimSerializer is pretty close to protocol buffers and can serialize pretty much anything without extra attributes, although it seems unable to serialize delegates. You should check whether this one could work for you. It is not so widely used and lacks versioning support, so do thorough testing before switching to it. Performance is one important aspect, but correctness always beats performance.
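
Part of the reason protocol buffers are so compact is the wire format. Fields are identified by small numbers instead of names, and integers are stored as base-128 varints. Below is a hand-written sketch of that encoding for one Book. It is not the protobuf-net API. The field numbers are taken from the ProtoMember attributes above, and the field order is an assumption.

```csharp
using System;
using System.Collections.Generic;
using System.Text;

// Base-128 varint as used by Protocol Buffers: 7 bits per byte, high bit = "more bytes follow".
void WriteVarint(List<byte> output, ulong value)
{
    while (value >= 0x80)
    {
        output.Add((byte)(value | 0x80));
        value >>= 7;
    }
    output.Add((byte)value);
}

// Field key = (fieldNumber << 3) | wireType; wire type 0 = varint, 2 = length-delimited.
byte[] EncodeBook(string title, int id)
{
    var output = new List<byte>();
    byte[] titleBytes = Encoding.UTF8.GetBytes(title);
    WriteVarint(output, (1 << 3) | 2);             // field 1 (Title), length-delimited
    WriteVarint(output, (ulong)titleBytes.Length);
    output.AddRange(titleBytes);
    WriteVarint(output, (2 << 3) | 0);             // field 2 (Id), varint
    WriteVarint(output, (ulong)id);                // assumes a non-negative id
    return output.ToArray();
}

Console.WriteLine(BitConverter.ToString(EncodeBook("Book 1", 1)));
// 0A-06-42-6F-6F-6B-20-31-10-01
```

Only 4 of the 10 bytes are framing; the rest is the raw title text. That is why the Protobuf dump above consists of little more than the strings themselves.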

Serializer Init Times

So which serializer is the fastest? As usual it depends on your requirements. Are you trying to load a configuration file faster during application startup? In that case first-time init effects may matter more than the actual throughput of the serializer. Let's try things out by serializing only one object and then quitting.

Suddenly one of the fastest serializers is by a large margin the slowest one. To fully understand serialization performance you need to take into account both the serializer startup costs and the achieved throughput. If application startup is your main concern and you load only a few settings from a file, think twice about whether Jil is really the right serializer for you. But if you change the environment a bit, you get a completely different chart:

This time Jil has become 240ms faster with no changes except that the test executable was NGenned with

```
%windir%\Microsoft.NET\Framework64\v4.0.30319\ngen.exe install SerializerTests.exe
```

That precompiled the executable and all referenced assemblies, including Jil and Sigil, which seem to run a lot of code during serializer initialization. On .NET Core you will find that the startup costs are much higher, because nearly no dll is precompiled with crossgen.exe, the .NET Core counterpart of NGen. Serializer startup costs are therefore dominated by JIT costs. You can minimize them by precompiling your assemblies, which is pretty important if you want not only great throughput but also good startup times. If you deploy an application that is not precompiled, you need to be aware of the greatly different startup costs. Simply picking the serializer with the biggest throughput may not be the best idea.

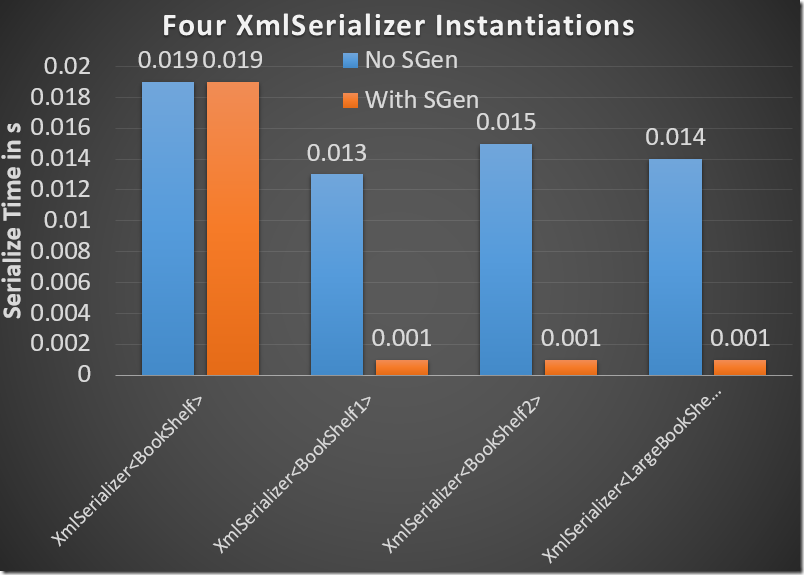

XmlSerializer Startup Time

In the regular .NET Framework there is a special catch with XmlSerializer. Instantiating an XmlSerializer costs ca. 70ms for the first type, but later instantiations cost only ca. 14ms. Why is the first invocation of XmlSerializer so costly? As it turns out, XmlSerializer creates a serialization assembly on the fly if it does not find one that was pregenerated with the sgen tool (part of the .NET Framework SDK). To load it, XmlSerializer tries an `Assembly.Load("YourAssembly.XmlSerializers, PublicKeyToken=xxxx, Version=…..")`, which fails with a FileNotFoundException if no pregenerated assembly exists. This assembly load attempt triggers a GAC lookup, which calls a method CheckMSIInstallAvailable:

```
System.Xml.ni.dll!System.Xml.Serialization.XmlSerializer..ctor(System.Type, System.String)$##6001E85
System.Xml.ni.dll!System.Xml.Serialization.TempAssembly.LoadGeneratedAssembly(System.Type, System.String, System.Xml.Serialization.XmlSerializerImplementation ByRef)$##60016C8
mscorlib.ni.dll!System.Reflection.Assembly.Load(System.Reflection.AssemblyName)$##60040EC
mscorlib.ni.dll!System.Reflection.RuntimeAssembly.InternalLoadAssemblyName(System.Reflection.AssemblyName, System.Security.Policy.Evidence, System.Reflection.RuntimeAssembly, System.Threading.StackCrawlMark ByRef, IntPtr, Boolean, Boolean, Boolean)$##600415D
clr.dll!AssemblyNative::Load
clr.dll!AssemblySpec::LoadAssembly
clr.dll!AssemblySpec::LoadDomainAssembly
clr.dll!AppDomain::BindAssemblySpec
clr.dll!AssemblySpec::FindAssemblyFile
clr.dll!AssemblySpec::LoadAssembly
clr.dll!FusionBind::RemoteLoad
clr.dll!CAssemblyName::BindToObject
clr.dll!CAssemblyDownload::KickOffDownload
clr.dll!CAssemblyDownload::DownloadComplete
clr.dll!CAssemblyDownload::DownloadNextCodebase
clr.dll!CAsmDownloadMgr::ProbeFailed
clr.dll!CheckMSIInstallAvailable
clr.dll!MSIProvideAssemblyPeek
clr.dll!MSIProvideAssemblyGlobalPeek
clr.dll!MSIProvideAssemblyPeekEnum
```

That code is part of neither .NET Core nor the SSCLI implementation. But with profiling it is not too hard to figure out what is really happening. ETW with Registry tracing shows that the first failed assembly load is the most costly one:

Internally CheckMSIInstallAvailable will read all registry values below HKEY_LOCAL_MACHINE\SOFTWARE\Classes\Installer\Assemblies\Global which by pure coincidence contains all registered assemblies from the GAC:

That minor implementation detail causes the observed 44ms delay: on first access, CheckMSIInstallAvailable caches the GAC contents from the registry, which takes 44ms. It is not correct to attribute the time of the failed assembly load attempt to the startup costs of XmlSerializer, because it is paid only once, for the first assembly load failure. So what is the correct XmlSerializer startup cost? If you create many different XmlSerializer instances during application startup, only the first one pays the high 70ms startup cost. All subsequent instantiations cost around 15ms per type, which is much cheaper than a single measurement would suggest. Pregenerating the code with sgen reduces the startup costs even further to ca. 1ms per type, but the first assembly load still costs around 19ms, even when it succeeds.
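
The expensive probe can be reproduced outside of XmlSerializer. This is a minimal sketch that uses only the simple assembly name; XmlSerializer itself also passes the version and public key token. `HasPregeneratedSerializers` is a hypothetical helper. On the .NET Framework the first failed probe should be noticeably slower than the second one, but measure on your own machine.

```csharp
using System;
using System.Diagnostics;
using System.IO;
using System.Reflection;

// Hypothetical probe modeled on the stack above: looks for "<Assembly>.XmlSerializers".
bool HasPregeneratedSerializers(Assembly typesAssembly)
{
    var name = new AssemblyName(typesAssembly.GetName().Name + ".XmlSerializers");
    try
    {
        Assembly.Load(name);
        return true;
    }
    catch (FileNotFoundException)
    {
        return false;   // no sgen output: XmlSerializer would generate code at runtime
    }
}

var sw = Stopwatch.StartNew();
bool found = HasPregeneratedSerializers(Assembly.GetExecutingAssembly());
Console.WriteLine($"first probe: {sw.Elapsed.TotalMilliseconds:F1} ms, found: {found}");
sw.Restart();
HasPregeneratedSerializers(Assembly.GetExecutingAssembly());
Console.WriteLine($"second probe: {sw.Elapsed.TotalMilliseconds:F1} ms");
```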

Before .NET 4.5, XmlSerializer also spawned csc.exe to compile the code on the fly, which is luckily no longer the case. In these "old" days XmlSerializer cost up to 200ms of startup time per type, so using sgen was absolutely necessary. But in today's fast-moving world old performance truths no longer hold. Startup costs are non-trivial to measure, so beware.

Multi Targeting .NET Executables and Precompiling .NET Core Assemblies

Precompiling binaries with .NET Core is not very straightforward yet, and I think things will change quite a bit in the future. But here is an approach that I have found to work. With VS 2017 and later you can create an executable which targets both desktop .NET and .NET Core inside one .csproj file. A typical .csproj which targets .NET 4.5.2 and .NET Core 1.1 lists both frameworks in `<TargetFrameworks>`, separated by a semicolon. For .NET Core it also specifies `<RuntimeIdentifiers>`; the platform-dependent .NET Core dlls for these runtime identifiers are downloaded when the NuGet packages are restored. This project is compiled twice: once as a regular .NET Desktop exe and once as a .NET Core dll, which can be executed from the bin folder with

```
dotnet xxxx.dll
```

```xml
<Project Sdk="Microsoft.NET.Sdk">
  <PropertyGroup>
    <OutputType>Exe</OutputType>
    <TargetFrameworks>netcoreapp1.1;net452</TargetFrameworks>
    <RuntimeIdentifiers>win7-x64</RuntimeIdentifiers>
  </PropertyGroup>
  <ItemGroup>
    <PackageReference Include="Jil" Version="2.15.0" />
    <PackageReference Include="protobuf-net" Version="2.1.0" />
    <PackageReference Include="Sigil" Version="[4.7.0.0]" />
    <PackageReference Include="System.Xml.XmlSerializer" Version="*" />
    <PackageReference Include="System.Runtime.Serialization.Xml" Version="*" />
  </ItemGroup>
</Project>
```

The binaries are put into a target framework dependent folder
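
When the same program runs on both runtimes, it helps to print which one is actually executing the benchmark. Here is a small sketch using `RuntimeInformation`. As far as I know, on net452 this type comes from the System.Runtime.InteropServices.RuntimeInformation NuGet package. That package is an extra reference not shown in the project file above.

```csharp
using System;
using System.Runtime.InteropServices;

// Describes the runtime that is executing this build, e.g. to label benchmark output.
string DescribeRuntime() =>
    $"{RuntimeInformation.FrameworkDescription} ({RuntimeInformation.ProcessArchitecture})";

Console.WriteLine($"Running on {DescribeRuntime()}");
```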

If you download .NET Core, it contains only one precompiled binary. To precompile everything you need to copy the dlls from

```
C:\Program Files\dotnet\shared\Microsoft.NETCore.App\1.1.1
```

to your application binary folder and then call

```
%USERPROFILE%\.nuget\packages\runtime.win7-x64.microsoft.netcore.runtime.coreclr\1.1.1\tools\crossgen.exe /JITPath "C:\Program Files\dotnet\shared\Microsoft.NETCore.App\1.1.1\clrjit.dll" /Platform_Assemblies_Paths "C:\Program Files\dotnet\shared\Microsoft.NETCore.App\1.1.1";%USERPROFILE%\.nuget\packages\System.Xml.XmlSerializer\4.3.0\lib\netstandard1.3
```

where you need to append the paths of the referenced NuGet packages to make everything work. If things do not work correctly, you can enable "Fusion"-style logging by setting the environment variable

```
COREHOST_TRACE=1
```

That, and Windbg of course, will give you more hints. Precompiling things in .NET Core is still a lot of trial and error until everything works, and I am not sure whether this is the currently recommended way.

Conclusions

Measuring and understanding the performance aspects of serializers is quite complex. For some reason the numbers measured by the authors of public serializer libraries always seem to prove that their own serializer is the fastest one. I have no affiliation with any of the library maintainers, so the presented tests should be the most neutral ones, and I tried hard to avoid obvious errors in my testing methodology. If you want to migrate from an existing type hierarchy, Protocol buffers and SlimSerializer look like fast replacements for BinaryFormatter. Jil is great if you serialize the public API surface of your data objects and do not need to serialize private fields or properties. Despite its claims, FastJSON did not lead in any metric in these tests. If I have made an error there, please drop me a note and I will correct the data. BinaryFormatter has a hideous O(n^2) deserialization time complexity which no one seems to have written about in public yet. At least with .NET Core things will become better. If you are deserializing larger object graphs, you now know why deserialization can take up to 40 minutes. Before trying out a new "fastest" serializer, be sure to measure by yourself, and do not choose a serializer just because it has fast in its name. There is a Fastest.Json serializer on NuGet which crashes the .NET Execution Engine during serialization, and its author never bothered to implement the deserialize part. That's all for today.



Hi! I’m the protobuf-net author – great post, but just to clarify: protobuf-net *does not* require a constructor – you just need to *tell* it to skip it – and it has support for “graph” serialization, but it *defaults* to tree, because that is how protobuf *usually* works. I try to be flexible 🙂


Hi Marc, I have updated the table to reflect that no default ctor is needed.

For the serializer type classification I intentionally named the column Default Serializer Type, and I added the column Explicit Graph Support to reflect that some (if not most) serializers are hybrids. That was already part of the original table. Great work on Protobuf.


You missed Manatee.Json…


Hi Greg, I have done a brief test. Your serializer would not look very good in these tests because you do not support streams, which would cause a lot of copying around. But there is an even bigger problem when serializing the Book objects. Your ReferencingSerializer calls `if (serializer.SerializationMap.Contains(obj))`, which scans your Dictionary with O(n) cost per lookup, so serialization becomes O(n^2) overall:

```csharp
internal class SerializationPairCache : Dictionary
{
    public bool Contains(object obj)
    {
        return Values.Any(v => ReferenceEquals(v.Object, obj));
    }
}
```

| Serializer | Objects | Time to serialize in s | | |
|---|---|---|---|---|
| ManateeJson | 1 | 0.000 | 16 | 6.0.0 |
| ManateeJson | 1 | 0.000 | 16 | 6.0.0 |
| ManateeJson | 10 | 0.000 | 61 | 6.0.0 |
| ManateeJson | 100 | 0.000 | 511 | 6.0.0 |
| ManateeJson | 500 | 0.002 | 2511 | 6.0.0 |
| ManateeJson | 1000 | 0.007 | 5011 | 6.0.0 |
| ManateeJson | 10000 | 0.584 | 50011 | 6.0.0 |
| ManateeJson | 50000 | 15.342 | 250011 | 6.0.0 |
| ManateeJson | 100000 | 66.184 | 500011 | 6.0.0 |
| ManateeJson | 200000 | 269.091 | 1000011 | 6.0.0 |

I like the object model and things like that but I think that this library needs some heavy tuning and stream support to efficiently support bigger object graphs.
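
The usual fix for this kind of hot spot is an identity-based set instead of a linear scan over the dictionary values. Below is a sketch of the difference. It assumes .NET 5 or later for `ReferenceEqualityComparer`; on older frameworks you would write a small comparer around `RuntimeHelpers.GetHashCode`. `ContainsByScan` is a hypothetical stand-in for the quoted `Contains`.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// O(n) per lookup: scans all cached entries, like the Values.Any(...) call quoted above.
bool ContainsByScan(List<object> cache, object obj) => cache.Any(v => ReferenceEquals(v, obj));

// O(1) on average: a set keyed by object identity (ReferenceEqualityComparer needs .NET 5+).
HashSet<object> CreateIdentitySet() => new HashSet<object>(ReferenceEqualityComparer.Instance);

var books = Enumerable.Range(0, 10_000).Select(i => new object()).ToList();
var seen = CreateIdentitySet();
foreach (var book in books) seen.Add(book);

Console.WriteLine(seen.Contains(books[books.Count - 1])); // True, found without scanning
Console.WriteLine(ContainsByScan(books, new object()));   // False, after 10 000 comparisons
```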


Thanks. It’s always nice to have constructive input.


Technically, it does support streams, but it’s during the parse, not the serialization.


Yes, I have noticed that. This is what my test class looked like:

```csharp
class ManateeJson : TestBase where T : class
{
    public ManateeJson(Func testData)
    {
        base.CreateNTestData = testData;
        FormatterFactory = () =>
        {
            var lret = new JsonSerializer();
            lret.Options.AutoSerializeFields = true;
            return lret;
        };
    }

    protected override void Serialize(T obj, Stream stream)
    {
        JsonValue jsonObj = Formatter.Serialize(obj);
        var strBytes = Encoding.UTF8.GetBytes(jsonObj.ToString());
        stream.Write(strBytes, 0, strBytes.Length);
    }

    protected override T Deserialize(Stream stream)
    {
        var reader = new StreamReader(stream);
        JsonValue val = JsonValue.Parse(reader);
        return Formatter.Deserialize(val);
    }
}
```

That always involves intermediary objects, which cost extra allocations and hence GC overhead. A more direct Deserialize/Serialize method that does not need to allocate extra strings would be great. So far I have only tested the serialize part. There, putting everything into one large string, which can fragment the Large Object Heap, and then converting it into a UTF-8 byte array is not the most performant approach. Streaming can help a lot to keep the intermediate objects small.
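
To illustrate what streaming means here, the sketch below writes the Bookshelf JSON piece by piece through a buffered `StreamWriter`, so no large intermediate string ever exists. `WriteBooks` is a hypothetical hand-written writer for the Book shape used in this post (no string escaping, no private fields), not a replacement for a real serializer.

```csharp
using System;
using System.IO;
using System.Text;

// Hypothetical writer: emits one small JSON fragment per book into a 64 KB buffered
// StreamWriter instead of building the whole document as one string first.
// Titles are written without escaping, which is fine only for the "Book N" test data.
void WriteBooks(Stream stream, int count)
{
    using var writer = new StreamWriter(stream, new UTF8Encoding(false), 64 * 1024, leaveOpen: true);
    writer.Write("{\"Books\":[");
    for (int id = 1; id <= count; id++)
    {
        if (id > 1) writer.Write(',');
        writer.Write("{\"Id\":");
        writer.Write(id);
        writer.Write(",\"Title\":\"Book ");
        writer.Write(id);
        writer.Write("\"}");
    }
    writer.Write("]}");
}   // disposing the writer flushes the remaining buffer into the stream

using var file = new MemoryStream();   // a FileStream works the same way
WriteBooks(file, 3);
Console.WriteLine($"{file.Length} bytes written");
```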


I see what you’re saying about heap fragmentation, but I would argue that each JsonValue in the overall structure is small (unlike a string, which would be one large contiguous block of data). This is easier for the GC to handle. Even serializing directly to a stream would create intermediates that the GC must collect.


Hi,

What about MsgPack and Wire?


I have added Wire and MsgPack to my test suite and updated the serialization graphs accordingly. Wire looks really fast, although it seems to have some problems with interface serialization that are not completely solved yet. For the BookShelf object, Wire is about 10% faster than protocol buffers, which is really impressive. But it has some rough edges.

MsgPack has pretty good serialization times but not so good deserialization times, which in my experience are more important. Normally you preprocess some input data once and produce output that is later read on demand, and that read should be as fast as possible. The only exception is network transfer, where you have to put equal emphasis on serialization and deserialization performance.


Have you tried MsgPack-CLI? Try this implementation: https://github.com/neuecc/MessagePack-CSharp/ It shows better results than Wire and Protobuf-net


Hi Alex,

I have tried it and it shows very good performance, but it does not seem to be production ready yet. I get an InvalidOperationException when trying to create a serializer for SerializerTests.TypesToSerialize.LargeBook, which has many private fields:

MsgPack.dll!MsgPack.Compiler.PackILGenerator.EmitPackCode(System.Type type, System.Reflection.MethodInfo mi, System.Reflection.Emit.ILGenerator il, System.Func targetMemberSelector, System.Func memberNameFormatter, System.Func lookupPackMethod)

MsgPack.dll!MsgPack.CompiledPacker.DynamicMethodPacker.CreatePacker(System.Type t, System.Reflection.Emit.DynamicMethod dm)

MsgPack.dll!MsgPack.CompiledPacker.DynamicMethodPacker.LookupPackMethod(System.Type t)

MsgPack.dll!MsgPack.Compiler.PackILGenerator.EmitPackMemberValueCode(System.Type type, System.Reflection.Emit.ILGenerator il, MsgPack.Compiler.Variable var_writer, MsgPack.Compiler.Variable var_obj, System.Reflection.MemberInfo m, MsgPack.Compiler.Variable elementIdx, System.Type currentType, System.Reflection.MethodInfo currentMethod, System.Func lookupPackMethod)

MsgPack.dll!MsgPack.Compiler.PackILGenerator.EmitPackCode(System.Type type, System.Reflection.MethodInfo mi, System.Reflection.Emit.ILGenerator il, System.Func targetMemberSelector, System.Func memberNameFormatter, System.Func lookupPackMethod)

MsgPack.dll!MsgPack.CompiledPacker.DynamicMethodPacker.CreatePacker(System.Type t, System.Reflection.Emit.DynamicMethod dm)

MsgPack.dll!MsgPack.CompiledPacker.DynamicMethodPacker.LookupPackMethod(System.Type t)

MsgPack.dll!MsgPack.Compiler.PackILGenerator.EmitPackMemberValueCode(System.Type type, System.Reflection.Emit.ILGenerator il, MsgPack.Compiler.Variable var_writer, MsgPack.Compiler.Variable var_obj, System.Reflection.MemberInfo m, MsgPack.Compiler.Variable elementIdx, System.Type currentType, System.Reflection.MethodInfo currentMethod, System.Func lookupPackMethod)

MsgPack.dll!MsgPack.Compiler.PackILGenerator.EmitPackCode(System.Type type, System.Reflection.MethodInfo mi, System.Reflection.Emit.ILGenerator il, System.Func targetMemberSelector, System.Func memberNameFormatter, System.Func lookupPackMethod)

MsgPack.dll!MsgPack.CompiledPacker.DynamicMethodPacker.CreatePacker(System.Type t, System.Reflection.Emit.DynamicMethod dm)

MsgPack.dll!MsgPack.CompiledPacker.DynamicMethodPacker.LookupPackMethod(System.Type t)

MsgPack.dll!MsgPack.Compiler.PackILGenerator.EmitPackMemberValueCode(System.Type type, System.Reflection.Emit.ILGenerator il, MsgPack.Compiler.Variable var_writer, MsgPack.Compiler.Variable var_obj, System.Reflection.MemberInfo m, MsgPack.Compiler.Variable elementIdx, System.Type currentType, System.Reflection.MethodInfo currentMethod, System.Func lookupPackMethod)

MsgPack.dll!MsgPack.Compiler.PackILGenerator.EmitPackArrayCode(System.Reflection.MethodInfo mi, System.Reflection.Emit.ILGenerator il, System.Type t, MsgPack.Compiler.Variable var_writer, MsgPack.Compiler.Variable var_obj, MsgPack.Compiler.Variable var_loop, System.Func lookupPackMethod)

MsgPack.dll!MsgPack.Compiler.PackILGenerator.EmitPackCode(System.Type type, System.Reflection.MethodInfo mi, System.Reflection.Emit.ILGenerator il, System.Func targetMemberSelector, System.Func memberNameFormatter, System.Func lookupPackMethod)

MsgPack.dll!MsgPack.CompiledPacker.DynamicMethodPacker.CreatePacker(System.Type t, System.Reflection.Emit.DynamicMethod dm)

MsgPack.dll!MsgPack.CompiledPacker.DynamicMethodPacker.LookupPackMethod(System.Type t)

MsgPack.dll!MsgPack.Compiler.PackILGenerator.EmitPackMemberValueCode(System.Type type, System.Reflection.Emit.ILGenerator il, MsgPack.Compiler.Variable var_writer, MsgPack.Compiler.Variable var_obj, System.Reflection.MemberInfo m, MsgPack.Compiler.Variable elementIdx, System.Type currentType, System.Reflection.MethodInfo currentMethod, System.Func lookupPackMethod)

MsgPack.dll!MsgPack.Compiler.PackILGenerator.EmitPackCode(System.Type type, System.Reflection.MethodInfo mi, System.Reflection.Emit.ILGenerator il, System.Func targetMemberSelector, System.Func memberNameFormatter, System.Func lookupPackMethod)

MsgPack.dll!MsgPack.CompiledPacker.DynamicMethodPacker.CreatePacker(System.Type t, System.Reflection.Emit.DynamicMethod dm)

MsgPack.dll!MsgPack.CompiledPacker.DynamicMethodPacker.LookupPackMethod(System.Type t)

MsgPack.dll!MsgPack.Compiler.PackILGenerator.EmitPackMemberValueCode(System.Type type, System.Reflection.Emit.ILGenerator il, MsgPack.Compiler.Variable var_writer, MsgPack.Compiler.Variable var_obj, System.Reflection.MemberInfo m, MsgPack.Compiler.Variable elementIdx, System.Type currentType, System.Reflection.MethodInfo currentMethod, System.Func lookupPackMethod)

MsgPack.dll!MsgPack.Compiler.PackILGenerator.EmitPackCode(System.Type type, System.Reflection.MethodInfo mi, System.Reflection.Emit.ILGenerator il, System.Func targetMemberSelector, System.Func memberNameFormatter, System.Func lookupPackMethod)

MsgPack.dll!MsgPack.CompiledPacker.DynamicMethodPacker.CreatePacker(System.Type t, System.Reflection.Emit.DynamicMethod dm)

MsgPack.dll!MsgPack.CompiledPacker.DynamicMethodPacker.CreatePacker_Internal()

MsgPack.dll!MsgPack.CompiledPacker.PackerBase.CreatePacker()

MsgPack.dll!MsgPack.CompiledPacker.Pack(System.IO.Stream strm, SerializerTests.TypesToSerialize.LargeBookShelf o)

SerializerTests.exe!SerializerTests.Serializers.MsgPack.Serialize(SerializerTests.TypesToSerialize.LargeBookShelf obj, System.IO.Stream stream) Line 21 C#


Alois,

Hi, thanks for the great work. For SlimSerializer the payload can be shrunk by passing KnownTypes and using BatchMode; this can improve performance by 20-40%.


[…] A guide to the performance of the various serialization methods in .NET. […]


Hi Alois,

Have you checked the performance and size of BinaryFormatter when implementing ISerializable and a private/protected constructor? It should give BinaryFormatter a fair chance.


Hi Aleh,

I have compared the serializers with one class that contains only the attributes necessary to make serialization work. Implementing the ISerializable interface only adds the method GetObjectData(SerializationInfo, StreamingContext), which will not make things faster unless you skip the serialization of members. See what Microsoft experts have to say about that interface at https://github.com/Microsoft/perfview/blob/master/src/FastSerialization/FastSerialization.cs.
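For comparison, this is roughly what the suggestion looks like: an ISerializable type with a protected deserialization constructor. Chapter is a hypothetical type. GetObjectData still has to add every member by name and value, which is why, as argued above, it only saves work if members are left out.

```csharp
using System;
using System.Runtime.Serialization;

// Hypothetical type illustrating ISerializable plus a protected deserialization constructor.
[Serializable]
public class Chapter : ISerializable
{
    public string Title { get; }
    public int PageCount { get; }

    public Chapter(string title, int pageCount)
    {
        Title = title;
        PageCount = pageCount;
    }

    // Called by the formatter during deserialization instead of setting fields via reflection.
    protected Chapter(SerializationInfo info, StreamingContext context)
    {
        Title = info.GetString(nameof(Title));
        PageCount = info.GetInt32(nameof(PageCount));
    }

    // One AddValue per member: every member is still recorded by name and value,
    // so the formatter has about as much to write unless members are skipped here.
    public virtual void GetObjectData(SerializationInfo info, StreamingContext context)
    {
        info.AddValue(nameof(Title), Title);
        info.AddValue(nameof(PageCount), PageCount);
    }
}
```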


[…] Serializer comparisons […]


A great article once again! Thank you Alois!


Great analysis with plenty of detail, and consideration given to all of the questions I had while looking for a good de/serializer. Thanks for the post! I really hope this stays up to date 🙂


I like this analysis. I’m looking to create yet another serializer that is largely as compatible with private constructors and data as BinaryFormatter, but much faster, while still running on .NET 3.5 and older, so this resource is valuable. My searches turned up a few serializers that aren’t mentioned in this comparison. In fact, every time I’ve searched over the past year or so, I’ve found new ones. I wonder whether an honourable mentions table (just below the versions you’ve used) listing the serializers you haven’t included, and why, would be helpful.

Also, do you take into account garbage collection cost? Some serializers will have a heavier GC cost than others, and this can sometimes end up not being counted. Ideally, you’d benchmark each serializer until you reach steady state memory usage and then do all the time measurements. That would obviously be slow.
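A minimal sketch of the kind of measurement suggested here: warm up first, start from a freshly collected heap, and record allocated bytes and GC collection counts alongside the elapsed time. GcAwareBenchmark and RunCost are hypothetical names, GC.GetAllocatedBytesForCurrentThread needs .NET Core 3.0 or later, and the fixed warm-up count is an assumption rather than real steady-state detection.

```csharp
using System;
using System.Diagnostics;

// Hypothetical result type: per-run averages for time and allocations, totals for GC counts.
sealed class RunCost
{
    public TimeSpan Elapsed;
    public long AllocatedBytes;
    public int Gen0, Gen1, Gen2;
}

// Hypothetical helper that makes GC cost visible next to wall-clock time.
static class GcAwareBenchmark
{
    public static RunCost Measure(Action action, int warmupRuns, int measuredRuns)
    {
        if (measuredRuns <= 0) throw new ArgumentOutOfRangeException(nameof(measuredRuns));

        // Warm-up: let JIT compilation, caches and heap sizing settle first.
        for (int i = 0; i < warmupRuns; i++) action();

        // Start from a clean heap so garbage from earlier runs is not billed to this one.
        GC.Collect();
        GC.WaitForPendingFinalizers();
        GC.Collect();

        int gen0 = GC.CollectionCount(0), gen1 = GC.CollectionCount(1), gen2 = GC.CollectionCount(2);
        long allocatedBefore = GC.GetAllocatedBytesForCurrentThread();
        var sw = Stopwatch.StartNew();

        // GC pauses triggered by the action's garbage fall inside the timed window.
        for (int i = 0; i < measuredRuns; i++) action();

        sw.Stop();
        return new RunCost
        {
            Elapsed = TimeSpan.FromTicks(sw.Elapsed.Ticks / measuredRuns),
            AllocatedBytes = (GC.GetAllocatedBytesForCurrentThread() - allocatedBefore) / measuredRuns,
            Gen0 = GC.CollectionCount(0) - gen0,
            Gen1 = GC.CollectionCount(1) - gen1,
            Gen2 = GC.CollectionCount(2) - gen2,
        };
    }
}
```

Reporting allocated bytes per run alongside the collection counts makes it visible when a serializer looks fast only because its garbage is collected after the timed window ends.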

Also, do you take care to lock your CPU multiplier and turn off background software when running the tests?

Here are the unmentioned ones:

https://github.com/Toxantron/CGbR

https://github.com/tomba/netserializer

https://github.com/salarcode/Bois

https://github.com/neuecc/ZeroFormatter (probably easy to dismiss due to it needing all accessors to be virtual)

https://servicestack.net/text (although ServiceStack sells software, this portion appears to be free to use)

(and the ones mentioned in other comments)

(also, if you make the table, the ones mentioned in the article)

I’d be happy to help with some of this, especially if you weren’t expecting to maintain a comparison in the long term.


I have added Bois, ZeroFormatter, ServiceStack.Text, GroBuf, FlatBuffers and MsgPack.Cli to the test suite (https://github.com/Alois-xx/SerializerTests/tree/master/Serializers).

I will update the blog post and graphs once I have a thorough understanding of all the new performance numbers, which are pretty impressive.


Now all results are online together with the released .NET 4.7.2.


(To add to my previous list: https://github.com/skbkontur/GroBuf )


Thanks! I will check these out and add them if they are comparable to or even better than protobuf performance-wise, while still being able to cope with my simple and complex serialization tests. At the latest, I will update the charts once .NET 4.7.2 comes out, which finally fixes BinaryFormatter (with a switch in your App.config).


Have you looked into http://google.github.io/flatbuffers/ or https://github.com/neuecc/ZeroFormatter? Both advertise better performance than protobuf.


FlatBuffers and ZeroFormatter do look very interesting. I will check them out and post the results with the next update of the article.


A big plus of protocol buffers in some cases is varints. It’s why I use protobuf instead of something like flatbuffers for games. If most of your data can be represented as integers then varints are usually going to win out.
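For readers unfamiliar with varints: Protocol Buffers stores unsigned integers in base-128 groups of 7 bits, least significant group first, with the high bit of each byte signalling that more bytes follow. Small values therefore take a single byte instead of a fixed 4 or 8. A minimal sketch (Varint is a hypothetical helper, not protobuf-net's API):

```csharp
using System;
using System.Collections.Generic;

// Hypothetical helper: unsigned base-128 varint encoding as used by Protocol Buffers.
static class Varint
{
    public static byte[] Encode(ulong value)
    {
        var bytes = new List<byte>(10);
        while (value >= 0x80)
        {
            bytes.Add((byte)(value | 0x80)); // low 7 bits plus the continuation flag
            value >>= 7;
        }
        bytes.Add((byte)value); // last byte: continuation flag clear
        return bytes.ToArray();
    }

    // Reads one varint starting at offset and advances offset past it.
    public static ulong Decode(byte[] data, ref int offset)
    {
        ulong result = 0;
        int shift = 0;
        while (true)
        {
            if (shift > 63) throw new FormatException("Varint is longer than 10 bytes.");
            byte b = data[offset++];
            result |= (ulong)(b & 0x7F) << shift;
            if ((b & 0x80) == 0) return result;
            shift += 7;
        }
    }
}
```

For example, 300 encodes as the two bytes 0xAC 0x02. Negative numbers written as plain int32/int64 varints always take 10 bytes, which is why protobuf offers the ZigZag-encoded sint32/sint64 types for signed data.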


[…] There have been many serializers added since the article https://aloiskraus.wordpress.com/2017/04/23/the-definitive-serialization-performance-guide/ was written which warrants a post on its own. The performance numbers were updated but not all of […]


As a replacement for BinaryFormatter (here for deep copying), expression trees do the job for moderate to large generic objects:

https://www.codeproject.com/Articles/1111658/Fast-Deep-Copy-by-Expression-Trees-C-Sharp
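The linked CodeProject article builds deep copies with expression trees. The core idea, compiling a copy delegate once per type and reusing it instead of round-tripping through a serializer, can be sketched as follows. ExpressionCopier and Novel are hypothetical names, and unlike the article this sketch only makes a shallow copy of public read/write properties; nested objects and fields are not cloned.

```csharp
using System;
using System.Linq;
using System.Linq.Expressions;
using System.Reflection;

// Hypothetical shallow copier: source => new T { P1 = source.P1, P2 = source.P2, ... }
static class ExpressionCopier<T> where T : new()
{
    // Built once per T on first use; reflection and compilation are paid a single time,
    // every copy afterwards is a plain delegate call. Copy(null) throws NullReferenceException.
    public static readonly Func<T, T> Copy = Build();

    private static Func<T, T> Build()
    {
        ParameterExpression source = Expression.Parameter(typeof(T), "source");

        // One "Prop = source.Prop" binding per non-indexed property with a public getter and setter.
        MemberBinding[] bindings = typeof(T)
            .GetProperties(BindingFlags.Public | BindingFlags.Instance)
            .Where(p => p.GetIndexParameters().Length == 0
                        && p.GetMethod != null && p.GetMethod.IsPublic
                        && p.SetMethod != null && p.SetMethod.IsPublic)
            .Select(p => (MemberBinding)Expression.Bind(p, Expression.Property(source, p)))
            .ToArray();

        MemberInitExpression body = Expression.MemberInit(Expression.New(typeof(T)), bindings);
        return Expression.Lambda<Func<T, T>>(body, source).Compile();
    }
}

// Hypothetical sample type for the usage example.
sealed class Novel
{
    public string Title { get; set; }
    public int Pages { get; set; }
    public string[] Tags { get; set; }
}
```

With this sketch, ExpressionCopier<Novel>.Copy(original) returns a new Novel whose Tags array is still shared with the original; a deep copier has to recurse into such references and handle cycles.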


Hi akraus1, thanks for the detailed article.

I have a question: why wasn’t Utf8Json considered?


The test suite has already been updated. Yes, it is the fastest serializer out there. When .NET Core 3 is out I will update the measurements along with other new serializers.


The Slim Serializer suite is now .NET Standard and runs on Core and Net FX.

Here:

https://github.com/azist/azos

When SlimSerializer is properly configured (KnownTypes, BatchMode types) it yields tiny datagrams, as type names are NOT emitted into the stream.

SlimSerializer is complemented by the Arow (Amorphopus data row) serializer, which yields 2x-3x the performance of Slim and supports version upgrades at the cost of Arow metadata decorations.

https://github.com/azist/azos/blob/master/src/testing/Azos.Tests.Nub/Serialization/ARowTests.cs


This is a really good resource, thank you for the hard work
