I like to experiment a lot, which often leads to surprising results. Sometimes I compare performance engineering to quantum mechanics: in quantum mechanics a measurable value (an observable) is not determined until the measurement is performed. I stretch quantum mechanics here and claim that you have bad performance if you never measure. Or to put it another way:

The probability of getting a well-performing system without quantitative, repeatable, regular performance measurements is zero.

When you look deep enough you will find things that are not so well known. Memory allocation and access performance is one such topic. It could fill whole books because it is so fundamental, yet most application developers are not aware of it.

What do I mean by that? Let's perform an experiment:

- Allocate 2000MB of memory.

- Measure the access performance of every 4096th byte of the allocated memory.

- Repeat the measurement a second time on the same memory to see whether the results are consistent.

Below is a small C++ application to do this:

    #include <chrono>
    #include <cstddef>
    #include <cstdio>

    class Stopwatch
    {
    public:
        Stopwatch() { _Start = std::chrono::high_resolution_clock::now(); }
        void Start() { _Start = std::chrono::high_resolution_clock::now(); }
        std::chrono::milliseconds Stop()
        {
            _Stop = std::chrono::high_resolution_clock::now();
            return std::chrono::duration_cast<std::chrono::milliseconds>(_Stop - _Start);
        }
    private:
        std::chrono::high_resolution_clock::time_point _Start;
        std::chrono::high_resolution_clock::time_point _Stop;
    };

    // MSVC specific: keep the optimizer from removing the otherwise unused reads
    #pragma optimize("", off)
    void Touch(void *p, size_t N)
    {
        char *pB = (char *)p;
        char tmp;
        for (size_t i = 0; i < N; i += 4096)
        {
            tmp = pB[i];
        }
    }
    #pragma optimize("", on)

    int main()
    {
        const int NBytes = 2 * 1000 * 1024 * 1024; // 2000 MB of memory
        char *bytes = new char[NBytes];

        Stopwatch sw;
        Touch(bytes, NBytes); // touch every 4096th byte (first access)
        auto ms = sw.Stop();
        printf("Did touch %d bytes in %lld ms\n", NBytes, (long long)ms.count());

        sw.Start();
        Touch(bytes, NBytes); // touch the same pages a second time
        ms = sw.Stop();
        printf("Did touch 2 %d bytes in %lld ms\n", NBytes, (long long)ms.count());

        delete[] bytes;
    }

When we execute it the numbers look promising:

    D:\>\MemAllocPerf\x64\Debug\MemAllocPerf.exe
    Did touch 2097152000 bytes in 13 ms
    Did touch 2 2097152000 bytes in 0 ms

13 ms for the first access and 0 ms for the second. This is pretty good even for a debug build. For completeness let's execute the same thing as a Release build, because everyone tells you that you should never ever trust performance values from debug builds.

    D:\>\MemAllocPerf\x64\Release\MemAllocPerf.exe
    Did touch 2097152000 bytes in 377 ms
    Did touch 2 2097152000 bytes in 0 ms

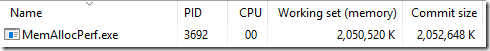

The second run still looks good, but what has happened to the first-access performance? The release build has become about 30 times slower! How can this be? Let's step through it with a debugger and check the memory consumption in Task Manager after the allocation but before we have touched the memory.

Debug

Release

Well, that is interesting. Both versions have committed 2000 MB of memory, but the debug version already has it in its working set. The release build consumes basically zero physical memory. Just in case you need a quick recap of what commit size and working set mean:

- Commit size is the amount of memory you allocated with new, malloc, calloc, GlobalAlloc, …

- Working set is the physical memory the operating system has assigned to your process (real RAM chip usage). The working set can be smaller than the commit size because the operating system can page out data of your process to make room for other memory-hungry applications.

OK, so this means the OS paged out my data for the release build? Well no, not in this case. There is a wrinkle to it. All operating systems try to be as lazy as possible and defer the costs of memory allocation and usage to the latest possible time.

The Operating System View Of Memory Allocation And Access

When an allocation happens the OS first needs to check whether the process has enough address space left for the allocation. This matters especially for x86 processes, which have at most 4 GB of address space: when the address space is fragmented we can run out of free address ranges for our allocation. Enough memory would be available in total, but there is no address space hole big enough to satisfy the reservation request.

I have never seen that happen in x64 processes, but it is a pretty common issue in x86 processes. In Windows lingo this first stage is called reserving memory. Apart from checking whether enough address space is available in the process nothing happens, which makes it a super fast operation.

The second stage is to commit memory. new[] and malloc will usually reserve and commit the memory in one go with a call to VirtualAlloc on Windows. This time the OS needs to do some further checks.

- Check if allocation size > Commit Limit

- The Commit Limit is the maximum memory all applications together can allocate. It is the sum of the physical memory + size of the page file.

- If the page file does not have a fixed size, the OS might need to grow the page file for the requested memory, which can take quite some time, to ensure that the allocation request can be served from physical or page-file-backed memory.
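The commit check can be sketched as a small bookkeeping model. This is only an illustration under simplifying assumptions: `CommitState`, `CommitLimit` and `TryCommit` are hypothetical names, not Windows APIs, and the model assumes the request has to fit into what is still left below the commit limit.

```cpp
#include <cassert>
#include <cstdint>

// Simplified model of the system-wide commit accounting (hypothetical, not a Windows API).
struct CommitState {
    std::uint64_t physicalBytes;   // installed RAM
    std::uint64_t pageFileBytes;   // current page file size
    std::uint64_t committedBytes;  // memory already committed by all processes
};

// Commit limit = physical memory + size of the page file.
std::uint64_t CommitLimit(const CommitState& s) {
    return s.physicalBytes + s.pageFileBytes;
}

// Try to commit requestBytes; succeeds only if the request still fits below the limit.
bool TryCommit(CommitState& s, std::uint64_t requestBytes) {
    assert(s.committedBytes <= CommitLimit(s));
    if (requestBytes > CommitLimit(s) - s.committedBytes) {
        return false;  // a real OS might first try to grow a non-fixed page file
    }
    s.committedBytes += requestBytes;
    return true;
}
```

With 16 GB of RAM, an 8 GB page file and 20 GB already committed, a 2 GB request succeeds in this model while a following 3 GB request fails, even though no memory has been touched yet.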

After reserving the address space and committing the memory the OS guarantees that your newly allocated memory can be served by the OS either from the page file or (more likely and performant) from physical memory.

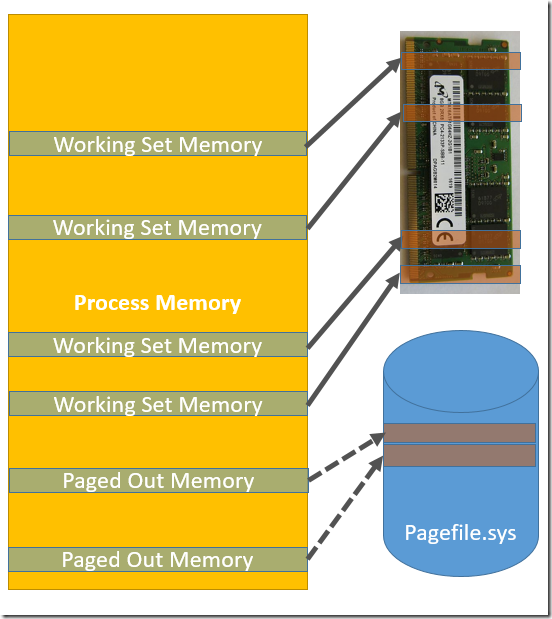

(RAM Image Source https://en.wikipedia.org/wiki/File:Laptop_RAM.jpg)

You see the dotted lines? The OS only guarantees that you can access the memory, but it is still not assigned to your process. The OS has returned a pointer to memory, but you still do not have the memory in your process. All memory pages in your process are still empty! Now comes the expensive part, and it involves no API call at all. To force the OS to actually assign the memory to your process you only need to access it. When you access an empty page the CPU triggers an exception (a page fault) and calls back into the operating system. At this point the OS actually assigns the memory to your process by updating the working set “database”, where the OS keeps track of which pages are backed by real memory or by the page file.

The operation that adds memory to your process working set is called a page fault. If only RAM needs to be assigned to your process it is called a soft page fault (fast). If you access paged out memory a hard page fault happens (slow, … very slow), which causes the OS to start a read operation from the hard disk, which can be a lengthy undertaking.

After the soft/hard page fault your application finally takes over and you can access the memory without any OS interference.

If the system runs low on memory, some of the least used memory pages are removed from your working set and their contents are put into the page file. I have indicated that with the dotted lines pointing to the page file in the picture above. The next time you access that memory you will get hard page faults, which are the source of most sluggish system behavior. This has become much less of a problem if you are lucky enough to have the page file on an SSD, which has pretty good random access times.

OK, that was a pretty long excursion into the details of memory management. But why is the debug build so much faster, and why is all of the memory already in our process working set right after the allocation if I use the debug build? When we examine the contents of the memory returned by the allocation request we find a byte pattern (cd cd).

If you look up what that means at https://stackoverflow.com/questions/370195/when-and-why-will-an-os-initialise-memory-to-0xcd-0xdd-etc-on-malloc-free-new you will find that the C runtime initializes and hence accesses the memory before returning the pointer to the calling code. That is the reason why the debug build was so much faster. The soft fault performance hit had already happened at allocation time because the memory was initialized to the CD CD byte pattern. This is one of the very few cases where the measured performance of a debug build is much better than that of a release build, because the most expensive part of memory access happened before we started the measurement.
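The life cycle of a page described so far can be condensed into a toy state machine. This is a deliberately simplified sketch, not how the Windows memory manager is implemented: `ToyAddressSpace`, `PageState` and `Access` are made-up names, and every trimmed page is assumed to go straight to the page file.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Toy model of the pages of one process; all names are illustrative only.
enum class PageState { Committed, Resident, PagedOut };
enum class Access { NoFault, SoftFault, HardFault };

class ToyAddressSpace {
public:
    explicit ToyAddressSpace(std::size_t pageCount)
        : pages_(pageCount, PageState::Committed) {}

    // Access a page and report what the OS had to do for it.
    Access Touch(std::size_t page) {
        assert(page < pages_.size());
        PageState& state = pages_[page];
        Access result = Access::NoFault;
        if (state == PageState::Committed) {
            result = Access::SoftFault;   // only RAM needs to be assigned
        } else if (state == PageState::PagedOut) {
            result = Access::HardFault;   // contents must be read back from the page file
        }
        state = PageState::Resident;
        return result;
    }

    // The OS removes a page from the working set and writes it to the page file.
    void PageOut(std::size_t page) {
        assert(page < pages_.size());
        if (pages_[page] == PageState::Resident) pages_[page] = PageState::PagedOut;
    }

    // Number of pages currently backed by physical memory.
    std::size_t WorkingSetPages() const {
        std::size_t count = 0;
        for (PageState s : pages_) {
            if (s == PageState::Resident) ++count;
        }
        return count;
    }

private:
    std::vector<PageState> pages_;
};
```

In this model a freshly committed page contributes nothing to the working set, the first `Touch` reports a soft fault, repeated touches are free, and a touch after `PageOut` reports a hard fault.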
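The effect of the debug fill can be imitated in a portable way. The sketch below is an assumption-laden illustration, not the C runtime's actual code: `MeasureFirstTouch` and `TouchPages` are hypothetical helpers, and the `memset` with 0xCD merely stands in for what a debug heap does before it hands out the pointer.

```cpp
#include <cassert>
#include <chrono>
#include <cstddef>
#include <cstring>
#include <memory>

struct TouchResult {
    std::size_t pagesTouched;
    std::chrono::microseconds elapsed;  // time spent in the touch loop only
};

// Read one byte per page; the volatile pointer keeps the compiler from dropping the reads.
std::size_t TouchPages(const unsigned char* buffer, std::size_t size, std::size_t pageSize) {
    assert(pageSize > 0);
    const volatile unsigned char* p = buffer;
    std::size_t pages = 0;
    for (std::size_t i = 0; i < size; i += pageSize) {
        (void)p[i];  // value is discarded, only the memory access matters
        ++pages;
    }
    return pages;
}

// Allocate size bytes, optionally fill them like a debug heap would (0xCD),
// then time only the page touch loop.
TouchResult MeasureFirstTouch(std::size_t size, bool debugStyleFill) {
    std::unique_ptr<unsigned char[]> buffer(new unsigned char[size]);
    if (debugStyleFill) {
        // All page faults happen here, before the stopwatch starts.
        std::memset(buffer.get(), 0xCD, size);
    }
    const auto start = std::chrono::steady_clock::now();
    const std::size_t pages = TouchPages(buffer.get(), size, 4096);
    const auto stop = std::chrono::steady_clock::now();
    return {pages, std::chrono::duration_cast<std::chrono::microseconds>(stop - start)};
}
```

Calling `MeasureFirstTouch(size, false)` and `MeasureFirstTouch(size, true)` with a large `size` should show the same pattern as the release and debug runs above, although the absolute numbers depend on the operating system and allocator.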

Memory Copy And Soft Fault Performance Do NOT Scale On Windows

That was an interesting case, but I am just getting started. Let's suppose we want to read a large file from disk as fast as possible. Most often the file was already accessed by the application some time ago and is still in the file system cache. If you execute the read operation, the OS has nothing to do except copy the memory from the file system cache to the buffer in your application. When the buffer was freshly allocated by new[], how fast can we get? Copying the data from the file system cache is a problem that calls for parallelization to speed things up. The interesting question is how fast we can get if multiple threads copy data from A to B in parallel.

Since memory access has some hidden costs upon first access, it makes sense to measure the memory copy performance once for a freshly allocated array and a second time with the same array. The test essentially should do the following:

1. Allocate a large array, e.g. 2000 MB

2. Fill it with random data; this will be our source array

3. Allocate a 2000 MB target array

4. Start 1-n threads

5. Each thread copies a sub range of source to destination

6. Measure the time for steps 4-5

7. Repeat steps 4-6 for a second measurement

First let's check the results on different CPUs when we hit a “warm” destination array which exhibits no soft page faults.
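The copy part of these steps could look roughly like the following portable sketch. It is not the code of the actual test application: `ParallelCopy` and `GigabytesPerSecond` are hypothetical helpers, and the split into equally sized contiguous sub ranges is an assumption.

```cpp
#include <algorithm>
#include <cassert>
#include <chrono>
#include <cstddef>
#include <cstring>
#include <thread>
#include <vector>

// Copy size bytes from src to dst with threadCount threads, each handling one
// contiguous sub range. Returns the elapsed wall clock time in seconds.
double ParallelCopy(const unsigned char* src, unsigned char* dst,
                    std::size_t size, unsigned threadCount) {
    assert(threadCount > 0);
    const std::size_t chunk = (size + threadCount - 1) / threadCount;  // rounded up
    std::vector<std::thread> workers;
    const auto start = std::chrono::steady_clock::now();
    for (unsigned t = 0; t < threadCount; ++t) {
        const std::size_t begin = std::min(size, t * chunk);
        const std::size_t end = std::min(size, begin + chunk);
        if (begin == end) continue;  // nothing left for this thread
        workers.emplace_back([=] { std::memcpy(dst + begin, src + begin, end - begin); });
    }
    for (std::thread& w : workers) w.join();
    const auto stop = std::chrono::steady_clock::now();
    return std::chrono::duration<double>(stop - start).count();
}

// Throughput in GB/s (10^9 bytes per second) for a copy of size bytes.
double GigabytesPerSecond(std::size_t size, double seconds) {
    return seconds > 0.0 ? static_cast<double>(size) / 1e9 / seconds : 0.0;
}
```

To include the soft faults in the first measurement, the destination must not have been touched before, so it should come from something like `new unsigned char[size]` and not from a `std::vector`, which zero-initializes its elements. Calling `ParallelCopy` a second time with the same destination then gives the “warm” number.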

From these numbers we can deduce the “raw” memory copy performance, which at some point saturates the CPU memory bus. The numbers obtained here differ by a large margin from the documented ones:

| CPU | Theoretical Memory Bandwidth (GB/s) | Max Memory Copy Performance (GB/s) |
| --- | --- | --- |
| I7 4770K | 25.6 | 9.3 |
| E5 2623 v3 | 59 | 10.3 |
| Xeon Gold 6148 | ??? | 40.8 |

At least for my home machine (the 4770K) I get ca. 9.3 GB/s, which is far below the theoretical 25.6 GB/s. I assume that the maximum memory bandwidth is specified for either read or write operations, but not for a parallel read/write operation as in a copy, which would mean that I can multiply my measured values by a factor of two. Then I arrive at ca. 18 GB/s, which seems OK if I attribute the rest to the cache controller, which also needs some memory bandwidth. The raw memory copy performance depends on the memory bus and the memory modules used. What happens when I remove one module from a fully populated memory bank? You guessed it: I did another experiment with my home machine. As expected, the memory copy performance dropped by 50% from 9.3 GB/s to 4.7 GB/s, which is a strong hint that applications which saturate the memory bandwidth should always run on machines with fully populated memory banks.

From the graph above it is clear that having more cores is better up to the point where the memory bandwidth is reached and more cores do not help anymore. The brand new Xeon Gold CPUs show impressive performance: up to 10 memcopy threads still add performance. Now let's change the use case and measure the first access, which includes soft page faults.

Well, that is interesting. Adding more cores degrades the soft page fault performance by a large margin. The speed gains from parallel memcpy are far smaller than one would expect. To isolate the issue we can measure the page touch time (soft fault performance) with an application similar to the one above, just with some multithreading added. We then get this:
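The bandwidth arithmetic can be written down as a small sketch. The memory configuration is my assumption and not stated above: the 25.6 GB/s figure matches dual-channel DDR3-1600 (1600 mega transfers per second over a 64-bit channel), and a drop to half the copy rate would be consistent with falling back to a single channel. `PeakBandwidthGBs` and `CopyBusTrafficGBs` are hypothetical helper names.

```cpp
#include <cassert>
#include <cmath>

// Peak bandwidth in GB/s = transfers per second * bytes per transfer * channels.
double PeakBandwidthGBs(double megaTransfersPerSecond, int busWidthBytes, int channels) {
    assert(busWidthBytes > 0 && channels > 0);
    return megaTransfersPerSecond * 1e6 * busWidthBytes * channels / 1e9;
}

// A copy reads every byte once and writes it once, so the bus traffic is
// twice the measured copy rate.
double CopyBusTrafficGBs(double copyRateGBs) {
    return 2.0 * copyRateGBs;
}
```

Under these assumptions `PeakBandwidthGBs(1600, 8, 2)` gives 25.6 GB/s and `PeakBandwidthGBs(1600, 8, 1)` gives 12.8 GB/s, while `CopyBusTrafficGBs(9.3)` gives 18.6 GB/s of combined read and write traffic for the measured copy rate.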

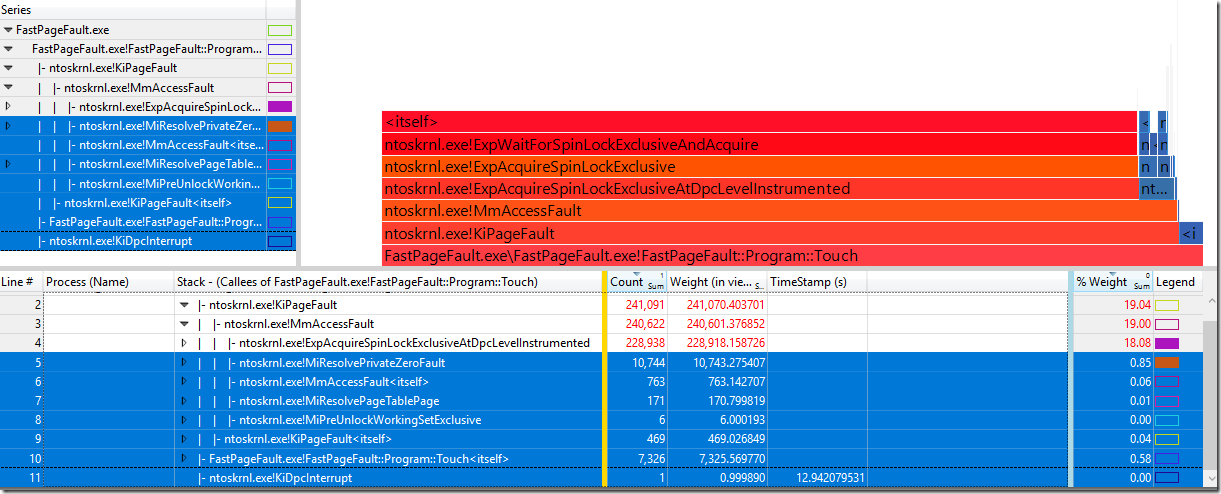

The soft page fault performance of Windows 10 does not scale with the number of cores. Instead it decreases with the number of cores! There seems to be a magic number of around 4 concurrent touch threads where we become faster, but once that valley is left the soft fault performance gets worse in all cases compared to the single-threaded use case. If something unexpected like this happens it is time to use a profiler to drill deeper. Below is a CPU sampling graph which shows the parallel page touch with 1-40 threads for a 2000 MB array.

As I feared, there is some internal locking in the Windows soft fault implementation which makes soft faulting a mostly single-threaded operation. If multiple threads try to access the memory we get high CPU times in the method ExpAcquireSpinLockExclusiveAtDpcLevelInstrumented, which wastes many CPU cycles spinning before it finally gives up, the signature of a highly contended lock. Remember: locks do not scale. That is the reason why the small amount of actual soft page fault work (yellow) stays constant while the overhead caused by the lock explodes. In my opinion this does not make much sense when we get more and more cores to play with while the soft fault implementation still uses a process-wide lock.

Another view of the same data, a Flame Graph, shows the overhead even better. The selected part below is the actual useful work and the huge rest is the overhead of the spinlock.

One would think that soft page fault handling is an essential OS service that should be implemented to be as fast as possible. Apparently that is not the case for Windows 8 and 10 (https://stackoverflow.com/questions/45024029/windows-10-poor-performance-compared-to-windows-7-page-fault-handling-is-not-sc). The issue described there was solved by using VirtualLock, which essentially soft faults the pages from one thread. That is much better than doing it concurrently from many threads, as you can see from the graph above. I do not know what you think, but I have the strong feeling that Microsoft should improve the soft page fault code to finally enter the multi-core era. It would be interesting to compare the numbers with Linux, because Linus Torvalds seems to be vigilantly looking at the soft page fault implementation in the Linux kernel.
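Why a single lock hurts can be illustrated with a user-mode analogy. This sketch has nothing to do with the actual kernel code: it only contrasts threads that serialize on one shared `std::mutex` with threads that work on private data and merge once at the end, and both function names are made up.

```cpp
#include <cassert>
#include <cstddef>
#include <mutex>
#include <thread>
#include <vector>

// Every thread updates one shared counter under one global lock
// (an analogy for a process-wide lock, not the kernel implementation).
std::size_t CountWithGlobalLock(unsigned threadCount, std::size_t itemsPerThread) {
    assert(threadCount > 0);
    std::mutex lock;
    std::size_t total = 0;
    std::vector<std::thread> workers;
    for (unsigned t = 0; t < threadCount; ++t) {
        workers.emplace_back([&lock, &total, itemsPerThread] {
            for (std::size_t i = 0; i < itemsPerThread; ++i) {
                std::lock_guard<std::mutex> guard(lock);  // all threads serialize here
                ++total;
            }
        });
    }
    for (std::thread& w : workers) w.join();
    return total;
}

// Every thread works on its own counter; the results are merged once at the end.
std::size_t CountWithoutSharedLock(unsigned threadCount, std::size_t itemsPerThread) {
    assert(threadCount > 0);
    std::vector<std::size_t> partial(threadCount, 0);
    std::vector<std::thread> workers;
    for (unsigned t = 0; t < threadCount; ++t) {
        workers.emplace_back([&partial, t, itemsPerThread] {
            std::size_t local = 0;
            for (std::size_t i = 0; i < itemsPerThread; ++i) ++local;
            partial[t] = local;  // one write per thread, no lock needed
        });
    }
    for (std::thread& w : workers) w.join();
    std::size_t total = 0;
    for (std::size_t p : partial) total += p;
    return total;
}
```

Both functions return the same total, but in the first one adding threads mostly adds waiting, because only one thread at a time can make progress inside the locked section.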

With SSDs getting nearly as fast as RAM the soft fault performance becomes a limiting factor in multithreaded applications which would otherwise be memory bus constrained.

Even seemingly simple things like allocating and accessing memory can have a dramatic impact on application performance and scalability. The test application from above is pretty simple, but if you start asking why the performance is as it is and you try to optimize it, you quickly reach operating system limits and not much later the limits of the hardware. That is actually a good thing because it means that your application is fast as hell.

If you want to test the soft page fault/memcopy performance for yourself you can check out https://github.com/Alois-xx/FastPageFault, which should be pretty self-explanatory. That is the application I used to produce the charts above.

Update 1

The Windows 10 Fall Creators Update contains a fix for the soft page fault performance. First some numbers from my home machine with 4 physical cores:

The use case was copying a 2000 MB buffer into not yet touched memory with 1-N threads.

Creators Update

Due to the internal locking we never got close to the actual memory bandwidth because the kernel was busy with its spinlocks while updating the data structures that add the pages to our process working set. The copy performance peaked around 6.5 GB/s.

Fall Creators Update

The soft page fault implementation is now much more scalable: we can max out our memory bandwidth of 9.x GB/s with only 3 cores while the soft page fault work is fully distributed across threads!

When we zoom deeper into the soft page fault performance we find that even the single thread soft fault performance has become 43% faster and it scales much better now.

Below is the Spinlock CPU vs actual work shown for Creators Update

and here for Fall Creators Update

The lock is still there, but much less time is spent in locking, which is a good thing. How did the MS engineers improve the soft page fault implementation by nearly a factor of two? To see this, the WPA diff view is helpful along with some Excel magic. The diff view in WPA is nice, but in my opinion it still lacks some important features:

- There is no way to mark methods which were removed or added except by drilling to the bottom of the call stacks which is very tedious

- I want to filter only for added/removed methods which would be very helpful to spot the actual difference and not the consequence deeper in the call stacks

Anyway. There is nothing Excel cannot fix for us. Below is the pimped diff view of the page touch performance test.

What stands out is that the Fall Creators Update soft page fault implementation makes far fewer method calls. The Windows kernel internal list (all list management methods marked in red) that maintained the page list was removed in favor of a simpler data structure with better cache locality and less locking. Besides getting rid of the kernel linked list, the biggest change seems to be that by default the page size has been increased from 4 KB to 64 KB, which means that the kernel needs to update the page table structures 16 times less often. At least that is my impression from noticing that the method MiGet64KPage consumes the most CPU and looks like it was introduced with the Fall Creators Update.
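The factor of 16 follows from simple arithmetic, sketched here for the 2000 MB test buffer. `UnitsNeeded` is a hypothetical helper, and treating each 4 KB or 64 KB unit as exactly one bookkeeping update is a simplification of whatever the kernel really does.

```cpp
#include <cassert>
#include <cstdint>

// Number of bookkeeping units needed to cover bytes at a given granularity (rounded up).
std::uint64_t UnitsNeeded(std::uint64_t bytes, std::uint64_t unitSize) {
    assert(unitSize > 0);
    return (bytes + unitSize - 1) / unitSize;
}
```

For 2000 MB (2097152000 bytes), `UnitsNeeded(bytes, 4 * 1024)` is 512000 and `UnitsNeeded(bytes, 64 * 1024)` is 32000. As a rough cross check with the release build run at the top, 377 ms for 512000 first touches is about 0.74 µs per 4 KB page.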

What about Windows Server?

According to MS support the soft page fault fix should have made it into Windows Server 1709, which is a desktop-less server best suited for container and cloud workloads. That is nice, but I need a fix for Server 2016. The Windows Server 2016 soft page fault performance affects all applications, especially the memory-hungry ones. Moving to an incompatible server edition which is still in beta and has a shorter long-term support contract is not an option.

It is pretty hard to get hard facts from MS support about which issue is fixed in which OS version. The question “Is the issue in that ticket fixed in the build I am running?” no longer seems to be easily answerable. That definitely should be improved.

Just curious, were these tests done on Server 1607 or 1709?

+1 for someone comparing this to Linux or FreeBSD on a comparably beefy Xeon/Epyc server.


The tests were done on Windows 10 Creators Update (15063.0.amd64fre.rs2_release.170317-1834) and Win Server 2016 14393.1715.amd64fre.rs1_release_inmarket.170906-1810
