this is about a case where I did wonder why my Windows installation at home is running fast but the Windows machine inside a company network is so slow, especially boot. “Nothing exists independently“, a verdict from Dalai Lama applies very much to performance engineering. I am not sure if he had that in mind but be prepared for unexpected twists during the following posts.

First a little background what you can expect in a corporate Windows network. Normally you have an Active Directory installation which takes care of user management. This involves rolling out central Group Policies defined by your administrators, deploying software centrally with e.g. MS System Center, rolling out virus scanners and virus pattern updates. This is happening in the background and you will not notice anything except that sometimes stuff is downloaded and installed and you are asked to reboot because some newly installed software or Windows update needs one.

After a reboot I was noticing that my machine was always working feverishly for the next 5 minutes on at least 2 cores. That is not good. Task manager was showing on my 4 Core notebook up to over 50% CPU utilization which means that I could barely work when something demanding was needed. The natural reaction to anyone who is dealing with performance issues on windows is to turn on ETW tracing. Why? Because the recording tool WPR is part of every Windows 10 installation. If you want to record for longer than one minute I would set the sampling rate down to 10ms to get not too much information like this:

C>wpr -start GeneralProfile -start CPU -start DiskIO -start FileIO -setprofint 100000

Reproduce Issue

C>wpr -stop c:\temp\PerfIssue.etl

The CPU profile of WPR will always record context switch events which is interesting but produces a lot of data. I am still not sold to wpr as the successor to xperf because you need to extract the builtin profiles of the exe, create your own derivative profile and then save it to a file which is error prone and not easy to tailor. But for short recording durations it is okay to record pretty much anything so I do not complain. When you want to analyze the data you still need to download the Windows Performance Toolkit (WPT) from the Windows 10 SDK. Then you can apply the OverView.wpaProfile profile from ETWController which comes with many useful stacktags located here: https://github.com/Alois-xx/etwcontroller/tree/master/ETWController/ETW (get the complete folder).

We see a large CPU activity in WmiPrvSE.exe, svchost.exe and lsass.exe. What is this all about? On a live machine you can use ProcessHacker (the current version is 3.0.2824 do not download the old version 2.xxx from 2016) to easily see which processes are consuming CPU. A very useful but little known feature is that you can filter by multiple processes separated with | in the input window on the toolbar. It filters on all visible columns.

When you hover over the processes you immediately see which service or WMI provider is hosted in this process which can be very helpful.

WmiPrVSE.exe is a WMI Provider host process where the WMI provider objects are living. Whenever you call into a WMI object the call ends up in one of the several provider processes. The list WMI Providers tells us that this process contains the Event log provider. Lets turn to the next most CPU consuming process

This is the Event Log service process. Looks like the WMI provider calls into the Windows Event Log Service. Interesting.

lasss.exe is the process which manages all security related things inside Windows. This one tells you if you get access denied or not.

The last one is a Windows services which is the WMI service. That are a lot of different processes which are consuming CPU. But why? It looks like some WMI stuff is dealing with the Event Log but how can we find out which WMI query this could be?

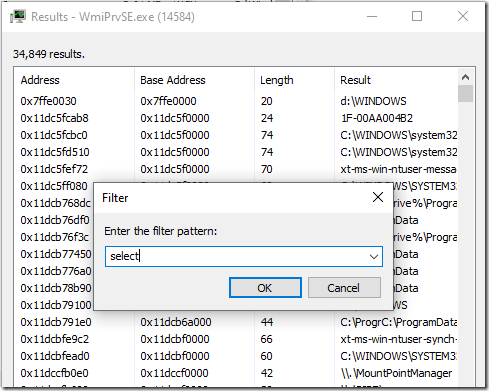

The brute force approach is to check the memory contents of the WmiPrvSE.exe process and search for WMI queries which contain e.g. the select keyword. ProcessHacker can actually scan for strings in any process. Lets do that as quick investigation:

And behold we immediately get a nice list of current and past queries executed by WMI:

How can we verify that which WMI query was so slow? We do not need to write a C++/C# application. Instead we use Powershell which can execute any WMI Query with the Get-WmiObject -query “select …” cmdlet. Lets try to query all Windows Event Logs for errors (eventtype=1) and measure its duration with Measure-Command :

PS C:\>Measure-Command -Expression { Get-WmiObject -query "select Eventcode,Eventtype,Logfile,Message,Sourcename,TimeGenerated,user from Win32_NTLogEvent where eventtype = 1" } Minutes : 4 Seconds : 5

Looks like we have found it. What is so slow with this WMI Query? The first observation is that the supplied query contains a time filter which tries to get events from the last 3 days. Normally you want to query a specific event log but this query reads from all event logs on the system all error (eventtype = 1) messages.

Up to now we have identified an expensive WMI Query which reads all error events from the last 3 days. When you have a performance problem you have two options now:

- Find out who did call the query and prevent it from running

- Identify the root cause of the bad WMI Event Log Query performance

Lets start with option 1. Since Windows 8.1 we can enable WMI Activity Tracing and query for the WMI Execution events.

To enable WMI Tracing execute this on an elevated command prompt

C>wevtutil sl Microsoft-Windows-WMI-Activity/Trace /e:true

To query for WMI Query execution events we need to filter for event 11 which contains also the initiating process id

C>wevtutil qe Microsoft-Windows-WMI-Activity/Trace /q:"*[System[EventID=11]]" /f:text | findstr /i ExecQuery CorrelationId = {00000000-0000-0000-0000-000000000000}; GroupOperationId = 42920; OperationId = 42932; Operation = Start IWbemServices::ExecQuery - root\cimv2 : SELECT * FROM Win32_PhysicalMedia WHERE Tag="\\\\.\\PHYSICALDRIVE1"; ClientMachine = SKYRMION; User = NT AUTHORITY\SYSTEM; ClientProcessId = 6276; NamespaceName = 132184730473612738 CorrelationId = {00000000-0000-0000-0000-000000000000}; GroupOperationId = 42992; OperationId = 42993; Operation = Start IWbemServices::ExecQuery - root\cimv2 : SELECT AdapterRAM FROM Win32_VideoController WHERE AdapterRAM >= 2147483648; ClientMachine = SKYRMION; User = NT AUTHORITY\SYSTEM; ClientPr ocessId = 6276; NamespaceName = 132184730473612738 CorrelationId = {00000000-0000-0000-0000-000000000000}; GroupOperationId = 42996; OperationId = 42997; Operation = Start IWbemServices::ExecQuery - root\cimv2 : SELECT AdapterRAM FROM Win32_VideoController WHERE AdapterRAM >= 1073741824; ClientMachine = SKYRMION; User = NT AUTHORITY\SYSTEM; ClientPr ocessId = 6276; NamespaceName = 132184730473612738 CorrelationId = {00000000-0000-0000-0000-000000000000}; GroupOperationId = 43000; OperationId = 43001; Operation = Start IWbemServices::ExecQuery - root\cimv2 : SELECT AdapterRAM FROM Win32_VideoController WHERE AdapterRAM >= 4293918720; ClientMachine = SKYRMION; User = NT AUTHORITY\SYSTEM; ClientPr ocessId = 6276; NamespaceName = 132184730473612738 CorrelationId = {00000000-0000-0000-0000-000000000000}; GroupOperationId = 43046; OperationId = 43047; Operation = Start IWbemServices::ExecQuery - root\cimv2 : SELECT AdapterRAM FROM Win32_VideoController WHERE AdapterRAM >= 2147483648; ClientMachine = SKYRMION; User = NT AUTHORITY\SYSTEM; ClientPr ocessId = 6276; NamespaceName = 132184730473612738 CorrelationId = {00000000-0000-0000-0000-000000000000}; GroupOperationId = 43050; OperationId = 43051; Operation = Start IWbemServices::ExecQuery - root\cimv2 : SELECT AdapterRAM FROM Win32_VideoController WHERE AdapterRAM >= 1073741824; ClientMachine = SKYRMION; User = NT AUTHORITY\SYSTEM; ClientPr ocessId = 6276; NamespaceName = 132184730473612738 CorrelationId = {00000000-0000-0000-0000-000000000000}; GroupOperationId = 43054; OperationId = 43055; Operation = Start IWbemServices::ExecQuery - root\cimv2 : SELECT AdapterRAM FROM Win32_VideoController WHERE AdapterRAM >= 4293918720; ClientMachine = SKYRMION; User = NT AUTHORITY\SYSTEM; ClientPr ocessId = 6276; NamespaceName = 132184730473612738 CorrelationId = {C9FD2187-9C4E-0004-3FBF-FEC94E9CD501}; GroupOperationId = 43058; OperationId = 43059; Operation = Start IWbemServices::ExecQuery - root\cimv2 : select Eventcode,Eventtype,Logfile,Message,Sourcename,TimeGenerated,user from Win32_NTLogEvent where (type = 2 ) ; ClientMachine = SKYRMION ; User = Skyrmion\Alois; ClientProcessId = 1112; NamespaceName = 132183970163500741

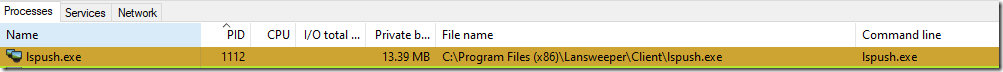

With the process ID it is trivial to identify which process did initiate the WMI Query. Who is it?

lspush.exe? Never heard of. This one is not from Microsoft

A search later we get to https://www.lansweeper.com/knowledgebase/how-to-scan-with-lspush/. Lansweeper is a network inventory tool which gathers client machine information on Windows in a general way by e.g. calling it at boot or login via a group policy. There is a free edition which I did install at home to reproduce the issue. And lspush did show the same behavior on my machine:

It is of course much easier to spot the issue when you see the Lansweeper UI where the scan operation is even visible in the UI. In corporate networks such things are normally run in the background and you need to resort to advanced tooling to drill deeper. I have contacted Lansweeper if there is something I could to about it. I have got as answer back that other customers have complained as well but this issue will only be tackled when enough customers do complain. Currently there is NO way to turn this offensive event log query off which is frustrating. I know Lansweeper has many customers but not many seem to have been able to correlate lspush induced CPU actvitiy in WMI related processes and Lansweeper yet. If you are a Lansweeper customer and you are using lspush and not the agent approach to scan your machines you definitely should reach out to their support to raise the priority of the backlog item. Lansweeper is used by many big companies (https://www.lansweeper.com/) where definitely more than one will experience this issue. When addressing this the first time to our IT guys they did not believe me because the Lansweeper UI shows also how long the scan was taking:

According to the Lansweeper UI my scan time is in the sub second region. What this report is failing to show are the scans which cannot be turned off and how long they did take…

In meantime if you are a Lansweeper customer measure how long lspush.exe is executing in your setup and call their support to prevent wasted CPU cycles at scale and bad user experience after logon. This issue is not happening everywhere which brings me back to point 2. Why is this Eventlog query so slow? This is the topic for the next post.

[…] The WMI Query From Hell (Alois Kraus) […]

LikeLike

The Microsoft-Windows-WMI-Activity events can be also captured via xperf and analyzed in WPA together with CPU sampling data to have it in 1 place::

https://superuser.com/a/949470

LikeLike

Hi Andres, thanks yes that is a good idea. You mean something like: https://github.com/Alois-xx/WMIWatcher

That should make such analysis much easier in the future. That is on my todo list to blog about it.

LikeLike

No need for a separate application, a good ol’ regions of interest file can do all the heavy lifting for you.

All you need to do is capture Microsoft-Windows-WMI-Activity, open the trace in WPA, and apply this XML file via Trace/Trace Properties/Regions of Interest Definitions/Add…: https://gist.github.com/Donpedro13/a9ec346f286334412a6bf25dcc435aeb

You can sort the regions by duration, that will pinpoint the culprit instantly.

Aside from sparing you the effort of writing a whole application, this also has the added benefit of easy correlation with other data in your trace, such as CPU usage.

LikeLike

@Donpedro: That is a nice one. I have tried your region file and found it to be working for some queries but not for all. If you execute e.g. a WMI query with powershell it will not be shown in your region at all because the operation ids will never match up. I did write the WmiWatcher application because I wanted all queries including the bad pollers which are started early from system services to get in an easy and automated way. It really depends how you execute your queries. So far I have not found an always working way to get the WMI query duration in all cases.

LikeLike

[…] the problems caused by WMI (either directly or indirectly) shows an increasing trend. Take this, this, or this case, for […]

LikeLike