With .NET Core 3.0 and .NET Framework 4.8 released, it is time to check again which serializer performs best. The older overviews still contain some interesting insights because the focus shifts a bit from year to year:

- https://aloiskraus.wordpress.com/2017/04/23/the-definitive-serialization-performance-guide/

- https://aloiskraus.wordpress.com/2018/05/06/serialization-performance-update-with-net-4-7-2/

Time moves on: some libraries are no longer maintained or are no longer compatible with .NET Core 3. These were removed:

| Name | Reason |
|---|---|
| ZeroFormatter | Project was abandoned by its maintainer in favor of MessagePack-CSharp (see GitHub issue). Crashes on .NET Core 3 with System.BadImageFormatException: ‘Bad IL format.’ |
| Wire | Deserialization fails. See Issue1 and Issue2. Besides this, there has been no project activity since 2017. |
| Hyperion | Serialization does not work because it accesses internal fields of Exceptions. See Issue. |

Tested Serializers

| Serializer | Data Format / Protocol | Version Tolerant | # Commits / Year of Last Commit (as of 8/2019) |
|---|---|---|---|
| Apex | Binary | No | 143 / 2019 |
| BinaryFormatter | Binary | Yes | ? |
| Bois | Binary | ? | 181 / 2019 |
| DataContract | Xml | Yes | ? |
| FastJson | Json | Yes | 138 / 2019 |
| FlatBuffer | Binary | Yes | 1701 / 2019 |
| GroBuf | Binary | Yes | 322 / 2019 |
| JIL | Json | Yes | 1272 / 2019 |
| Json.NET | Json | Yes | 1787 / 2019 |
| JsonSerializer (.NET Core 3.0) | Json | Yes | ? |
| MessagePackSharp | Binary, MessagePack | Yes | 715 / 2019 |
| MsgPack.Cli | Binary, MessagePack | Yes | 3296 / 2019 |
| Protobuf.NET | Binary, Protocol Buffer | Yes | 1018 / 2019 |
| ServiceStack | Json | Yes | 2526 / 2019 |
| SimdJsonSharp | Json | No | 74 / 2019 |
| SlimSerializer | Binary | No | ~100 / 2019 |
| UTF8Json | Json | Yes | 180 / 2019 |
| XmlSerializer | Xml | Yes | ? |

New additions since 2018 are Apex, SimdJsonSharp, Utf8Json and the JsonSerializer that ships with .NET Core 3.

Test Execution

You can clone my Serializer Testing Framework from https://github.com/Alois-xx/SerializerTests and compile it. Then execute RunAll.cmd from the SerializerTests directory, which runs the tests on .NET Framework and .NET Core 3. The output directory is placed one level above the source directory (e.g. SerializerTests\..\SerializerTests_hh_mm_ss.ss\) so that measured data is not checked in by accident.

Test Results

And here the same chart for .NET Framework 4.8, where some of the new .NET Core-only serializers are missing:

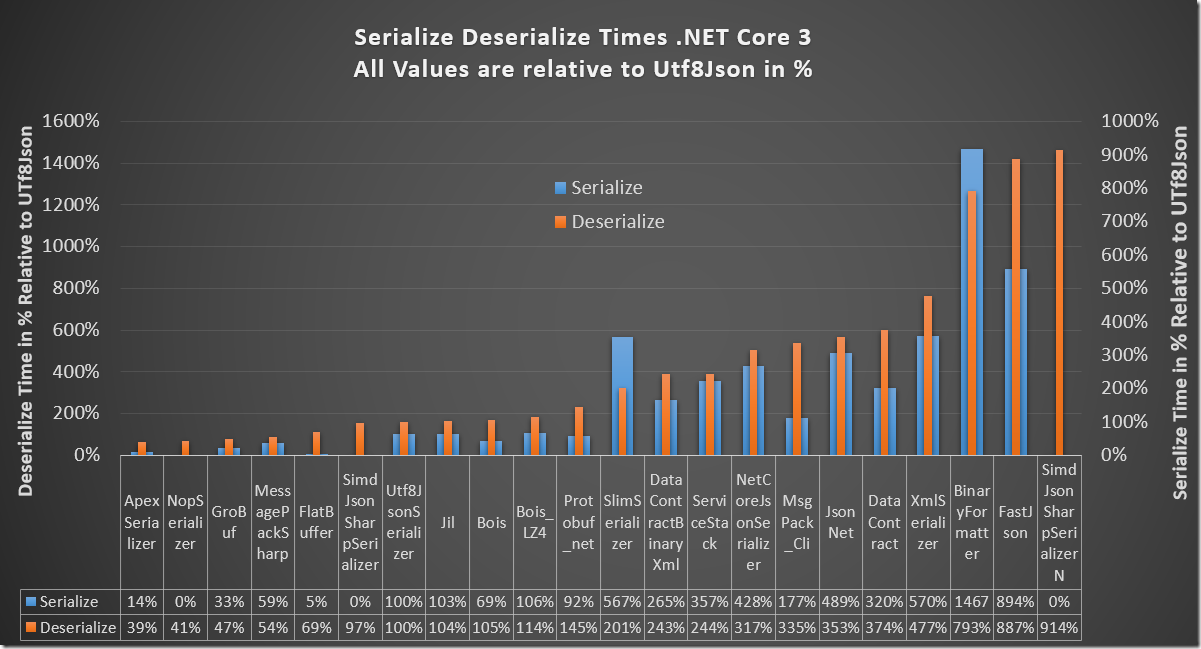

The times are shown for .NET Core 3.0 and .NET Framework 4.8 for 1 million objects, sorted by deserialize time. The tests were performed on my i7-4770K CPU @ 3.50GHz on Win 10.0.18362.356 (= 1903) with 16 GB RAM. Normally you read data more often than you write it, which is why I put the emphasis on the deserialize times. NopSerializer and SimdJsonSharpSerializer test only deserialize times, hence the 0 in the graph for their serialization times.

But You Are Doing It All Wrong Without Benchmark.NET

How accurate are the measured numbers? As usual, many factors such as virus scanners, Windows Update downloads and other background activity can cause timing differences of around 10%. For more memory-intensive (read: slower) serializers the difference can sometimes reach 30%, because page fault performance can vary quite a lot and depends on many factors. Although the absolute timings may vary, the relative ordering remains pretty stable when you measure at one point in time. The measurement error within one test run is < 5%, which is good enough to decide which serializer is faster for your specific use case. Every test was executed 5 times and the median value was taken. I have experimented with 10 or more runs, but the resulting numbers did not stabilize: if the test suite runs for an extended period of time (hours), the machine may reach different states (like downloading and unpacking a Windows or other application update), which introduced systematic errors. For that reason I went back to rather short test runs to ensure that the relative performance of the tested serializers stays comparable. And no, I have not used Benchmark.NET, because it is only good for micro benchmarks. It goes to great lengths to get stable numbers, but the price is that a test which runs for one microsecond is executed for several minutes. If the same approach were used for the serializers, the test suite would run for days, and you still would not know whether the numbers are repeatable the next day, because these are not real micro benchmarks which depend only on the CPU. Besides that, Benchmark.NET makes it pretty hard to measure first-time initialization effects such as the JIT overhead.
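The "run 5 times, take the median" approach can be sketched as a small C# 9+ top-level program. This is not the code of the test suite; the workload below is a hypothetical stand-in for a real de/serialize test. The point is that the median ignores a single outlier run (e.g. one disturbed by a background update), which a mean would not.

```csharp
using System;
using System.Collections.Generic;
using System.Diagnostics;

// Median of a set of measurements; sorts a copy so the caller's array stays untouched.
double Median(double[] values)
{
    var sorted = (double[])values.Clone();
    Array.Sort(sorted);
    int mid = sorted.Length / 2;
    return sorted.Length % 2 == 1 ? sorted[mid] : (sorted[mid - 1] + sorted[mid]) / 2;
}

// Times one test several times (5 by default, as in the article) and returns the median in seconds.
double MedianSeconds(Action test, int runs = 5)
{
    var times = new double[runs];
    for (int i = 0; i < runs; i++)
    {
        var sw = Stopwatch.StartNew();
        test();
        times[i] = sw.Elapsed.TotalSeconds;
    }
    return Median(times);
}

// Hypothetical workload standing in for "deserialize 1M objects".
double median = MedianSeconds(() =>
{
    var list = new List<string>();
    for (int i = 0; i < 100_000; i++) list.Add(i.ToString());
});
Console.WriteLine($"Median of 5 runs: {median:F4} s");
```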
I am aware that there is a repository of Benchmark.NET-enabled performance tests named dotnet/performance on GitHub. There I have added the .NET Core 3 test case for IndexViewModel, as it was mentioned in https://github.com/dotnet/performance/pull/456/commits, and with the Benchmark.NET tests I get only a perf increase of 30% with the new .NET Core 3 Json serializer. I find it strange that the current repo contains no tests for the new Json serializer of .NET Core 3. I have added the tests for the new Json serializer of .NET Core to the .NET test suite just like the existing ones:

```csharp
#if NETCOREAPP3_0
        [BenchmarkCategory(Categories.CoreFX)]
        [Benchmark(Description = "NetCoreJson")]
        public T NetCoreJson_()
        {
            memoryStream.Position = 0;
            return (T) System.Text.Json.JsonSerializer.DeserializeAsync(memoryStream, typeof(T)).Result;
        }
#endif

#if NETCOREAPP3_0
        [GlobalSetup(Target = nameof(NetCoreJson_))]
        public void SetupNetCoreJson()
        {
            memoryStream.Position = 0;
            System.Text.Json.JsonSerializer.SerializeAsync<T>(memoryStream, value).Wait();
        }
#endif
```

Json_FromStream<IndexViewModel>

| Method | Mean | Error | StdDev | Median | Min | Max | Gen 0 | Gen 1 | Gen 2 | Allocated |
|--------------------------- |----------:|---------:|---------:|----------:|----------:|----------:|--------:|-------:|------:|----------:|
| Jil | 64.25 us | 0.159 us | 0.124 us | 64.23 us | 64.07 us | 64.45 us | 6.4037 | 0.7684 | - | 26.7 KB |
| JSON.NET | 81.11 us | 0.808 us | 0.756 us | 81.12 us | 79.98 us | 82.31 us | 8.3682 | - | - | 34.27 KB |
| Utf8Json | 38.81 us | 0.530 us | 0.496 us | 38.66 us | 38.29 us | 39.76 us | 5.1306 | - | - | 21.58 KB |
| NetCoreJson | 49.78 us | 0.692 us | 0.648 us | 49.51 us | 49.07 us | 51.00 us | 5.3066 | 0.5896 | - | 21.91 KB |
| DataContractJsonSerializer | 343.39 us | 6.726 us | 6.907 us | 340.58 us | 335.44 us | 355.18 us | 20.3125 | 1.5625 | - | 88.38 KB |

Then I executed MicroBenchmarks.exe with test case #92, which runs the deserialization tests on IndexViewModel. In my test suite I only test stream-based serializers because I also want to support large datasets, which should not need to be transformed into a string before the serializer is willing to deserialize them. You can extend the Json_FromString benchmark in the same way, which leads to similar results:

Json_FromString<IndexViewModel>

| Method | Mean | Error | StdDev | Median | Min | Max | Gen 0 | Gen 1 | Gen 2 | Allocated |
|------------ |---------:|---------:|---------:|---------:|---------:|---------:|-------:|-------:|------:|----------:|
| NetCoreJson | 51.52 us | 0.498 us | 0.441 us | 51.49 us | 50.78 us | 52.53 us | 8.2508 | 0.4125 | - | 34.2 KB |
| Jil | 42.83 us | 0.414 us | 0.388 us | 42.73 us | 42.27 us | 43.56 us | 5.6662 | 0.6868 | - | 23.49 KB |
| JSON.NET | 77.07 us | 0.580 us | 0.542 us | 77.22 us | 76.19 us | 78.12 us | 7.6523 | 0.9183 | - | 31.31 KB |
| Utf8Json | 42.64 us | 0.263 us | 0.246 us | 42.65 us | 42.27 us | 43.06 us | 8.1967 | 0.3415 | - | 33.87 KB |

This emphasizes again that you should not blindly trust random performance numbers mentioned on GitHub. This one was created with MicroBenchmarks.exe --filter "MicroBenchmarks.Serializers.Json_FromString*" after adding NetCoreJson to the mix. There we again find a perf increase of 32% compared to Json.NET, measured with Benchmark.NET. But this is nowhere near the factor of 2 originally mentioned by Scott Hanselman. Perhaps at that point in time the serializer did not fully support all property types, which resulted in great numbers. To combat confirmation bias, I write the serialized data to disk so I can check the file contents and verify that all required data was indeed written and that, e.g., private fields were not omitted by this or that serializer, which is a common source of measurement errors when a serializer shows numbers that are too good.

Show Me The Real Numbers

The big pictures above need some serious explanation. In my first article I have shown that serialized data size and performance correlate. It is common wisdom that binary formats are superior to textual formats. As it turns out, this is still somewhat true, but the battle-tested binary protobuf-net serializer is beaten by 50% on .NET Core by Utf8Json, a Json serializer. Yoshifumi Kawai, who wrote MessagePackSharp, Utf8Json, ZeroFormatter and others, really knows how to write fast serializers. He is able to deliver best-in-class performance on both .NET Framework and .NET Core. I have chosen the fastest version-tolerant Json serializer, Utf8Json, and normalized all values to it. Utf8Json is therefore 100% for serialize and deserialize. If something is below 100%, it is faster than Utf8Json. If it is 200%, it is two times slower. Hopefully the pictures above now make more sense to you. Humans are notoriously bad at judging relative performance when large and small values sit next to each other; the graph should make it clear. The new Json serializer which comes with .NET Core 3 (NetCoreJsonSerializer) is faster than Json.NET, but not by a factor of 2 as the post of Scott Hanselman suggests. It is about 30% faster in the micro benchmarks and ca. 15% faster in my serializer test suite, which de/serializes larger datasets of 1M objects.
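The normalization is simple arithmetic: each time is divided by the Utf8Json time and multiplied by 100. A minimal C# 9+ sketch follows; only the 0.3 s Utf8Json deserialize time is a figure quoted in this article, while SerializerA and SerializerB with their timings are hypothetical.

```csharp
using System;
using System.Collections.Generic;

// Deserialize times in seconds. Utf8Json's 0.3 s is quoted in the article;
// SerializerA and SerializerB are hypothetical entries to show the arithmetic.
var deserializeSeconds = new Dictionary<string, double>
{
    ["Utf8Json"] = 0.30,
    ["SerializerA"] = 0.15,
    ["SerializerB"] = 0.60,
};

// Express each time relative to the baseline: 100% = as fast as the baseline, 200% = twice as slow.
Dictionary<string, double> NormalizeToBaseline(Dictionary<string, double> times, string baseline)
{
    double reference = times[baseline];
    var result = new Dictionary<string, double>();
    foreach (var (name, seconds) in times)
        result[name] = seconds / reference * 100.0;
    return result;
}

var relative = NormalizeToBaseline(deserializeSeconds, "Utf8Json");
foreach (var (name, percent) in relative)
    Console.WriteLine($"{name,-12} {percent,6:F0} %");
```

With these inputs SerializerA lands at 50% (twice as fast as Utf8Json) and SerializerB at 200% (twice as slow).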

Below is the same data presented as absolute times for .NET Core 3.0.0 when 1 million objects are de/serialized. I have grouped the serializers by their serialization format, which makes it easier to choose similar breeds if you want to stick with a specific (e.g. binary or textual) protocol. If you want to profile on your own, you can use the supplied SerializerTests\SerializerStack.stacktags file to visualize the performance data directly in CPU Sampling and Context Switch Tracing data.

Here is the same data for .NET Framework 4.8:

In terms of serialized data size, Bois_LZ4 is a special case: it is a compressing serializer with pretty good numbers. Since large parts of my BookShelf data are very similar, it can compress the data pretty well, which might matter if network bandwidth is relevant in your scenario and you cannot use HTTP compression.
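A quick way to see why repetitive data such as the BookShelf titles compresses so well is to compress a similar payload yourself. The C# 9+ sketch below uses GZipStream from System.IO.Compression as a stand-in, because LZ4 is not part of the base library; Bois_LZ4 uses LZ4, so the exact ratio and speed will differ. The "Book N" payload is a hypothetical approximation of the test data.

```csharp
using System;
using System.IO;
using System.IO.Compression;
using System.Text;

// Hypothetical payload resembling the BookShelf titles: many near-identical strings.
var sb = new StringBuilder();
for (int i = 1; i <= 10_000; i++)
    sb.Append("Book ").Append(i).Append('\n');
byte[] raw = Encoding.UTF8.GetBytes(sb.ToString());

// GZip is used only because it ships with .NET; it is a stand-in for LZ4 here.
byte[] Compress(byte[] data)
{
    using var output = new MemoryStream();
    using (var gzip = new GZipStream(output, CompressionLevel.Optimal, leaveOpen: true))
        gzip.Write(data, 0, data.Length);
    return output.ToArray();
}

byte[] compressed = Compress(raw);
Console.WriteLine($"raw {raw.Length} bytes, gzip {compressed.Length} bytes");
```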

Measurement Errors

Over the months I have also collected data with .NET 4.7.2, where the numbers were much better. It looked like .NET Framework 4.8 (4.8.4010.0) had a regression in Xml handling which led to slowdowns of up to 6 times. These manifested as large degradations in the DataContract and XmlSerializer based tests. You do not believe me? I have measured this! Unfortunately, I had recently played around a lot with debugging .NET (https://aloiskraus.wordpress.com/2019/09/08/how-net-4-8-can-break-your-application/), where I added .ini files to all of my GAC assemblies to make it easy to debug with full locals. This works great, but what happens when NGen updates its NGen images? It honors the .ini settings, which tell it not to inline or optimize code much. So no, this is not a regression of .NET 4.8; it was my error from playing around too much with JIT compiler flags. Now I have proof that method inlining is indeed very beneficial, especially for Xml parsers with many small methods, where it speeds up parsing by up to a factor of 6. There is always something you can learn from an error.

What Is The Theoretical Limit?

To test how fast a serializer can get, I have added NopSerializer, which tests only deserialization, because data is much more often read than written and deserialization is the more complex operation. Deserialization consists of two main stages:

- Data Parsing

- Object Creation

Because any serializer must parse Xml, Json or its own data format, we can test the theoretical limit by skipping the parsing step and measuring only object creation. At its core, the code just gets the strings out of a preparsed UTF-8 encoded byte array:

```csharp
// This will basically test the allocation performance of .NET, which will,
// when the allocation rate becomes too high, put Thread.Sleeps into the allocation calls.
for (int i = 0; i < myCount; i++)
{
    books.Add(new Book()
    {
        Id = i + 1,
        Title = Encoding.UTF8.GetString(myUtf8Data,
                                        (int)myStartIdxAndLength[i * 2],
                                        (int)myStartIdxAndLength[i * 2 + 1])
    });
}
```
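The loop above relies on fields of the test class. A self-contained sketch of the same idea, written as a C# 9+ top-level program, could look like the following. The buffer layout (all titles concatenated, with a start/length pair per title stored as long) and the count of 1,000 items are assumptions, not the test suite's actual setup. A value tuple replaces the article's Book class so the program needs no type declarations, which means only the title strings and the list itself are heap-allocated here.

```csharp
using System;
using System.Collections.Generic;
using System.Text;

// Hypothetical setup: one UTF-8 buffer with all titles back to back, plus a
// (start, length) pair per title, so "deserializing" needs no parsing at all.
const int myCount = 1000;
var utf8 = new List<byte>();
var myStartIdxAndLength = new long[myCount * 2];
for (int i = 0; i < myCount; i++)
{
    byte[] title = Encoding.UTF8.GetBytes($"Book {i + 1}");
    myStartIdxAndLength[i * 2] = utf8.Count;
    myStartIdxAndLength[i * 2 + 1] = title.Length;
    utf8.AddRange(title);
}
byte[] myUtf8Data = utf8.ToArray();

// The part that would be timed: only UTF-8 decoding and allocation remain.
var books = new List<(int Id, string Title)>(myCount);
for (int i = 0; i < myCount; i++)
{
    books.Add((i + 1, Encoding.UTF8.GetString(myUtf8Data,
        (int)myStartIdxAndLength[i * 2], (int)myStartIdxAndLength[i * 2 + 1])));
}
Console.WriteLine($"{books.Count} books, last title: {books[^1].Title}");
```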

For 1 million book objects, which consist only of an integer and a string of the form “Book dddd”, the deserialize time is ca. 0.12 s, which results in a deserialize performance of ca. 90 MB/s, which is not bad. If you look at the data charts above, you still find a faster one named ApexSerializer. Why is this one faster than the NopSerializer? Great question! When you parse a text file, it is usually stored as ASCII or UTF-8 text. But .NET and Windows use UTF-16 as their internal string representation. This means that after reading from a text file the data needs to be inflated from ASCII/UTF-8 (8 bits per char) to UTF-16 (16 bits per char). This is complex and is done in .NET with Encoding.UTF8.GetString. This conversion takes time and has become faster with .NET Core 3, which is great because it is a very central method called by virtually all code that reads text files. When we use the fastest Json serializer, Utf8Json, which needs 0.3 s, we find that 60% of the time is still spent parsing Json. This overhead could be reduced with SimdJsonSharp, which claims to give you GB/s Json parsing speed by using advanced bit fiddling magic and AVX128/256 SIMD instructions that can process up to 64 bytes within one or a few instruction cycles. So far the interop costs of the native version (SimdJsonSharpSerializerN) still far outweigh the benefits. The managed version (SimdJsonSharpSerializer) does pretty well, but it is only a few % faster than Utf8Json, which indicates that either I am using the library wrong or the generated code still needs more fine tuning. But be warned that SimdJsonSharpSerializer/N is NOT a serializer for generic objects. SimdJsonSharp gives you only the Json parser; you need to write the parsing logic yourself if you want to go this route.
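Both the UTF-8 to UTF-16 inflation and the 60% estimate can be checked with a few lines of C# (a C# 9+ top-level program; "Book 1234" is just an example title). Encoding.Unicode is .NET's UTF-16 little-endian encoding, so its byte count reflects the in-memory size of the decoded characters.

```csharp
using System;
using System.Text;

// One title as it sits in a UTF-8 file: one byte per ASCII character.
byte[] utf8Title = Encoding.UTF8.GetBytes("Book 1234");

// Decoding inflates it into a .NET string, which is UTF-16: two bytes per character.
string title = Encoding.UTF8.GetString(utf8Title);
int utf16Bytes = Encoding.Unicode.GetByteCount(title);
Console.WriteLine($"UTF-8: {utf8Title.Length} bytes, UTF-16: {utf16Bytes} bytes");

// Share of the Utf8Json time not explained by object creation, using the article's numbers.
double nopSeconds = 0.12, utf8JsonSeconds = 0.3;
double parsingShare = (utf8JsonSeconds - nopSeconds) / utf8JsonSeconds;
Console.WriteLine($"Parsing share: {parsingShare:P0}");
```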

Which One Is Fastest?

Binary

When raw speed is what you want and you accept keeping the serialized object layout fixed (no versioning!), Apex is worth a look. In the first table I have added a column showing whether the serializer supports versioning in one form or another. If it says No, expect crashes like ExecutionEngineException, StackOverflowException, AccessViolationException and other non-recoverable exceptions if anything in your execution environment changes. If speed is what you want, speed is what you get by memcopying the data to the target location.
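To illustrate why a memcopy-style format offers no version tolerance, here is a minimal C# 9+ sketch using MemoryMarshal from System.Runtime.InteropServices. It is not Apex's API and ignores everything a real serializer handles (strings, references, object graphs); it only shows the underlying idea that the raw memory layout is the format. The (Id, PageCount) and (Id, PageCount, Year) layouts are hypothetical.

```csharp
using System;
using System.Runtime.InteropServices;

// Hypothetical "book" records stored as (Id, PageCount) int pairs. The bytes carry no
// type info, field tags or version number: the memory layout IS the format.
int[] written = { 1, 300, 2, 250 };

// "Serialize": view the int array as raw bytes and copy them out.
byte[] Serialize(int[] values) => MemoryMarshal.AsBytes(values.AsSpan()).ToArray();

// "Deserialize": view the bytes as ints again and copy them into a new array.
int[] Deserialize(byte[] blob) => MemoryMarshal.Cast<byte, int>(blob.AsSpan()).ToArray();

byte[] blob = Serialize(written);
int[] roundTripped = Deserialize(blob);
Console.WriteLine($"{blob.Length} bytes, round trip ok: {roundTripped.AsSpan().SequenceEqual(written)}");

// A reader built for a newer layout (Id, PageCount, Year) gets no error at all:
// it reads the first book as (1, 300, 2), i.e. the second book's Id silently becomes a "Year".
int[] asNewerLayout = Deserialize(blob);
int misreadYear = asNewerLayout[2];
Console.WriteLine($"Misread Year: {misreadYear}");
```

In this toy case the layout mismatch only produces silently wrong data; in a real serializer that follows lengths or pointers stored in the blob, the same kind of mismatch can end in the crashes listed above.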

GroBuf is also worth a look, but it comes with a significantly bigger serialized binary blob. Only slightly slower is MessagePackSharp, which is written by the same author as Utf8Json and uses MessagePack, an open binary encoding format.
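To give an impression of what MessagePack looks like on the wire, the following hand-written C# 9+ sketch encodes a small map using three formats from the MessagePack specification: fixmap (0x80 | entry count), fixstr (0xA0 | byte length) and positive fixint (the value itself, 0 to 127). It is not the MessagePackSharp API, and the Id/Title object is a hypothetical example; the sketch simply compares the encoded size with the equivalent compact Json text.

```csharp
using System;
using System.Collections.Generic;
using System.Text;

// fixstr: 101xxxxx header with the UTF-8 byte length in the low 5 bits, then the bytes.
void WriteFixStr(List<byte> output, string s)
{
    byte[] utf8 = Encoding.UTF8.GetBytes(s);
    if (utf8.Length > 31) throw new ArgumentException("fixstr holds at most 31 bytes");
    output.Add((byte)(0xA0 | utf8.Length));
    output.AddRange(utf8);
}

// positive fixint: 0xxxxxxx, the byte is the value itself.
void WritePositiveFixInt(List<byte> output, int value)
{
    if (value < 0 || value > 127) throw new ArgumentOutOfRangeException(nameof(value));
    output.Add((byte)value);
}

var msg = new List<byte>();
msg.Add((byte)(0x80 | 2));       // fixmap: 1000xxxx with 2 key/value pairs
WriteFixStr(msg, "Id");
WritePositiveFixInt(msg, 1);
WriteFixStr(msg, "Title");
WriteFixStr(msg, "Book 1");

byte[] packed = msg.ToArray();
int jsonLength = Encoding.UTF8.GetByteCount("{\"Id\":1,\"Title\":\"Book 1\"}");
Console.WriteLine($"MessagePack: {packed.Length} bytes, Json: {jsonLength} bytes");
```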

Text

When the data format is Json, Utf8Json is the fastest one, followed by Jil, which is only 4% slower in this macro benchmark. If you measure the overhead for small objects with Benchmark.NET, Utf8Json is about 40% faster, which is not reflected by this macro benchmark. Benchmark.NET tries to warm up the CPU caches and the branch predictor as much as possible, which results in many CPU caching effects. This is great for optimizing small tight loops, but if you process larger chunks of memory, like 35 MB of Json for 1M Book objects, you get different numbers, because now a significant share of the memory bandwidth is no longer available to eagerly load data into the caches.

The Fastest Is Best Right?

Speed is great until you miss an essential feature in one of the tested serializers. If you are trying to replace something slow but very generic like BinaryFormatter, which can serialize virtually anything, you do not have many options, because most other serializers out there will not be able to serialize e.g. delegates or classes with interfaces that have different implementations behind each instance. At that point you are, for practical reasons, bound to BinaryFormatter. As the next best option you can try SlimSerializer, which also tries to be pretty generic at the cost of versioning support.

Things are easier if you start from DataContractSerializer. You can get pretty far with ProtoBuf, which eases the transition by supporting the same attributes as DataContractSerializer. But there are some rough edges around interface serialization: you can register only one implementing type for an interface, but that should be OK for most cases.

If you are starting from scratch and want to use Json, go for Utf8Json, which is generic and very fast. Its startup costs are also lower than those of Jil, because Utf8Json and MessagePackSharp (same author) seem to hit a sweet spot by having most code already in the main library and generating only a small amount of code around it. DataContractSerializer generates the de/serialize code on the fly, only for the code paths you actually hit.

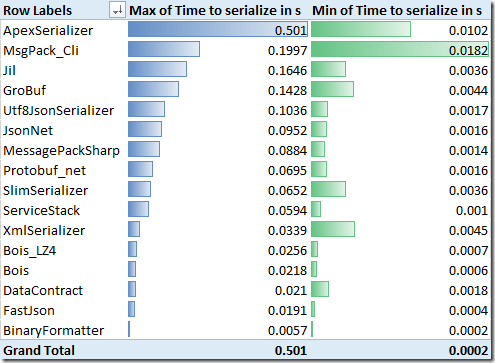

First Time Init Performance (Serialize One Object)

To make the tests comparable, every serializer runs in its own new process. This prevents the first serializer from paying for starting up the reflection infrastructure while serializers called later would benefit from the already warmed-up reflection infrastructure. Inside a fresh process four different types are serialized, and then the process exits. The measured time is not the executable runtime (.NET runtime startup is not measured) but how much time each serialize call needed. Below, the max (first run) serialize time for one small object is shown along with the min time for any of the other three objects, to give you an idea how much time the serializer needs for the first object and how much performance you can expect when you add new types to the mix.
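The measurement idea can be sketched in a few lines of C# 9+. This is not the code of the SerializerTests firstcall test; it uses System.Text.Json only as a convenient in-box example serializer, and the four anonymous types are hypothetical stand-ins for the test's data types. Run it in a fresh process, because a second run inside the same process would already be warm.

```csharp
using System;
using System.Diagnostics;
using System.Text.Json;

// Four differently shaped objects; hypothetical stand-ins for the test's data types.
object[] samples =
{
    new { Id = 1, Title = "Book 1" },
    new { Name = "Shelf", Count = 3 },
    new { When = DateTime.UnixEpoch, Price = 9.99m },
    new { Tags = new[] { "a", "b" } },
};

var seconds = new double[samples.Length];
for (int i = 0; i < samples.Length; i++)
{
    var sw = Stopwatch.StartNew();
    // Passing the runtime type means each new shape needs fresh serializer metadata.
    JsonSerializer.Serialize(samples[i], samples[i].GetType());
    seconds[i] = sw.Elapsed.TotalSeconds;
}

// First call (includes JIT and reflection warm-up) versus the fastest of the remaining calls.
double firstCall = seconds[0];
double fastestOther = double.MaxValue;
for (int i = 1; i < seconds.Length; i++) fastestOther = Math.Min(fastestOther, seconds[i]);
Console.WriteLine($"first call {firstCall * 1000:F1} ms, fastest later call {fastestOther * 1000:F2} ms");
```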

You can also execute this manually: RunAll.cmd runs all tests, including the startup timing tests, and writes the results as CSV files to the output folder. If you want to go into detail, use the command line:

```
D:\Source\git\SerializerTests\bin\Release\net472>SerializerTests.exe -test firstcall
Serializer Objects "Time to serialize in s" "Size in bytes" FileVersion Framework ProjectHome DataFormat FormatDetails Supports Versioning
ApexSerializer 1 0.1803 84 1.3.0.0 .NET Framework 4.8.4010.0 https://github.com/dbolin/Apex.Serialization Binary No
ApexSerializer 1 0.0331 86 1.3.0.0 .NET Framework 4.8.4010.0 https://github.com/dbolin/Apex.Serialization Binary No
ApexSerializer 1 0.0344 86 1.3.0.0 .NET Framework 4.8.4010.0 https://github.com/dbolin/Apex.Serialization Binary No
ApexSerializer 1 0.1089 148 1.3.0.0 .NET Framework 4.8.4010.0 https://github.com/dbolin/Apex.Serialization Binary No
ServiceStack 1 0.0200 69 5.5.0.0 .NET Framework 4.8.4010.0 https://github.com/ServiceStack/ServiceStack.Text Text Json Yes
ServiceStack 1 0.0020 70 5.5.0.0 .NET Framework 4.8.4010.0 https://github.com/ServiceStack/ServiceStack.Text Text Json Yes
ServiceStack 1 0.0020 70 5.5.0.0 .NET Framework 4.8.4010.0 https://github.com/ServiceStack/ServiceStack.Text Text Json Yes
ServiceStack 1 0.0091 117 5.5.0.0 .NET Framework 4.8.4010.0 https://github.com/ServiceStack/ServiceStack.Text Text Json Yes
```

…

.NET Framework Everything NGenned

.NET Framework Only Default Assemblies NGenned

.NET Core 3 – Defaults

The pictures show that JIT compilation takes a noticeable amount of time, which is usually “fixed” with NGen on .NET Framework or Crossgen on .NET Core. ApexSerializer in particular needs 500 ms to start on .NET Core 3, or even 1 s on .NET Framework, if the assembly and its dependencies are not precompiled. But if you NGen it, the times are 10 times better for Apex, which is great. If you are trying to choose the best serializer for reading your configuration values during application startup, this metric becomes important, because the startup costs of the serializer might be higher than the de/serialize times of your data. Performance is always about making the right tradeoffs in the right places.

Conclusions

Although I have tried my best to get the data right: do not believe me. Measure for yourself in your environment with data types representative of your code base. My testing framework can help here because it is easily extendable: you can plug in your own data types and let the serializers run. There can be rough spots in how some of the serializers treat DateTime, TimeSpan, float, double, decimal or other data types. Do your classes have public default ctors? Not all serializers support private ctors, or you need to add some attribute to your class to make it work. These are still things you need to test. If you find some oddity, please leave a comment so others do not fall into the same trap. Collecting the data and verifying many times with ETW tracing that I really measure the right thing was already pretty time consuming. Working around all the peculiarities of the serializers is left as an exercise to you.
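A quick round-trip check for such data types can be sketched like this (a C# 9+ top-level program). System.Text.Json is used only as an example; swap in the serializer you are evaluating and extend the list with the types your code base actually uses (e.g. TimeSpan or float), since support for those varies between serializers and versions.

```csharp
using System;
using System.Collections.Generic;
using System.Text.Json;

// Serializes a value, reads it back and reports whether the result compares equal.
bool RoundTrips<T>(T value)
{
    string json = JsonSerializer.Serialize(value);
    T back = JsonSerializer.Deserialize<T>(json);
    return EqualityComparer<T>.Default.Equals(value, back);
}

bool dateOk = RoundTrips(new DateTime(2019, 9, 29, 12, 0, 0, DateTimeKind.Utc));
bool doubleOk = RoundTrips(0.1 + 0.2);   // 0.30000000000000004 must survive exactly
bool decimalOk = RoundTrips(1.10m);
Console.WriteLine($"DateTime {dateOk}, double {doubleOk}, decimal {decimalOk}");
```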

After doing much performance work during my professional life I think I have understood why performance is so hard:

Number of ways to make something slow: ∞

Number of ways to make it fastest: O(1)

When in doubt, infinity wins. But even when you have created the fastest possible solution, it will not stay there, because the load profile will change or your application will run on new hardware with new capabilities. That makes performance a moving target. The time dependency of O(1) makes sure that I never run out of work.


Great article, lots of information. I liked that you show data and explain the tradeoffs one can make when picking serializers. As a junior engineer I did not understand all of it, but it gave me some things to google. I will definitely check in for ‘.NET Serialization Benchmark 2020 Roundup’ ^^


[…] .NET Serialization Benchmark 2019 Roundup by Alois Kraus […]


The Hyperion issue has since been resolved, hopefully it will find its way back in to the next roundup.


You might also look at the Azos.Arow serializer, which supports versioning and is similar to Protobuf.


Thanks. I will take a look.


The ZeroFormatter issue on .net core 3 can be resolved by changing one line in dynamicFormatter to call a VirtualFunc instead of a Func to Serialize. Ping me for more details if needed


Can you add https://github.com/Tornhoof/SpanJson to the comparison list, please?


It already is part of the Github project. See https://github.com/Alois-xx/SerializerTests and run the tests for yourself. As usual do not trust the number blindly. Try your specific datatypes and the landscape might be very different. See e.g. this Utf8Json issue where it is over two times slower to serialize byte arrays: https://github.com/neuecc/Utf8Json/issues/177. That can ruin perf for specific use cases where you e.g. send images as byte arrays over the wire and only some prefix data in a Json message.


Note: I am a member of the .NET Core team at Microsoft and work on the System.Text.Json library (above this is labeled as “NetCoreJson”).

Another pass of these benchmarks may be useful. In NET Core 3.1 there were several perf improvements – see https://github.com/dotnet/corefx/pull/41771. Depending on the scenario, serialization should be ~1.2x to ~1.5x faster than 3.0 and deserialization ~1.2x faster than 3.0.

Also, the System.Text.Json serializer supports a true Stream-based async mode where it does not “drain” the Stream upfront during deserialization (it does not simply convert all the JSON to a byte[] or String and then process it) and during serialization occasionally flushes the underlying Stream.

The performance investment continues into 5.0. Currently, we have significantly improved performance of large collections (CPU and memory savings) and have overall improvements during serialization because of increased performance of the escaping logic.


[…] binary serializers. Alois Kraus did an excellent comprehensive test of the most popular .NET serializers, including […]


[…] .NET Serialization Benchmark – https://aloiskraus.wordpress.com/2019/09/29/net-serialization-benchmark-2019-roundup/ * ZString – https://github.com/Cysharp/ZString * Fast MessagePack Serializer – […]


I love this benchmark. I’ve noticed “NopSerializer”, but I can’t find a link to a repository. The source code is in Alois’ GitHub, however. Where is NopSerializer from?


You need to read the article then you will find the explanation.


By the way, I forgot to ask: is it possible to include Protobuf, Capnproto and Flatbuffers? They may have questionable performance, but they are available for multiple languages. Moreover, Protobuf and Flatbuffers are backed by big companies (Google and Facebook respectively), which means their support won’t drop randomly like that of many open source libs. Thank you! 🙂


Protobuf and FlatBuffers are already part of the test suite. CapnProto looks interesting. I will try to add it.


What is NopSerializer exactly?


NopSerializer just reads the data and creates the objects directly. It has zero parsing overhead and is practically an allocation benchmark showing the lower limit of how fast one can get with .NET. It is not a real serializer you can use anywhere.


Oh, got it. Thanks for a great article by the way. Really helped me make a decision.
